Linear Systems of Algebraic Equations

This page presents some topics from Linear Algebra needed for construction of solutions to systems of linear algebraic equations and some applications. We use matrices and vectors as essential elements in obtaining and expressing the solutions.

Matrices and Vectors

The central problem of linear algebra is to solve a linear system of equations. This means that the unknowns are only multiplied by numbers.

Our first example of a linear system has two equations in two unknowns:

\[

\begin{cases}

x-3y &= -1 , \\

2x+y &=5 .

\end{cases}

\]

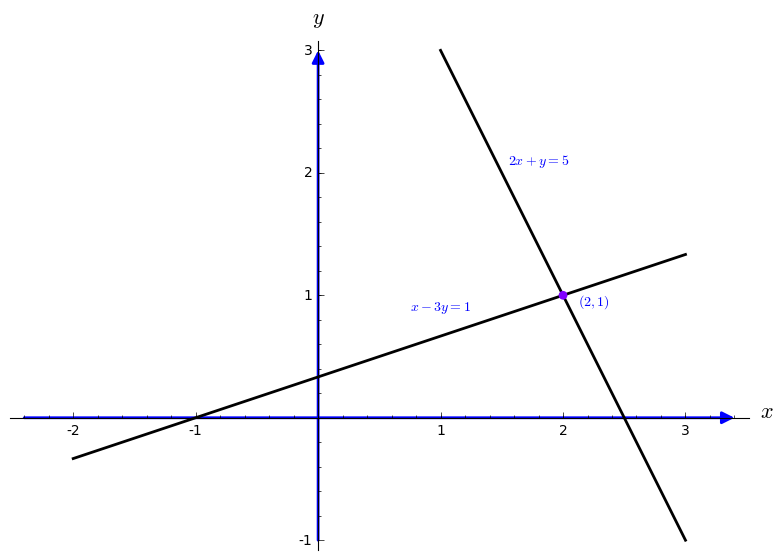

We begin with a row at a time. The first equation \( x-3y=-1 \) produces a straight line in the

\( xy- \) plane. The second equation \( 2x+y=5 \) defines another

line. By plotting these two lines, you cannot miss the intersection point where two lines meet. The point

\( x=2, \ y=1 \) lies on both lines. That point solves both equations at once.

Now we visualize this with a picture:

sage: l1=line([(-2,-1/3), (3,4/3)],thickness=2,color="black")

sage: l2=line([(1,3), (3,-1)],thickness=2,color="black")

sage: ah = arrow((-2.4,0), (3.4,0))

sage: av = arrow((0,-1), (0,3))

sage: P=circle((2.0,1.0),0.03, rgbcolor=hue(0.75), fill=True)

sage: t1=text(r"$(2,1)$",(2.25,0.95))

sage: t2=text(r"$x-3y=1$",(1.0,0.9))

sage: t2=text(r"$x-3y=1$",(1.0,0.9))

sage: t3=text(r"$2x+y=5$",(1.8,2.1))

sage: show(l1+l2+ah+av+t1+t2+t3+P,axes_labels=['$x$','$y$'])

Now we plot another graph:

sage: ah = arrow((-3.1,0), (2.2,0))

sage: av = arrow((0,-0.2), (0,5.1))

sage: a1=arrow([0,0],[-3,1], width=2, arrowsize=3,color="black")

sage: a2=arrow([0,0],[1,2], width=2, arrowsize=3,color="black")

sage: a3=arrow([0,0],[2,4], width=2, arrowsize=3,color="black")

sage: a4=arrow([0,0],[-1,5], width=2, arrowsize=3,color="black")

sage: l1=line([(-3,1), (-1,5)],color="blue",linestyle="dashed")

sage: l2=line([(2,4), (-1,5)],color="blue",linestyle="dashed")

sage: t1=text(r"${\bf v}_1$",(-3,0.85),color="black")

sage: t2=text(r"${\bf v}_2$",(1.1,1.85),color="black")

sage: t3=text(r"$2{\bf v}_2$",(2.05,3.75),color="black")

sage: t4=text(r"${\bf v}_1 + 2{\bf v}_2$",(-0.75,4.75),color="black")

sage: show(av+ah+a1+a2+a3+a4+l1+l2+t1+t2+t3+t4,axes_labels=['$x$','$y$'])

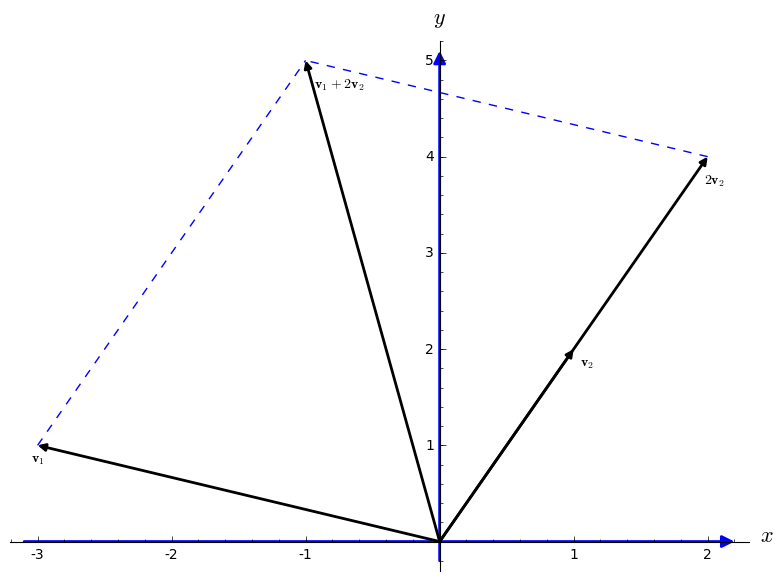

The row picture shows that two lines meet at a single point. Then we turn to the column picture by rewriting the system in vector form:

\[

x \begin{bmatrix}

1 \\

2

\end{bmatrix} + y \begin{bmatrix}

-3 \\

1

\end{bmatrix} = \begin{bmatrix}

-1 \\

5

\end{bmatrix} = {\bf b} .

\]

This has two columns on the left.

The problem is to find the combination of those vectors that equals the vector on the right.

We are multiplying the first column by \( x \) and the second by \( y ,\) and adding.

The figure at the right illustrates two basic operations with vectors: scalar multiplication and vector addition. With these two operations, we are able to build linear combination of vectors:

\[

2 \begin{bmatrix}

1 \\

2

\end{bmatrix} + 1 \begin{bmatrix}

-3 \\

1

\end{bmatrix} = \begin{bmatrix}

-1 \\

5

\end{bmatrix} .

\]

The

coefficient matrix on the left side of the equations is 2 by 2 matrix

A:

\[

{\bf A} = \begin{bmatrix}

1 & -3 \\

2 & 1

\end{bmatrix} .

\]

This allows us to rewrite the given system of linear equations in vector form (also sometimes called the matrix form, but we reserve the matrix form for matrix equations)

\[

{\bf A} \, {\bf x} = {\bf b} ,\qquad\mbox{which is }\quad \begin{bmatrix}

1 & -3 \\

2 & 1

\end{bmatrix} \, \begin{bmatrix}

x \\

y

\end{bmatrix} = \begin{bmatrix}

-1 \\

5

\end{bmatrix} .

\]

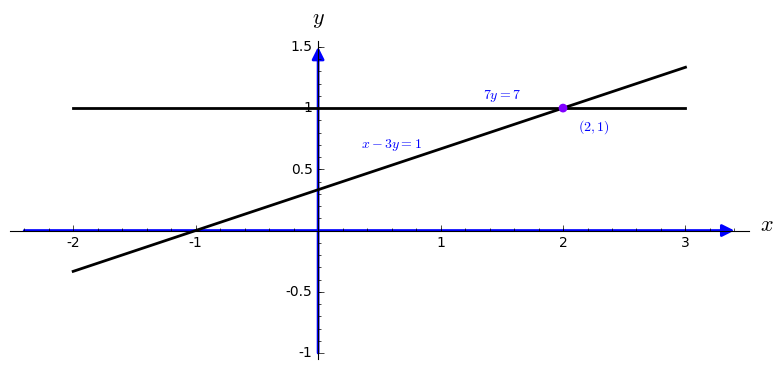

We use the above linear equation to demonstrate a great technique, called elimination. Before elimination,

\( x \)

and

\( y \) appear in both equations. After elimination, the first unknown

\( x \)

has disappeared from the second equation:

\[

{\bf Before: }\quad \begin{cases} x-3y &= -1 , \\

2x+y &=5 .

\end{cases} \qquad {\bf After: } \quad \begin{cases} x-3y &= -1 , \\

7\,y &=7

\end{cases} \qquad \begin{array}{l} \mbox{(multiply by 2 and subtract)} \\

(x \mbox{ has been eliminated).}

\end{array}

\]

The last equation

\( 7\,y =7 \) instantly gives

\( y=1. \) Substituting for

\( y \) in the first equation leaves

\( x -3 = -1. \) Therefore,

\( x=2 \) and the solution

\( (x,y) =(2,1) \) is complete.

sage: l1=line([(-2,-1/3), (3,4/3)],thickness=2,color="black")

sage: l2=line([(-2,1), (3,1)],thickness=2,color="black")

sage: ah = arrow((-2.4,0), (3.4,0))

sage: av = arrow((0,-1), (0,1.5))

sage: P=circle((2.0,1.0),0.03, rgbcolor=hue(0.75), fill=True)

sage: t1=text(r"$(2,1)$",(2.25,0.85))

sage: t2=text(r"$x-3y=1$",(0.6,0.7))

sage: t3=text(r"$7y=7$",(1.5,1.1))

sage: show(l1+l2+ah+av+t1+t2+t3+P,axes_labels=['$x$','$y$'])

Sage solves linear systems

A system of

\( m \) equations in

\( n \) unknowns

\( x_1 , \ x_2 , \ \ldots , \ x_n \) is a set of

\( m \) equations of the form

\[

\begin{cases}

a_{11} \,x_1 + a_{12}\, x_2 + \cdots + a_{1n}\, x_n &= b_1 ,

\\

a_{21} \,x_1 + a_{22}\, x_2 + \cdots + a_{2n}\, x_n &= b_2 ,

\\

\qquad \vdots & \qquad \vdots

\\

a_{m1} \,x_1 + a_{m2}\, x_2 + \cdots + a_{mn}\, x_n &= b_n ,

\end{cases}

\]

The numbers

\( a_{ij} \) are known as the

coefficients of the system. The matrix

\( {\bf A} = [\,a_{ij}\,] , \) whose

\( (i,\, j) \) entry is the

coefficient

\( a_{ij} \) of the system of linear equations is called the coefficient matrix and is denoted by

\[

{\bf A} = \left[ \begin{array}{cccc} a_{1,1} & a_{1,2} & \cdots & a_{1,n} \\

a_{2,1} & a_{2,2} & \cdots & a_{2,n} \\

\vdots & \vdots & \ddots & \vdots \\

a_{m,1} & a_{m,2} & \cdots & a_{m,n} \end{array} \right] \qquad\mbox{or} \qquad {\bf A} = \left( \begin{array}{cccc} a_{1,1} & a_{1,2} & \cdots & a_{1,n} \\

a_{2,1} & a_{2,2} & \cdots & a_{2,n} \\

\vdots & \vdots & \ddots & \vdots \\

a_{m,1} & a_{m,2} & \cdots & a_{m,n} \end{array} \right) .

\]

Let

\( {\bf x} =\left[ x_1 , x_2 , \ldots x_n \right]^T \) be the vector of unknowns. Then the product

\( {\bf A}\,{\bf x} \) of the

\( m \times n \) coefficient matrix

A and the

\( n \times 1 \) column vector

x is an

\( m \times 1 \) matrix

\[

\left[ \begin{array}{cccc} a_{1,1} & a_{1,2} & \cdots & a_{1,n} \\

a_{2,1} & a_{2,2} & \cdots & a_{2,n} \\

\vdots & \vdots & \ddots & \vdots \\

a_{m,1} & a_{m,2} & \cdots & a_{m,n} \end{array} \right] \, \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix}

= \begin{bmatrix} a_{1,1} x_1 + a_{1,2} x_2 + \cdots + a_{1,n} x_n

\\ a_{2,1} x_1 + a_{2,2} x_2 + \cdots + a_{2,n} x_n \\ \vdots \\ a_{m,1} x_1 + a_{m,2} x_2 + \cdots + a_{m,n} x_n \end{bmatrix} ,

\]

whose entries are the right-hand sides of our system of linear equations.

If we define another column vector \( {\bf b} = \left[ b_1 , b_2 , \ldots b_m \right]^T \)

whose components are the right-hand sides \( b_{i} ,\) the system is equivalent to the vector equation

\[

{\bf A} \, {\bf x} = {\bf b} .

\]

We say that \( s_1 , \ s_2 , \ \ldots , \ s_n \) is a solution of the system if all

\( m \) equations hold true when

\[

x_1 = s_1 , \ x_2 = s_2 , \ \ldots , \ x_n = s_n .

\]

Sometimes a system of linear equations is known as a set of simultaneous equations; such terminology emphasises that a solution is an assignment of values to each of the

\( n \) unknowns such that each and every equation holds with this assignment. It is also referred to simply as a linear system.

Example. The linear system

\[

\begin{cases}

x_1 + x_2 + x_3 + x_4 + x_5 &= 3 ,

\\

2\,x_1 + x_2 + x_3 + x_4 + 2\, x_5 &= 4 ,

\\

x_1 - x_2 - x_3 + x_4 + x_5 &= 5 ,

\\

x_1 + x_4 + x_5 &= 4 ,

\end{cases}

\]

is an example of a system of four equations in five unknowns,

\( x_1 , \ x_2 , \ x_3 , \ x_4 , \ x_5 . \)

One solution of this system is

\[

x_1 = -1 , \ x_2 = -2 , \ x_3 =1, \ x_4 = 3 , \ x_5 = 2 ,

\]

as you can easily verify by substituting these values into the equations. Every equation is satisfied for these values of

\( x_1 , \ x_2 , \ x_3 , \ x_4 , \ x_5 . \)

However, there is not the only solution to this system of equations. There are many others.

On the other hand, the system of linear equations

\[

\begin{cases}

x_1 + x_2 + x_3 + x_4 + x_5 &= 3 ,

\\

2\,x_1 + x_2 + x_3 + x_4 + 2\, x_5 &= 4 ,

\\

x_1 - x_2 - x_3 + x_4 + x_5 &= 5 ,

\\

x_1 + x_4 + x_5 &= 6 ,

\end{cases}

\]

has no solution. There are no numbers we can assign to the unknowns

\( x_1 , \ x_2 , \ x_3 , \ x_4 , \ x_5 \) so that all four equations are satisfied. ■

In general, we say that a linear system of algebraic equations is consistent if it has at least one solution and inconsistent if it has no solution. Thus, a consistent linear system has either one solution or infinitely many solutions---there are no other possibilities.

Theorem: Let A be an \( m \times n \) matrix.

-

(Overdetermined case) If m > n, then the linear system \( {\bf A}\,{\bf x} = {\bf b} \)

is inconsistent for at least one vector b in \( \mathbb{R}^n . \)

- (Underdetermined case) If m < n, then for each vector b in \( \mathbb{R}^m \)

the linear system \( {\bf A}\,{\bf x} = {\bf b} \) is either inconsistent or has infinite many solutions. ■

Theorem: If A is an \( m \times n \) matrix with

\( m < n, \) then \( {\bf A}\,{\bf x} = {\bf 0} \) has

infinitely many solutions. ■

Theorem: A system of linear equations \( {\bf A}\,{\bf x} = {\bf b} \) is consistent if and only if b is in the column space of A. ■

Theorem: A linear system of algebraic equations \( {\bf A}\,{\bf x} = {\bf b} \) is consistent if and only if the vector b is orthogonal

to every solution y of the adjoint homogeneous equation \( {\bf A}^T \,{\bf y} = {\bf 0} \) or \( {\bf y}^T {\bf A} = {\bf 0} . \) ■

Sage uses standard commands to solve linear systems of algebraic equations:

\[

{\bf A} \, {\bf x} = {\bf b} , \qquad {\bf A} = \left[ \begin{array}{cccc} a_{1,1} & a_{1,2} & \cdots & a_{1,n} \\

a_{2,1} & a_{2,2} & \cdots & a_{2,n} \\

\vdots & \vdots & \ddots & \vdots \\

a_{m,1} & a_{m,2} & \cdots & a_{m,n} \end{array} \right] , \quad {\bf x} = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix} , \qquad

{\bf b} = \begin{bmatrix} b_1 \\ b_2 \\ \vdots \\ b_m \end{bmatrix} .

\]

Here b is a given vector and column-vector x is to be determined. An augmented matrix is a matrix

obtained by appending the columns of two given matrices, usually for the purpose of performing the same elementary row operations on each of the given matrices.

We associate with the given system of linear equations \( {\bf A}\,{\bf x} = {\bf b} \) an augmented matrix by appending the column-vector b to the matrix A.

Such matrix is denoted by \( {\bf M} = \left( {\bf A}\,\vert \,{\bf b} \right) \) or \( {\bf M} = \left[ {\bf A}\,\vert \,{\bf b} \right] : \)

\[

{\bf M} = \left( {\bf A}\,\vert \,{\bf b} \right) = \left[ {\bf A}\,\vert \,{\bf b} \right] =

\left[ \begin{array}{cccc|c} a_{1,1} & a_{1,2} & \cdots & a_{1,n} & b_1 \\

a_{2,1} & a_{2,2} & \cdots & a_{2,n} & b_2 \\

\vdots & \vdots & \ddots & \vdots & \vdots\\

a_{m,1} & a_{m,2} & \cdots & a_{m,n} & b_m \end{array} \right] .

\]

Theorem: Let A be an \( m \times n \) matrix. A linear system of equations

\( {\bf A}\,{\bf x} = {\bf b} \) is consistent if and only if the rank of the coefficient matrice A is the same as the rank of the augmented matrix \( \left[ {\bf A}\,\vert \, {\bf b} \right] . \) ■

The actual use of the term augmented matrix appears to have been introduced by the American mathematician Maxime Bocher (1867--1918) in 1907.

We show how it works by examples. The system can be solved using solve command

sage: solve([eq1==0,eq2==0],x1,x2)

However, this is somewhat complex and we use vector approach:

sage: A = Matrix([[1,2,3],[3,2,1],[1,1,1]])

sage: b = vector([0, -4, -1])

sage: x = A.solve_right(b)

sage: x

(-2, 1, 0)

sage: A * x # checking our answer...

(0, -4, -1)

A backslash \ can be used in the place of solve_right; use

A \ b instead of A.solve_right(b).

If there is no solution, Sage returns an error:

sage: A.solve_right(w)

Traceback (most recent call last):

...

ValueError: matrix equation has no solutions

Similarly, use A.solve_left(b) to solve for x in

\( {\bf x}\, {\bf A} = {\bf b}\).

If a square matrix is nonsingular (invertible), then solutions of the vector equation \( {\bf A}\,{\bf x} = {\bf b} \)

can be obtained by applying the inverse matrix:

\( {\bf x} = {\bf A}^{-1} {\bf b} . \) We will discuss this method later in the section devoted to inverse matrices.

Elementary Row Operations

Our objective is to find an efficient method of finding the solutions of systems of linear equations. There are three operations that we can perform on the equations of a linear system without altering the set of solutions:

- multiply both sides of an equation by a non-zero constant;

- interchange two equations;

- add a multiple of one equation to another.

These operations, usually called elementary row operations, do not alter the set of solutions of the linear system

\( {\bf A}\,{\bf x} = {\bf b} \) since the restrictions on the variables

\( x_1 , \, x_2 , \, \ldots , \,x_n \) given by the new equations imply the restrictions given by the old ones. At the same time,

these operations really only involve the coefficients of the variables and the right-hand sides of the equations,

b.

Since the coefficient matrix A together with the given constant vector b contains all the information we need to use, we unite them into one matrix, called the augmented matrix:

\[

{\bf M} = \left( {\bf A}\,\vert \,{\bf b} \right) \qquad \mbox{or} \qquad {\bf M} = \left[ {\bf A}\,\vert \,{\bf b} \right] .

\]

Now rather than manipulating the equations, we can instead manipulate the rows of this augmented matrix. These observations form the motivation behind a method to solve systems of linear equations, known as Gaussian elimination, called after its inventor Carl Friedrich Gauss (1777--1855) from Germany.

Various modifications have been made to the method of elimination, and we present one of these, known as the Gauss--Jordan method.

It was the German geodesist Wilhelm Jordan

(1842-1899) and not a French mathematician Camille Jordan (1838-1922) who introduced the Gauss-Jordan method of solving systems of linear equations. Camille Jordan is credited for Jordan normal form, a

well known linear algebra topic.

Elimination produces an upper triangular system, called row echelon form for Gauss elimination and reduced row echelon form for Gauss--Jordan algorithm. The Gauss elimination introduces zeroes below the pivots, while Gauss--Jordan algorithm contains additional phase in which it introduces zeroes above the pivots.

Since this phase involves roughly 50% more operations than Gaussian elimination, most computer algorithms are based on the latter method.

Suppose M is the augmented matrix of a linear system. We can solve the linear

system by performing elementary row operations on M. In Sage these row operations

are implemented with the functions.

sage: M.swap_rows() # interchange two rows

sage: rescale_row() # scale a row by using a scale factor

sage: add_multiple_of_row() # add a multiple of one row to another row, replacing the row

Remember, row numbering starts at 0. Pay careful attention to the changes made to

the matrix by each of the following commands.

We want to start with a 1 in the first position of the first column, so we begin

by scaling the first row by 1/2.

sage: M.rescale_row(0,1/2); M

The first argument to rescale_row() is the index of the row to be scaled and the

second argument is the scale factor. We could, of course, use 0.5 rather than

1/2 for the scale factor.

Now that there is a 1 in the first position of the first column we continue by

eliminating the entry below it.

sage: M.add_multiple_of_row(1,0,1); M

The first argument is the index of the row to be replaced, the second argument is

the row to form a multiple of, and the final argument is the scale factor. Thus,

M.add_multiple_of_row(n,m,a) would replace row n with (row n)+a*(row m).

Since the last entry in the first column is already zero we can move on to the

second column. Our first step is to get a 1 in the second position of the second

column. Normally we would do this by scaling the row but in this case we can swap

the second and third row

sage: M.swap_rows(1,2); M

The arguments to swap_rows() are fairly obvious, just remember that row 1 is the

second row and row 2 is the third row.

Now we want to eliminate the 5 below the 1. This is done by multiplying the second

row by −5 and adding it to the third row.

sage: M.add_multiple_of_row(2,1,-5); M

To get a 1 as the last entry in the third column we can scale the third row by −1/2

sage: M.rescale_row(2,-1/2); M

At this point, the matrix is in echelon form (well, having the 1's down the diagonal

of the matrix is not required, but it does make our work easier). All that remains

to find the solution is to put the matrix in reduced echelon form which requires

that we replace all entries in the first three columns that are not on the main

diagonal (where the 1's are) with zeros. We will start with the third column and

work our way up and to the left. Remember that this is an augmented matrix and we

are going to ignore the right-most column; it just "goes along for the ride."

sage: M.add_multiple_of_row(1,2,-1); M

sage: M.add_multiple_of_row(0,1,-2); M

Therefore, our solution is complete. We see that the solution to our linear

system is

\( x_1 =2, \ x_2 =1, \ \mbox{and} \ x_3 =-1 . \)

There is an easy way to check your work, or to carry out these steps in the future.

First, let's reload the matrix M.

sage: M=matrix(QQ,[[2,4,0,8],[-1,3,3,-2],[0,1,1,0]]); M

The function echelon_form() will display the echelon form of a matrix without

changing the matrix.

Notice that the matrix

M is unchanged.

To replace

M with its reduced echelon form, use the echelonize() function.

Sage has the matrix method .pivot()

to quickly and easily identify the pivot columns

of the reduced row-echelon form of a matrix. Notice that we do not have to row-

reduce the matrix first, we just ask which columns of a matrix

A would be

the pivot columns of the matrix

B that is row-equivalent to

A and in reduced row-echelon form.

By definition, the indices of the pivot columns for an augmented matrix of a

system of equations are the indices of the dependent variables. And the remainder

are free variables. But be careful, Sage numbers columns starting from zero and

mathematicians typically number variables starting from one.

sage: coeff = matrix(QQ, [[ 1, 4, 0, -1, 0, 7, -9],

... [ 2, 8,-1, 3, 9, -13, 7],

... [ 0, 0, 2, -3, -4, 12, -8],

... [-1, -4, 2, 4, 8, -31, 37]])

sage: const = vector(QQ, [3, 9, 1, 4])

sage: aug = coeff.augment(const),

sage: dependent = aug.pivots()

sage: dependent

(0, 2, 3)

So, incrementing each column index by 1 gives us the same set D

of indices for the

dependent variables. To get the free variables, we can use the following code. Study

it and then read the explanation following.

sage: free = [index for index in range(7) if not index in dependent]

sage: free

[1, 4, 5, 6]

This is a Python programming construction known as a “list comprehension” but

in this setting I prefer to call it “set builder notation.” Let’s dissect the command

in pieces. The brackets ([,]) create a new list. The items in the list will be values

of the variable index .range(7) is another list, integers starting at 0

and stopping justbefore 7. (While perhaps a bit odd, this works very well when we consistently

start counting at zero.) So range(7) is the list

[0,1,2,3,4,5,6]. Think of these as

candidate values for index, which are generated by for index in range(7). Then

we test each candidate, and keep it in the new list if it is

not in the list dependent. This is entirely analogous to the following mathematics:

\[

F= \{ f \, \vert \, 1 \le f \le 7, \ f \not\in D \} ,

\]

where

F is free,

f is index, and

D is dependent, and we make the 0/1 counting

adjustments. This ability to construct sets in Sage with notation so closely mirroring

the mathematics is a powerful feature worth mastering. We will use it repeatedly. It

was a good exercise to use a list comprehension to form the list of columns that are

not pivot columns. However, Sage has us covered.

sage: free_and_easy = coeff.nonpivots()

sage: free_and_easy

[1, 4, 5, 6]

Example.

We illustrate how to find an inverse matrix using row operations. Consider the matrix

\[

{\bf A} = \left[ \begin{array}{ccc} 1& 2 & 3 \\ 2&5&3 \\ 1&0 & 8 \end{array} \right] .

\]

To accomplish this goal, we add a block of the identity matrix and apply row operation to this augmented matrix

\( \left[ {\bf A} \,| \,{\bf I} \right] \) until the left side is reduced to

I.

These operatioons will convert the right side to

\( {\bf A}^{-1} ,\) so the final matrix will have the form

\( \left[ {\bf I} \,| \,{\bf A}^{-1} \right] .\)

\begin{align*}

\left[ {\bf A} \,| \,{\bf I} \right] &= \left[ \begin{array}{ccc|ccc} 1& 2 & 3 & 1&0&0 \\ 2&5&3 & 0&1&0 \\ 1&0 & 8 & 0&0&1 \end{array} \right]

\\

&= \left[ \begin{array}{ccc|ccc} 1& 2 & 3 & 1&0&0 \\ 0&1&-3 & -2&1&0 \\ 0&-2 &5 & -1&0&1 \end{array} \right] \qquad

\begin{array}{l} \mbox{add $-2$ times the first row to the second} \\ \mbox{and $-1$ times the first row to the third} \end{array}

\\

&= \left[ \begin{array}{ccc|ccc} 1& 2 & 3 & 1&0&0 \\ 0&1&-3 & -2&1&0 \\ 0&0 &-1 & -5&2&1 \end{array} \right] \qquad

\begin{array}{l} \mbox{add 2 times the second row to the third} \end{array}

\\

&= \left[ \begin{array}{ccc|ccc} 1& 2 & 3 & 1&0&0 \\ 0&1&-3 & -2&1&0 \\ 0&0 &1 & 5&-2&-1 \end{array} \right] \qquad

\begin{array}{l} \mbox{multiply the third row by $-1$} \end{array}

\\

&= \left[ \begin{array}{ccc|ccc} 1& 2 & 0 & -14&6&3 \\ 0&1&0 & 13&-5&-3 \\ 0&0&1 & 5&-2&-1 \end{array} \right] \qquad

\begin{array}{l} \mbox{add 3 times the third row to the second} \\ \mbox{and $-3$ times the third row to the first} \end{array}

\\

&= \left[ \begin{array}{ccc|ccc} 1& 0 & 0 & -40&16&9 \\ 0&1&0 & 13&-5&-3 \\ 0&0 &1 & 5&-2&-1 \end{array} \right] \qquad

\begin{array}{l} \mbox{add $-2$ times the second row to the first} \end{array} .

\end{align*}

Thus,

\[

{\bf A}^{-1} = \left[ \begin{array}{ccc} -40& 16 & 9 \\ 13&-5&-3 \\ 5&-2 &-1 \end{array} \right] .

\]

Row Echelon Forms

In previous section, we demonstrate how Gauss elimination procedure is applied to a matrix, row by row, in order to obtain row echelon form.

Specifically, a matrix is in row echelon form if

- all nonzero rows (rows with at least one nonzero element) are above any rows of all zeroes (all zero rows, if any, belong at the bottom of the matrix), and

-

the leading coefficient (the first nonzero number from the left, also called the pivot) of a nonzero row is always strictly to the right of the leading coefficient

of the row above it (some texts add the condition that the leading coefficient must be 1 but it is not necessarily).

These two conditions imply that all entries in a column below a leading coefficient are zeroes.

Unlike the row echelon form, the reduced row echelon form of a matrix is unique and

does not depend on the algorithm used to compute it. It is obtained by applying the Gauss-Jordan elimination procedure.

A matrix is in reduced row echelon form (also called row canonical form) if it satisfies the following conditions:

- It is in row echelon form.

-

Every leading coefficient is 1 and is the only nonzero entry in its column.

Gauss elimination algorithm can be generalized for block matrices. Suppose that a \( (m+n) \times (m+n) \) matrix M is

partitioned into 2-by-2 blocks \( {\bf M} = \begin{bmatrix} {\bf A} & {\bf B} \\ {\bf C} & {\bf D} \end{bmatrix} .\)

When we apply Gauss elimination algorithm, A is just an entry (not zero) in upper left corner (which is 1 by 1 matrix). and

C is the column-vector (\( (m+n-1) \times 1 \) matrix). To eliminate C, we just multiply it by

\( {\bf A}^{-1} \) and subtract the corresponding block.

Now we illustrate Gauss elimination algorithm when \( (m+n) \times (m+n) \) matrix M is

partitioned into blocks \( {\bf M} = \begin{bmatrix} {\bf A} & {\bf B} \\ {\bf C} & {\bf D} \end{bmatrix} ,\)

where A is \( n \times n \) nonsingular matrix, B is \( n \times m \) matrix,

C is \( m \times n \) matrix, and D is \( m \times m \) square matrix. Multiplying M

by a "triangular" block matrix, we obtain

\[

\left[ \begin{array}{c|c} {\bf I}_{n \times n} & {\bf 0}_{n\times m} \\ \hline -{\bf C}_{m\times n} {\bf A}^{-1}_{n\times n} & {\bf I}_{m\times m} \end{array} \right]

\left[ \begin{array}{c|c} {\bf A}_{n\times n} & {\bf B}_{n\times m} \\ \hline {\bf C}_{m\times n} & {\bf D}_{m\times m} \end{array} \right] =

\left[ \begin{array}{c|c} {\bf A}_{n\times n} & {\bf B}_{n\times m} \\ \hline {\bf 0}_{m\times n} & {\bf D}_{m\times m} - {\bf C}\,{\bf A}^{-1} {\bf B} \end{array} \right] .

\]

The

\( m \times m \) matrix in right bottom corner,

\( {\bf D}_{m\times m} - {\bf C}\,{\bf A}^{-1} {\bf B} ,\) is called

Schur complement.

Recall the difference between row echelon form (also called Gauss form) and reduced row echelon form (Gauss--Jordan). The following matrices are in row echelon form but not reduced row echelon form:

\[

\left[ \begin{array}{cccc} 1 & * & * & * \\ 0 & 1 & * & * \\ 0&0&1&* \\ 0&0&0&1

\end{array} \right] , \qquad \left[ \begin{array}{cccc} 1 & * & * & * \\ 0 & 1 & * & * \\ 0&0&1&* \\ 0&0&0&0

\end{array} \right] , \qquad \left[ \begin{array}{cccc} 1 & * & * & * \\ 0 & 1 & * & * \\ 0&0&0&0 \\ 0&0&0&0

\end{array} \right] , \qquad \left[ \begin{array}{cccc} 1 & * & * & * \\ 0 & 0 & 1 & * \\ 0&0&0&1 \\ 0&0&0&0

\end{array} \right] .

\]

All matrices of the following types are in reduced row echelon form:

\[

\left[ \begin{array}{cccc} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0&0&1&0 \\ 0&0&0&1

\end{array} \right] , \qquad \left[ \begin{array}{cccc} 1 & 0 & 0 & * \\ 0 & 1 & 0 & * \\ 0&0&1&* \\ 0&0&0&0

\end{array} \right] , \qquad \left[ \begin{array}{cccc} 1 & 0 & * & * \\ 0 & 1 & * & * \\ 0&0&0&0 \\ 0&0&0&0

\end{array} \right] , \qquad \left[ \begin{array}{cccc} 0 & 1 & * & 0 \\ 0 & 0 & 0 & 1 \\ 0&0&0&0 \\ 0&0&0&0

\end{array} \right] .

\]

This is an example of using row reduction procedure:

sage: A=matrix(QQ,3,3,[2,-4,1,4,-8,7,-2,4,-3])

sage: b=matrix(QQ,3,1,[4,2,5])

sage: B=A.augment(b) # construct augmented matrix

sage: B.add_multiple_of_row(0,0,-1/2)

[ 1 -2 1/2 2]

[ 4 -8 7 2]

[-2 4 -3 5]

sage: B.add_multiple_of_row(1,0,-4)

[ 1 -2 1/2 2]

[ 0 0 5 -6]

[-2 4 -3 5]

sage: B.add_multiple_of_row(2,0,2)

[ 1 -2 1/2 2]

[ 0 0 5 -6]

[ 0 0 -2 9]

sage: B.add_multiple_of_row(1,1,-4/5)

[ 1 -2 1/2 2 ]

[ 0 0 1 -6/5]

[ 0 0 -2 9 ]

sage: B.add_multiple_of_row(2,1,2)

[ 1 -2 1/2 2]

[ 0 0 1 -6/5]

[ 0 0 0 33/5]

Therefore, we obtained Gauss form for the augmented matrix. Now we need to repeat similar procedure to obtain Gauss-Jordan form. This can be achieved with a standard Sage command:

sage: B.echelon_form()

[ 1 -2 0 0]

[ 0 0 1 0]

[ 0 0 0 1]

We demonstrate Gauss-Jordan procedure in the following example.

Example. Solve the linear vector equation \( {\bf A}\,{\bf x} = {\bf b} , \) where

\[

{\bf A} = \left[ \begin{array}{ccc} -4 &1&1 \\ -1 &4&2 \\ 2&2&-3 \end{array} \right] , \qquad {\bf b} = \left[ \begin{array}{c}

6 \\ -1 \\ 20 \end{array} \right] .

\]

sage: A=matrix(QQ,3,3,[[-4,1,1],[-1,4,2],[2,2,-3]]);A

[ -4 1 1]

[ -1 4 2]

[ 2 2 -3]

sage: A.swap_rows(0,1);A

[ -1 4 2]

[ -4 1 1]

[ 2 2 -3]

sage: A.swap_columns(0,1);A

[ 1 -4 2]

[ 4 -1 1]

[ 2 2 -3]

sage: b=vector([6,-1,-20])

sage: B=A.augment(b);B

[ -4 1 1 6]

[ -1 4 2 -1]

[ 2 2 -3 -20]

sage: B.rref()

[ 1 0 0 -61/55]

[ 0 1 0 -144/55]

[ 0 0 1 46/11]

So the last column gives the required vector x.

Another way of expressing to say a system is consistent if and only

if column

n + 1 is not a pivot column of

B

. Sage has the matrix method

.pivot()

to

easily identify the pivot columns of a matrix. Let’s consider as an example.

sage: coeff = matrix(QQ, [[ 2, 1, 7, -7],

... [-3, 4, -5, -6],

... [ 1, 1, 4, -5]])

sage: const = vector(QQ, [2, 3, 2])

sage: aug = coeff.augment(const)

sage: aug.rref()

[1 0 3-2 0]

[0 1 1-3 0]

[0 0 0 0 1]

sage: aug.pivots()

(0, 1, 4)

We can look at the reduced row-echelon form of the augmented matrix and see

a leading one in the final column, so we know the system is inconsistent. However,

we could just as easily not form the reduced row-echelon form and just look at the

list of pivot columns computed by

aug.pivots(). Since aug has 5 columns, the final

column is numbered 4, which is present in the list of pivot columns, as expected.

Example. We demonstrate the Gaussian method with Gauss--Jordan elimination by solving the same system of algebraic equations with two distinct given vectors:

\[

\begin{split}

x_1 + 2\,x_2 + 3\,x_3 &= 4,

\\

2\,x_1 + 5\,x_2 + 3\,x_3 &= 5, \\

x_1 + 8\,x_3 &= 9 ;

\end{split} \qquad\mbox{and} \qquad \begin{split}

x_1 + 2\,x_2 + 3\,x_3 &= 1,

\\

2\,x_1 + 5\,x_2 + 3\,x_3 &= 6, \\

x_1 + 8\,x_3 &= -6 .

\end{split}

\]

The row echelon form of the former system of equations is

\[

\left[ \begin{array}{ccc|c} 1&2&3&4 \\ 0&1&-3&-3 \\ 0&0&-1&-1

\end{array} \right] .

\]

Therefore its solution becomes

\( x_1 = 1, \ x_2 =0, \ x_3 =1 . \) The augmented matrix for the

latter system can be reduced to

\[

\left[ \begin{array}{ccc|c} 1&0&0&2 \\ 0&1&0&1 \\ 0&0&1&-1

\end{array} \right] ,

\]

which leads to the solution

\( x_1 = 2, \ x_2 =1, \ x_3 =-1 . \)

Example. What conditions must \( b_1 , \) \( b_2 , \) and

\( b_3 \) satisfy in order for the system

\[

\begin{split}

x_1 + x_2 + 2\,x_3 &= b_1 ,

\\

x_1 + x_3 &= b_2 , \\

2\,x_1 + x_2 + 3\,x_3 &= b_3

\end{split}

\]

to be consistent?

The augmented matrix corresponding to the symmetric coefficient matrix is

\[

\left[ \begin{array}{ccc|c}

1&1&2& b_1 \\

1&0&1& b_2 \\

2&1&3& b_3 \end{array} \right] ,

\]

which can be reduced to row echelon form as follows

\begin{align*}

\left[ {\bf A} \,| \,{\bf b} \right] &= \left[ \begin{array}{ccc|c} 1&1&2& b_1 \\

0&-1&-1& b_2 -b_1 \\

0&-1&-1& b_3 - 2\,b_1 \end{array} \right] \qquad \begin{array}{l} -1 \mbox{ times the first row was added to the} \\

\mbox{second and $-1$ times the first row was added to the third} \end{array}

\\

&= \left[ \begin{array}{ccc|c} 1&1&2& b_1 \\

0&1&1& b_1 - b_2 \\ 0&-1&-1& b_3 -2\, b_1 \end{array} \right] \qquad \begin{array}{l}

\mbox{the second row was } \\ \mbox{multiplied by $-1$} \end{array}

\\

&= \left[ \begin{array}{ccc|c} 1&1&2& b_1 \\

0&1&1& b_1 - b_2 \\

0&0&0& b_3 - b_2 - b_1 \end{array} \right] \qquad \begin{array}{l} \mbox{the second row was added} \\

\mbox{to the third} \end{array} .

\end{align*}

It is now evident from the third row in the matrix that the system has a solution if and only if

\( b_1 , \) \( b_2 , \) and

\( b_3 \) satisfy the condition

\[

b_3 - b_2 -b_1 =0 \qquad\mbox{or} \qquad b_3 = b_1 + b_2 .

\]

To express this condition another way,

\( {\bf A}\, {\bf x} = {\bf b} \) is consistent if and only if

b is the vector of the form

\[

{\bf b} = \begin{bmatrix} b_1 \\ b_2 \\ b_1 + b_2 \end{bmatrix} = b_1 \begin{bmatrix} 1 \\ 0 \\ 1 \end{bmatrix} + b_2

\begin{bmatrix} 0 \\ 1 \\ 1 \end{bmatrix} ,

\]

where

\( b_1 \) and

\( b_2 \) are arbitrary. The vectors

\( \langle 1, 0 , 1 \rangle^T ,\) \( \langle 0 , 1, 1 \rangle^T \)

constudute the basis in the column space of the coefficient matrix. ■

LU-factorization

Recall that in Gaussian

Elimination, row operations are used to change the coefficient matrix to an upper

triangular matrix. The solution is then found by back substitution, starting from the

last equation in the reduced system. In Gauss-Jordan Reduction, row operations are

used to diagonalize the coefficient matrix, and the answers are read directly.

The goal of this section is to identify Gaussian elimination with LU factorization. The original matrix A

becomes the product of two or more special matrices that are actually triangular matrices. Namely, the factorization comes from elimination:

\( {\bf A} = {\bf L}\,{\bf U} , \) where L is lower triangular matrix and U is upper triangular matrix.

Such representation is called and LU-decomposition of LU-factorization. Computers

usually solve square systems of linear equations using the LU decomposition, and it is also a key step when inverting

a matrix, or computing the determinant of a matrix. The LU decomposition was introduced by a

Polish astronomer, mathematician, and geodesist Tadeusz Banachiewicz (1882--1954) in 1938.

For a single linear system \( {\bf A}\,{\bf x} = {\bf b} \) of n equations in n

unknowns, the methods LU-decomposition and Gauss-Jordan elimination differ in bookkeeping but otherwise involve the same number of flops. However,

LU-factorization has the following advantages:

- Gaussian elimination and Gauss--Jordan elimination both use the augmented matrix \( \left[ {\bf A} \, | \, {\bf b} \right] ,\)

so b must be known. In contrast, LU-decomposition uses only matrix A, so once that factorization is complete, it can be applied to any vector b.

- For large linear systems in which computer memory is at a premium, one can dispense with the storage of the 1's

and zeroes that appear on or below the main diagonal of U, since those entries are known. The space

that this opens up can then be used to store the entries of L.

Not every square matrix has an LU-factorization. However, if it is possible to reduce a square matrix A to row

echelon form by Gaussian elimination without performing any row interchanges, then A will have an LU-decomposition, though

it may not be unique.

LU-decomposition:

Step 1: rewrite the system of algebraic equations \( {\bf A} {\bf x} = {\bf b} \) as

\[

{\bf L}{\bf U} {\bf x} = {\bf b} .

\]

Step 2: Define a new

\( n\times 1 \) matrix

y (which is actually a vector)

by

\[

{\bf U} {\bf x} = {\bf y} .

\]

Step 3:

Rewrite the given equation as

\( {\bf L} {\bf y} = {\bf b} \) and solve this sytem for

y.

Step 4: Substitute y into the equation

\( {\bf U} {\bf x} = {\bf y} \) and solve for

x.

Procedure for constructing LU-decomposition:

Step 1: Reduce \( n \times n \) matrix A

to a row echelon form U by Gaussian elimination without row interchanges, keeping track of the multipliers

used to introduce the leading coefficients (usually 1's) and multipliers used to introduce the zeroes below

the leading coefficients.

Step 2: In each position along the main diagonal L, place the reciprocal of the multiplier

that introduced the leading 1 in that position of U.

Step 3:

In each position below the main diagonal of L, place the negative of the multiplier used to introduce the zero

in that position of U.

Step 4: For the decomposition \( {\bf A} = {\bf L} {\bf U} . \)

Recall the elementary operations on the rows of a matrix that are

equivalent to premultiplying by an elementary matrix E:

(1) multiplying row i by a nonzero scalar α , denoted by \( {\bf E}_i (\alpha ) ;\)

(2) adding β times row j to row i, denoted by \( {\bf E}_{ij} (\beta ) \) (here β is any

scalar), and

(3) interchanging rows i and j, denoted by \( {\bf E}_{ij} \) (here \( i \ne j \) ),

called elementary row operations of types 1,2 and 3, respectively.

Correspondingly, a square matrix E is called an elementary matrix if it can be obtained

from an identity matrix by performing a single elementary operation.

Theorem: Every elementary matrix is invertible, and the inverse is also an elementary matrix. ■

Example.

Illustrations for m = 4:

\[

{\bf E}_3 (\alpha ) = \begin{bmatrix} 1&0&0&0 \\ 0&1&0&0 \\ 0&0&\alpha &0 \\ 0&0&0&1 \end{bmatrix} , \qquad

{\bf E}_{42} (\beta ) = \begin{bmatrix} 1&0&0&0 \\ 0&1&0&0 \\ 0&0&1 &0 \\ 0&\beta&0&1 \end{bmatrix} , \qquad

{\bf E}_{13} = \begin{bmatrix} 0 &0&1&0 \\ 0&1&0&0 \\ 1&0&0 &0 \\ 0&0&0&1 \end{bmatrix} .

\]

Correspondingly, we have their inverses:

\[

{\bf E}_3^{-1} (\alpha ) = \begin{bmatrix} 1&0&0&0 \\ 0&1&0&0 \\ 0&0&\alpha^{-1} &0 \\ 0&0&0&1 \end{bmatrix} , \qquad

{\bf E}_{42}^{-1} (\beta ) = \begin{bmatrix} 1&0&0&0 \\ 0&1&0&0 \\ 0&0&1 &0 \\ 0&-\beta&0&1 \end{bmatrix} , \qquad

{\bf E}_{13}^{-1} = \begin{bmatrix} 0 &0&1&0 \\ 0&1&0&0 \\ 1&0&0 &0 \\ 0&0&0&1 \end{bmatrix} .

\]

Multiplying by an arbitrary matrix

\[

{\bf A} = \begin{bmatrix} a_{11} & a_{12} & a_{13} & a_{14} \\ a_{21} & a_{22} & a_{23} & a_{24} \\

a_{31} & a_{32} & a_{33} & a_{34} \\ a_{41} & a_{42} & a_{43} & a_{44} \end{bmatrix} ,

\]

we obtain

\begin{align*}

{\bf E}_3 (\alpha )\,{\bf A} &= \begin{bmatrix} a_{11} & a_{12} & a_{13} & a_{14} \\ a_{21} & a_{22} & a_{23} & a_{24} \\

\alpha \,a_{31} & \alpha \,a_{32} & \alpha \,a_{33} & \alpha \,a_{34} \\ a_{41} & a_{42} & a_{43} & a_{44} \end{bmatrix} , \quad

{\bf A} \, {\bf E}_3 (\alpha ) = \begin{bmatrix} a_{11} & a_{12} & \alpha \,a_{13} & a_{14} \\ a_{21} & a_{22} & \alpha \,a_{23} & a_{24} \\

a_{31} & a_{32} & \alpha \,a_{33} & a_{34} \\ a_{41} & a_{42} & \alpha \,a_{43} & a_{44} \end{bmatrix} ,

\\

{\bf E}_{42} (\beta ) \, {\bf A} &= \begin{bmatrix} a_{11} & a_{12} & a_{13} & a_{14} \\ a_{21} & a_{22} & a_{23} & a_{24} \\

\alpha \,a_{31} & \alpha \,a_{32} & \alpha \,a_{33} & \alpha \,a_{34} \\ a_{41} + \beta \,a_{21} & a_{42} + \beta \,a_{22} & a_{43} + \beta \,a_{23}& a_{44} + \beta \,a_{24} \end{bmatrix} , \quad

\\

{\bf A} \, {\bf E}_{42} (\beta ) &= \begin{bmatrix} a_{11} & a_{12} + \beta\, a_{14} & a_{13} & a_{14} \\ a_{21} & a_{22} + \beta \,a_{24}& a_{23} & a_{24} \\

a_{31} & a_{32} + \beta\, a_{34}& a_{33} & a_{34} \\ a_{41} & a_{42} + \beta \,a_{44}& a_{43} & a_{44} \end{bmatrix} ,

\\

{\bf E}_{13} \,{\bf A} &= \begin{bmatrix} a_{31} & a_{32} & a_{33} & a_{34} \\ a_{21} & a_{22} & a_{23} & a_{24} \\

a_{11} & a_{12} & a_{13} & a_{14} \\ a_{41} & a_{42} & a_{43} & a_{44} \end{bmatrix} = {\bf A}\, {\bf E}_{13} .

\end{align*}

Example.

We find LU-factorization of

\[

{\bf A} = \begin{bmatrix} 2&6&2 \\ -3&-8&0 \\ 4&9&2 \end{bmatrix} .

\]

To achieve this, we reduce A to a row echelon form U using Gaussian elimination and then calculate L by inverting the product of elementary matrices.

| Reduction |

Row operation |

Elementary matrix |

Inverse matrix |

| \( \begin{bmatrix} 2&6&2 \\ -3&-8&0 \\ 4&9&2 \end{bmatrix} \) |

|

|

|

| Step 1: |

\( \frac{1}{2} \times \,\mbox{row 1} \) |

\( {\bf E}_1 = \begin{bmatrix} 1/2&0&0 \\ 0&1&0 \\ 0&0&1 \end{bmatrix} \) |

\( {\bf E}_1^{-1} = \begin{bmatrix} 2&0&0 \\ 0&1&0 \\ 0&0&1 \end{bmatrix} \) |

| \( \begin{bmatrix} 1&3&1 \\ -3&-8&0 \\ 4&9&2 \end{bmatrix} \) |

|

|

|

| Step 2 |

\( 3 \times \,\mbox{row 1} + \mbox{row 2}\) |

\( {\bf E}_2 = \begin{bmatrix} 1&0&0 \\ 3&1&0 \\ 0&0&1 \end{bmatrix} \) |

\( {\bf E}_2^{-1} = \begin{bmatrix} 1&0&0 \\ -3&1&0 \\ 0&0&1 \end{bmatrix} \) |

| \( \begin{bmatrix} 1&3&1 \\ 0&1&3 \\ 4&9&2 \end{bmatrix} \) |

|

|

|

| Step 3 |

\( -4 \times \,\mbox{row 1} + \mbox{row 3}\) |

\( {\bf E}_3 = \begin{bmatrix} 1&0&0 \\ 0&1&0 \\ -4&0&1 \end{bmatrix} \) |

\( {\bf E}_3^{-1} = \begin{bmatrix} 1&0&0 \\ 0&1&0 \\ 4&0&1 \end{bmatrix} \) |

| \( \begin{bmatrix} 1&3&1 \\ 0&1&3 \\ 0&-3&-2 \end{bmatrix} \) |

|

|

|

| Step 4 |

\( 3 \times \,\mbox{row 2} + \mbox{row 3}\) |

\( {\bf E}_4 = \begin{bmatrix} 1&0&0 \\ 0&1&0 \\ 0&3&1 \end{bmatrix} \) |

\( {\bf E}_4^{-1} = \begin{bmatrix} 1&0&0 \\ 0&1&0 \\ 0&-3&1 \end{bmatrix} \) |

| \( \begin{bmatrix} 1&3&1 \\ 0&1&3 \\ 0&0&7 \end{bmatrix} \) |

|

|

|

| Step 5 |

\( \frac{1}{7} \times \,\mbox{row 3} \) |

\( {\bf E}_5 = \begin{bmatrix} 1&0&0 \\ 0&1&0 \\ 0&0&1/7 \end{bmatrix} \) |

\( {\bf E}_5^{-1} = \begin{bmatrix} 1&0&0 \\ 0&1&0 \\ 0&0&7 \end{bmatrix} \) |

| \( \begin{bmatrix} 1&3&1 \\ 0&1&3 \\ 0&0&1 \end{bmatrix} = {\bf U}\) |

|

|

|

Now we find the lower triangular matrix:

\begin{align*}

{\bf L} &= {\bf E}_1^{-1} {\bf E}_2^{-1} {\bf E}_3^{-1} {\bf E}_4^{-1} {\bf E}_5^{-1}

\\

&= \begin{bmatrix} 2&0&0 \\ 0&1&0 \\ 0&0&1 \end{bmatrix} \begin{bmatrix} 1&0&0 \\ -3&1&0 \\ 0&0&1 \end{bmatrix}

\begin{bmatrix} 1&0&0 \\ 0&1&0 \\ 4&0&1 \end{bmatrix} \begin{bmatrix} 1&0&0 \\ 0&1&0 \\ 0&-3&1 \end{bmatrix} \begin{bmatrix} 1&0&0 \\ 0&1&0 \\ 0&0&7 \end{bmatrix}

\\

&= \begin{bmatrix} 2&0&0 \\ -3&1&0 \\ 4&-3&7 \end{bmatrix} ,

\end{align*}

so

\[

{\bf A} = \begin{bmatrix} 2&6&2 \\ -3&-8&0 \\ 4&9&2 \end{bmatrix} = \begin{bmatrix} 2&0&0 \\ -3&1&0 \\ 4&-3&7 \end{bmatrix}\, \begin{bmatrix} 1&3&1 \\ 0&1&3 \\ 0&0&1 \end{bmatrix} = {\bf L}\,{\bf U} .

\]

Example. Consider the \( 3\times 4 \) matrix

\[

{\bf A} = \begin{bmatrix} 1&0&2&3 \\ 2&-1&3&6 \\ 1&4&4&0 \end{bmatrix}

\]

and consider the elementary matrix

\[

{\bf E}_{31} (-1) = \begin{bmatrix} 1&0&0 \\ 0&1&0 \\ -1&0&1 \end{bmatrix} ,

\]

which results from adding

\( -1 \) times the first row of

\( {\bf I}_3 \) to the thrid row. The product

\( {\bf E}_{31} (-1)\, {\bf A} \) is

\[

{\bf E}_{31} (-1)\,\, {\bf A} = \begin{bmatrix} 1&0&2&3 \\ 2&-1&3&6 \\ 0&4&2&-3 \end{bmatrix} ,

\]

which is precisely the matrix that results when we add

\( -1 \) times the first row of

A to the third row. ■

PLU Factorization

So far, we tried to represent a square nonsingular matrix

A as a product of a lower-triangular matrix

L and an upper triangular matrix

U:

\( {\bf A} = {\bf L}\,{\bf U} .\)

When this is possible we say that

A has an LU-decomposition (or factorization). It turns out that

this factorization (when it exists) is not unique. If

L has 1's on it's diagonal, then it is called

a Doolittle factorization. If

U has 1's on its diagonal, then it is called a Crout factorization.

When

L is an unitary matrix, it is called a Cholesky decomposition. Moreover, Gaussian elimination in its pure form may be unstable.

While LU-decomposition is a useful computational tool, but this does not work for

every matrix. Consider even the simple example with matrix

\[

{\bf A} = \begin{bmatrix} 0&2 \\ 3 & 0 \end{bmatrix} .

\]

Another cause of instability in Gaussian elimination is when encountering relatively small pivots.

Gaussian elimination is guaranteed to succeed if row or column interchanges are used in order to

avoid zero pivots when using exact calculations. But, when computing the LU factorization

numerically, this is not necessarily true. When a calculation is undefined because of division by zero, the

same calculation will suffer numerical difficulties when there is division by a nonzero number that

is relatively small.

\[

{\bf A} = \begin{bmatrix} 10^{-22}&2 \\ 1 & 3 \end{bmatrix} .

\]

When computing the factors

L and

U, the process does not fail in this case because there is no

division by zero.

\[

{\bf L} = \begin{bmatrix} 1&0 \\ 10^{22} & 1 \end{bmatrix} , \qquad {\bf U} = \begin{bmatrix} 10^{-22}&2 \\ 0 & 3 - 2\cdot 10^{22} \end{bmatrix} .

\]

When these computations are performed in floating point arithmetic, the number

\( 3 - 2\cdot 10^{22} \)

is not represented exactly but will be rounded to the nearest floating point number which we will say

is

\( 2\cdot 10^{22} . \) This means that our matrices are now floating point matrices

L' and

U':

\[

{\bf L}' = \begin{bmatrix} 1&0 \\ 10^{22} & 1 \end{bmatrix} , \qquad {\bf U}' = \begin{bmatrix} 10^{-22}&2 \\ 0 & - 2\cdot 10^{22} \end{bmatrix} .

\]

When we compute

L'U', we get

\[

{\bf L}' {\bf U}' = \begin{bmatrix} 10^{-22}&2 \\ 1 & 0 \end{bmatrix} .

\]

The answer should be equal to

A, but obviously that is not the case. The 3 in position

(2,2) of matrix

A is now 0. Also, when trying to solve a system such as

\( {\bf A} \, {\bf x} = {\bf b} \)

using the LU factorization, the factors

L'U' would not give you a correct answer. The

LU factorization was a stable computation but not backward stable.

Recall that an \( n \times n \) permutation matrix P is a matrix with

precisely one entry whose value is "1" in each column and row, and all of whose other entries are "0." The rows of

P are a permutation of the rows of the identity matrix. Since not every matrix has LU decomposition,

we try to find a permulation matrix P such that PA has LU factorization:

\( {\bf P}\,{\bf A} = {\bf L}\,{\bf U} , \) where L and U

are again lower and upper triangular matrices, and P is a permutation matrix. In this case, we say that A has a PLU factorization.

It is also referred to as the LU factorization with Partial Pivoting (LUP) with row permutations only. An

LU factorization with full pivoting involves both row and column permutations, \( {\bf P}\,{\bf A}\, {\bf Q} = {\bf L}\,{\bf U} , \)

where L and U, and P are defined as before, and Q is a permutation matrix that reorders the columns of A.

For a permutation matrix P, the product PA is a new matrix whose rows consists

of the rows of A rearranged in the new order. Note that a product of permutation matrices is a permutation matrix.

Every permutation matrix is an orthogonal matrix: \( {\bf P}^{-1} = {\bf P}^{\mathrm T} . \)

Example. Let P be a permutation matrix that interchange rows 1 and 2 and

also interchange rows 3 and 4:

\[

{\bf P}\, {\bf A} = \begin{bmatrix} 0&1&0&0 \\ 1&0&0&0 \\ 0&0&0&1 \\ 0&0&1&0 \end{bmatrix}

\begin{bmatrix} a_{1,1}&a_{1,2}&a_{1,3}&a_{1,4} \\ a_{2,1}&a_{2,2}&a_{2,3}&a_{2,4} \\ a_{3,1}&a_{3,2}&a_{3,3}&a_{3,4} \\ a_{4,1}&a_{4,2}&a_{4,3}&a_{4,4} \end{bmatrix} =

\begin{bmatrix} a_{2,1}&a_{2,2}&a_{2,3}&a_{2,4} \\ a_{1,1}&a_{1,2}&a_{1,3}&a_{1,4} \\ a_{4,1}&a_{4,2}&a_{4,3}&a_{4,4} \\ a_{3,1}&a_{3,2}&a_{3,3}&a_{3,4} \end{bmatrix} .

\]

However,

\[

{\bf A} {\bf P} = \begin{bmatrix} a_{1,1}&a_{1,2}&a_{1,3}&a_{1,4} \\ a_{2,1}&a_{2,2}&a_{2,3}&a_{2,4} \\ a_{3,1}&a_{3,2}&a_{3,3}&a_{3,4} \\ a_{4,1}&a_{4,2}&a_{4,3}&a_{4,4} \end{bmatrix}

\begin{bmatrix} 0&1&0&0 \\ 1&0&0&0 \\ 0&0&0&1 \\ 0&0&1&0 \end{bmatrix} =

\begin{bmatrix} a_{1,2}&a_{1,1}&a_{1,4}&a_{1,3} \\ a_{2,2}&a_{2,1}&a_{2,4}&a_{2,3} \\ a_{3,2}&a_{3,1}&a_{3,4}&a_{3,3} \\ a_{4,2}&a_{4,1}&a_{4,4}&a_{4,3} \end{bmatrix} .

\]

Theorem: If A is a nonsingular matrix, then there exists a

permutation matrix P so that PA has an LU-factorization.

■

Pivoting for LU factorization is the process of systematically selecting pivots for Gaussian elimination during

the LU-decomposition of a matrix. The LU factorization is closely related to Gaussian

elimination, which is unstable in its pure form. To guarantee the elimination process goes to completion, we must

ensure that there is a nonzero pivot at every step of the elimination process. This is the reason we need pivoting

when computing LU decompositions. But we can do more with pivoting than just making sure Gaussian elimination

completes. We can reduce roundoff errors during computation and make our algorithm backward stable by implementing

the right pivoting strategy. Depending on the matrix A, some LU

decompositions can become numerically unstable if relatively

small pivots are used. Relatively small pivots cause instability because they operate very similar

to zeroes during Gaussian elimination. Through the process of pivoting, we can greatly reduce this

instability by ensuring that we use relatively large entries as our pivot elements. This prevents large

factors from appearing in the computed L and U, which reduces roundoff errors during computation.

The goal of partial pivoting is to use a permutation matrix to place the largest entry of the first

column of the matrix at the top of that first column. For an \( n \times n \)

matrix A, we scan n rows of the first column for the largest value. At step k

of the elimination, the pivot we choose is the largest of the n- (k+1)

subdiagonal entries of column k, which costs O(nk) operations for each step of the

elimination. So for an n-by-n matrix, there is a total of \( O(n^2 ) \)

comparisons. Once located, this entry is then moved into the pivot position

\( A_{k,k} \) on the diagonal of the matrix.

Example. Use PLU factorization to solve the linear system

\[

\begin{bmatrix} 2&4&2 \\ 4&-10&2 \\ 1&2&4 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} =

\begin{bmatrix} 5 \\ -8 \\ 13 \end{bmatrix} .

\]

We want to use a permutation matrix to place the largest entry of the first column

into the position

(1,1). For this matrix, this means we want 4 to be the first entry of the first column.

So we use the permutation matrix

\[

{\bf P}_1 = \begin{bmatrix} 0&1&0 \\ 1&0&0 \\ 0&0&1 \end{bmatrix} \qquad \Longrightarrow \qquad {\bf P}_1 {\bf A} =

\begin{bmatrix} 4&-10&2 \\ 2&4&2 \\ 1&2&4 \end{bmatrix} .

\]

Then we use the matrix

M to eliminate the bottom two entries of the first column:

\[

{\bf M}_1 = \begin{bmatrix} 1&0&0 \\ -2/4&1&0 \\ -1/4&0&1 \end{bmatrix} \qquad \Longrightarrow \qquad {\bf M}_1 {\bf P}_1 {\bf A} =

\begin{bmatrix} 4&-10&2 \\ 0&9&1 \\ 0&9/2&7/2 \end{bmatrix} .

\]

For latter matrix we don't need pivoting because the middle of the second row is the largest. So we just multiply by

\[

{\bf M}_2 = \begin{bmatrix} 1&0&0 \\ 0&1&0 \\ 0&-1/2&1 \end{bmatrix} \qquad \Longrightarrow \qquad {\bf M}_2 {\bf M}_1 {\bf P}_1 {\bf A} =

\begin{bmatrix} 4&-10&2 \\ 0&9&1 \\ 0&0&3 \end{bmatrix} .

\]

Since the inverse of

\( {\bf M}_2 {\bf M}_1 \) is the required lower triangular matrix

\[

{\bf M}_2 {\bf M}_1 = \begin{bmatrix} 1&0&0 \\ -1/2&1&0 \\ 0&-1/2&1 \end{bmatrix} \qquad \Longrightarrow \qquad

{\bf L} = \left( {\bf M}_2 {\bf M}_1 \right)^{-1} =

\begin{bmatrix} 1&0&0 \\ 1/2&1&0 \\ 1/4&1/2&1 \end{bmatrix} ,

\]

we have

\[

{\bf A} = \begin{bmatrix} 2&4&2 \\ 4&-10&2 \\ 1&2&4 \end{bmatrix} , \quad {\bf L} = \begin{bmatrix} 1&0&0 \\ 1/2&1&0 \\ 1/4&1/2&1 \end{bmatrix} , \quad

{\bf U} = \begin{bmatrix} 4&-10&2 \\ 0&9&0 \\ 0&0&7/2 \end{bmatrix} , \quad {\bf P} = \begin{bmatrix} 0&1&0 \\ 1&0&0 \\ 0&0&1 \end{bmatrix} ,

\]

then

\[

{\bf L}{\bf U} = \begin{bmatrix} 4&-10&2 \\ 2&4&1 \\ 1&2&4 \end{bmatrix} , \quad {\bf P}{\bf A} = \begin{bmatrix} 4&-10&2 \\ 2&4&1 \\ 1&2&4 \end{bmatrix} .

\]

Thus, the solution becomes

\[

{\bf x} = \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \begin{bmatrix} 1 \\ -1 \\ 3 \end{bmatrix} .

\]

Algorithm for PLU-factorization: is based on the following formula for upper triangular matrix

\( {\bf U} = \left[ u_{i,j} \right] : \)

\[

u_{i,j} = a_{i,j} - \sum_{k=1}^{i-1} u_{k,j} l_{i.k} ,

\]

with similar formula for

\( {\bf L} = \left[ l_{i,j} \right] . \) To ensure that the algorithm is numerically stable when

\( u_{j,j} \ll 0, \)

a pivoting matrix

P is used to re-order

A so that the largest element of each

column of

A gets shifted to the diagonal of

A. The formula for elements of

L follows:

\[

l_{i,j} = \frac{1}{u_{j,j}} \left( a_{i,j} - \sum_{k=1}^{j-1} u_{k,j} l_{i,k} \right) .

\]

Step 1: If \( n=1 , \) return \( {\bf P} =1, \quad {\bf L} = 1, \quad {\bf U} = a_{1,2} . \)

Step 2: Find a non-zero element \( a_{i,1} \) in the first row of A.

(If not, then the system is not solvable.)

Step 3: Define a permutation matrix \( {\bf Q}_{n \times n} \)

such that

-

\( q_{i,1} = q_{1,i} =1 . \)

-

\( q_{j,j} =1 \) for \( j \ne 1, \ j\ne i . \)

- All the other elements of Q are 0. ■

Step 4: Let \( {\bf B} = {\bf Q} {\bf A} . \)

Note that \( b_{1,1} = a_{i,1} \ne 0. \)

Step 5: Write B as \( {\bf B} =

\begin{bmatrix} b_{1,1} & {\bf r}^{\mathrm T} \\ {\bf c} & {\bf C}_1 \end{bmatrix} . \)

Step 6: Define \( {\bf B}_1 = {\bf C}_1 - {\bf c} \cdot {\bf r}^{\mathrm T} / b_{1,1} .\)

Recursively compute \( {\bf B}_1 = {\bf P}_1 {\bf L}_1 {\bf U}_1 . \)

Step 7:

\[

{\bf P} = {\bf Q}^{\mathrm T} \begin{bmatrix} 1&0 \\ 0& {\bf P}_1 \end{bmatrix} , \qquad

{\bf L} = \begin{bmatrix} 1&0 \\ {\bf P}_1^{\mathrm T} {\bf c}/ b_{1,1} & {\bf L}_1 \\ \end{bmatrix} , \qquad

{\bf U} = \begin{bmatrix} b_{1,1}&{\bf r}^{\mathrm T} \\ 0& {\bf U}_1 \end{bmatrix} . \quad ■

\]

When employing the

complete pivoting strategy we scan for the largest value in the entire submatrix

\( {\bf A}_{k:n,k:n} , \) where

k is the number of the elimination, instead of

just the next subcolumn. This requires

\( O \left( (n-k)^2 \right) \) comparisons for

every step in the elimination to find the pivot. The total cost becomes

\( O \left( n^3 \right) \) comparisons for an

n-by-

n matrix. For an

\( n \times n \) matrix

A, the first step is to scan

n rows and

n columns for the largest value. Once located, this entry is then permuted into the

next diagonal pivot position of the matrix. So in the first step the entry is permuted into the

(1,1)

position of matrix

A. We interchange rows exactly as we did in partial pivoting, by multiplying

A on the left with a permutation matrix

P. Then to interchange columns, we multiply

A on the right by another permutation matrix

Q. The matrix product

PAQ interchanges rows and columns accordingly so that the largest entry in the matrix is in the

(1,1) position of

A. With complete pivoting, the general equation for

L

is the same as for partial pivoting, but the equation for

U is slightly different.

Complete pivoting is theoretically the most stable strategy

as it can be used even when a matrix is singular, but it is much slower than partial pivoting.

Example.

Consider the matrix A:

\[

{\bf A} = \begin{bmatrix} 2&3&4 \\ 4&7&5 \\ 4&9&5 \end{bmatrix} .

\]

In this case, we want to use two different permutation matrices to place the largest entry of the entire matrix

into the position

\( A_{1,1} .\) For the given matrix, this means we want 9 to be the

first entry of the first column. To achieve this we multiply A on the left by the permutation matrix

\( {\bf P}_1 \) and multiply on the right by the permutation matrix

\( {\bf Q}_1 .\)

\[

{\bf P}_1 = \begin{bmatrix} 0&0&1 \\ 0&1&0 \\ 1&0&0 \end{bmatrix} , \quad {\bf Q}_1 = \begin{bmatrix} 0&1&0 \\ 1&0&0 \\ 0&0&1 \end{bmatrix} , \quad

{\bf P}_1 {\bf A} {\bf Q}_1 = \begin{bmatrix} 9&4&5 \\ 7&4&5 \\ 3&2&4 \end{bmatrix} .

\]

Then in computing the LU factorization, the matrix

M is used to eliminate the bottom two

entries of the first column:

\[

{\bf M}_1 = \begin{bmatrix} 1&0&0 \\ -7/9&1&0 \\ -1/3&0&1 \end{bmatrix} \qquad \Longrightarrow \qquad {\bf M}_1 {\bf P}_1 {\bf A} {\bf Q}_1 =

\begin{bmatrix} 9&4&5 \\ 0&8/9&10/9 \\ 0&2/3&7/3 \end{bmatrix} .

\]

Then, the permutation matrices

P and

Q are used to move the largest entry of the submatrix

\( {\bf A}_{2:3;2:3} \)

into the position

\( A_{2,2} : \)

\[

{\bf P}_2 = \begin{bmatrix} 1&0&0 \\ 0&0&1 \\ 0&1&0 \end{bmatrix} = {\bf Q}_2 \qquad \Longrightarrow \qquad {\bf P}_2 {\bf M}_1 {\bf P}_1 {\bf A} {\bf Q}_1 {\bf Q}_2 =

\begin{bmatrix} 9&4&5 \\ 0&7/3&2/3 \\ 0&10/9&8/9 \end{bmatrix} .

\]

As we did before, we are going to use matrix multiplication to eliminate entries at the bottom

of the column 2:

\[

{\bf M}_2 = \begin{bmatrix} 1&0&0 \\ 0&1&0 \\ 0&-10/21&1 \end{bmatrix} \qquad \Longrightarrow \qquad {\bf M}_2 {\bf P}_2 {\bf M}_1 {\bf P}_1 {\bf A} {\bf Q}_1 {\bf Q}_2 =

\begin{bmatrix} 9&4&5 \\ 0&7/3&2/3 \\ 0&0&4/7 \end{bmatrix} .

\]

This upper triangular matrix product is the

U matrix. The

L matrix comes from the inverse of

the product of

M and

P matrices:

\[

{\bf L} = \left( {\bf M}_2 {\bf P}_2 {\bf M}_1 {\bf P}_1 \right)^{-1} =

\begin{bmatrix} 1&0&0 \\ 1/3&1&0 \\ 7/9&10/21&1 \end{bmatrix} .

\]

To check, we can show that

\( {\bf P} {\bf A} {\bf Q} = {\bf L} {\bf U} , \) where

\( {\bf P} = {\bf P}_2 {\bf P}_1 \) and

\( {\bf Q} = {\bf Q}_1 {\bf Q}_2 . \) ■

Rook pivoting is a third alternative pivoting strategy. For this strategy, in the

kth step of the elimination, where \( 1 \le k \le n-1 \) for an

n-by-n matrix A, we scan the submarix \( {\bf A}_{k:n;k:n} \)

for values that are the largest in their respective row and column. Once we have found our potential pivots,

we are free to choose any that we please. This is because the identified potential pivots are large

enough so that there will be a very minimal difference in the end factors, no matter which pivot is

chosen. When a computer uses rook pivoting, it searches only for the first entry that is the largest

in its respective row and column. The computer chooses the first one it finds because even if there

are more, they all will be equally effective as pivots. This allows for some options in our decision

making with regards to the pivot we want to use.

Example.

Consider the matrix A:

\[

{\bf A} = \begin{bmatrix} 1&4&7 \\ 7&8&2 \\ 9&5&1 \end{bmatrix} .

\]

When using the partial pivoting technique, we would identify 8 as our first pivot element. When

using complete pivoting, we would use 9 as our first pivot element. When rook pivoting we identify

entries that are the largest in their respective rows and columns. So following this logic, we identify

8, 9, and 7 all as potential pivots. In this example we will choose 7 as the first pivot. Since we are

choosing 7 for our first pivot element, we multiply

A on the right by the permutation matrix

Q to move 7 into the position (1,1). Note that we will also multiply on the left of

A

by

P, but

P is simply the identity matrix so there will be no effect on

A.

\[

{\bf Q}_1 = \begin{bmatrix} 0&0&1 \\ 0&1&0 \\ 1&0&0 \end{bmatrix} \qquad\Longrightarrow \qquad {\bf P}_1 {\bf A} {\bf Q}_1 =

\begin{bmatrix} 7&4&1 \\ 2&8&7 \\ 1&5&9 \end{bmatrix} .

\]

Then we eliminate the entries in row 2 and 3 of column 1 via the matrix

\[

{\bf M}_1 = \begin{bmatrix} 1&0&0 \\ -2/7&1&0 \\ -1/7&0&1 \end{bmatrix} \qquad\Longrightarrow \qquad {\bf M}_1 {\bf P}_1 {\bf A} {\bf Q}_1 =

\begin{bmatrix} 7&4&1 \\ 0&48/7&47/7 \\ 0&30/7&62/7 \end{bmatrix} .

\]

If we were employing partial pivoting, our pivot would be 48/7 and if we were using complete

pivoting, our pivot would be 62/7. But using the rook pivoting strategy allows us to choose either

48/7 or 62/7 as our next pivot. In this situation, we do not need to permute the matrix

\( {\bf M}_1 {\bf P}_1 {\bf A}\,{\bf Q}_1 \) to

continue with our factorization because one of our potential pivots is already in pivot postion. To

keep things interesting, we will permute the matrix anyway and choose 62/7

to be our next pivot. To achieve this we wil multiply by the matrix

\( {\bf P}_2 \)

on the left and by the matrix

\( {\bf Q}_2 \) on the right.

\[

{\bf P}_2 = \begin{bmatrix} 1&0&0 \\ 0&0&1 \\ 0&1&0 \end{bmatrix} = {\bf Q}_2 \qquad\Longrightarrow \qquad {\bf P}_2 {\bf M}_1 {\bf P}_1 {\bf A} {\bf Q}_1 {\bf Q}_2 =

\begin{bmatrix} 7&1&4 \\ 0&62/7&30/7 \\ 0&47/7&48/7 \end{bmatrix} .

\]

At this point, we want to eliminate the last entry of the second column to complete the factorization. We achieve

this by matrix

\( {\bf M}_2 :\)

\[

{\bf M}_2 = \begin{bmatrix} 1&0&0 \\ 0&0&1 \\ 0&-47/62&1 \end{bmatrix} \qquad\Longrightarrow \qquad {\bf M}_2 {\bf P}_2 {\bf M}_1 {\bf P}_1 {\bf A} {\bf Q}_1 {\bf Q}_2 =

\begin{bmatrix} 7&1&4 \\ 0&62/7&30/7 \\ 0&0&7/2 \end{bmatrix} .

\]

After the elimination the matrix is now upper triangular which we recognize as the factor

U. The factor

L is equal to the matrix product

\( {\bf L} = \left( {\bf M}_2 {\bf P}_2 {\bf M}_1 {\bf P}_1 \right)^{-1} . \) You can use Sage to check that

PAQ = LU, where

\( {\bf P} = {\bf P}_2 {\bf P}_1 \) and

\( {\bf Q} = {\bf Q}_1 {\bf Q}_2 \)

\begin{align*}

{\bf P\,A\,Q} &= \begin{bmatrix} 1&0&0 \\ 0&0&1 \\ 0&1&0 \end{bmatrix} \begin{bmatrix} 1&4&7 \\ 7&8&2 \\ 9&5&1 \end{bmatrix} \begin{bmatrix} 0&1&0 \\ 0&0&1 \\ 1&0&0 \end{bmatrix} \\

&= \begin{bmatrix} 1&0&0 \\ 1/7&1&0 \\ 2/7&47/62&1 \end{bmatrix} \begin{bmatrix} 7&1&4 \\ 0&62/7&30/7 \\ 0&0&7/2 \end{bmatrix} = {\bf L\,U} .

\end{align*}

sage: nsp.is_finite()

False

Reflection

Suppose that we are given a line spanned over the vector

a in

\( \mathbb{R}^n , \) and we need to find a matrix

H

of reflection about the line through the origin in the plane. This matrix

H should fix every vector on line, and

should send any vector not on the line to its mirror image about the line. The subspace

\( {\bf a}^{\perp} \)

is called the hyperplane in

\( \mathbb{R}^n , \) orthogonal to

a. The

\( n \times n \)

orthogonal matrix

\[

{\bf H}_{\bf a} = {\bf I}_n - \frac{2}{{\bf a}^{\mathrm T} {\bf a}} \, {\bf a} \, {\bf a}^{\mathrm T}

= {\bf I}_n - \frac{2}{\| {\bf a} \|^2} \, {\bf a} \, {\bf a}^{\mathrm T}

\]

is called the Householder matrix or the Householder reflection about

a, named in honor of the American mathematician Alston Householder (1904--1993).

The complexity of the Householder algorithm is \( 2mn^2 - (2/3)\, n^3 \) flops

(arithmetic operations). The Householder matrix \( {\bf H}_{\bf a} \) is symmetric,

orthogonal, diagonalizable, and all its eigenvalues are 1's except one which is -1. Moreover, it is idempotent:

\( {\bf H}_{\bf a}^2 = {\bf I} . \) When \( {\bf H}_{\bf a} \) is applied to a vector x,

it reflects x through hyperplane \( \left\{ {\bf z} \, : \, {\bf a}^{\mathrm T} {\bf z} =0 \right\} . \)

Example.

Consider the vector \( {\bf u} = \left[ 1, -2 , 2 \right]^{\mathrm T} . \) Then the

Householder reflection with respect to vector u is

\[

{\bf H}_{\bf u} = {\bf I} - \frac{2}{9} \, {\bf u} \,{\bf u}^{\mathrm T} = \frac{1}{9} \begin{bmatrix} 7&4&-4 \\ 4&1&8 \\ -4&8&1 \end{bmatrix} .

\]

The orthogonal symmetric matrix

\( {\bf H}_{\bf u} \) is indeed a reflection because its eigenvalues are -1,1,1,

and it is idempotent. ■

Theorem: If u and v are distinct vectors in

\( \mathbb{R}^n \) with the same norm, then the Householder reflection about the hyperplane

\( \left( {\bf u} - {\bf v} \right)^{\perp} \) maps u into v and conversely.

■

We check this theorem for two-dimensional vectors \( {\bf u} = \left[ u_1 , u_2 \right]^{\mathrm T} \)

and \( {\bf v} = \left[ v_1 , v_2 \right]^{\mathrm T} \) of the same norm. So given

\( \| {\bf v} \| = \| {\bf u} \| , \) we calculate

\[

\| {\bf u} - {\bf v} \|^2 = \| {\bf u} \|^2 + \| {\bf v} \|^2 - \langle {\bf u}, {\bf v} \rangle - \langle {\bf v} , {\bf u} \rangle =

2 \left( u_1^2 + u_2^2 - u_1 v_1 - u_2 v_2 \right) .

\]

Then we apply the Householder reflection

\( {\bf H}_{{\bf u} - {\bf v}} \) to the vector

u:

\begin{align*}

{\bf H}_{{\bf u} - {\bf v}} {\bf u} &= {\bf u} - \frac{2}{\| {\bf u} - {\bf v} \|^2}

\begin{bmatrix} \left( u_1 - v_1 \right)^2 u_1 + \left( u_1 - v_1 \right) \left( u_2 - v_2 \right) u_2 \\

\left( u_1 - v_1 \right) \left( u_2 - v_2 \right) u_1 + \left( u_2 - v_2 \right)^2 u_2 \end{bmatrix} \\

&= \frac{1}{u_1^2 + u_2^2 - u_1 v_1 - u_2 v_2} \begin{bmatrix} v_1 \left( u_1^2 + u_2^2 - u_1 v_1 - u_2 v_2 \right) \\

v2 \left( u_1^2 + u_2^2 - u_1 v_1 - u_2 v_2 \right) \end{bmatrix} = {\bf v} .

\end{align*}

Similarly,

\( {\bf H}_{{\bf u} - {\bf v}} {\bf v} = {\bf u} . \) ■

Example.

We find a Householder reflection that maps the vector \( {\bf u} = \left[ 1, -2 , 2 \right]^{\mathrm T} \)

into a vector v that has zeroes as its second and third components. Since this vecor has to be of the same norm, we get

\( {\bf v} = \left[ 3, 0 , 0 \right]^{\mathrm T} . \) Since

\( {\bf a} = {\bf u} - {\bf v} = \left[ -2, -2 , 2 \right]^{\mathrm T} , \) the

Householder reflection \( {\bf H}_{{\bf a}} = \frac{1}{3} \begin{bmatrix} 1&-2&2 \\ -2&1&2 \\ 2&2&1 \end{bmatrix} \) maps the vector \( {\bf u} = \left[ 1, -2 , 2 \right]^{\mathrm T} \)

into a vector v. Matrix \( {\bf H}_{{\bf a}} \) is idempotent (\( {\bf H}_{{\bf a}}^2 = {\bf I} \) ) because its eigenvalues are -1, 1, 1. ■

sage: nsp.is_finite()

False

We use the Householder reflection to obtain an alternative version of LU-decomposition. Consider the following m-by-n matrix:

\[

{\bf A} = \begin{bmatrix} a & {\bf v}^{\mathrm T} \\ {\bf u} & {\bf E} \end{bmatrix} ,

\]

where

u and

v are

m-1 and

n-1 vectors, respectively. Let σ be the

2-norm of the first column of

A. That is, let

\[

\sigma = \sqrt{a^2 + {\bf u}^{\mathrm T} {\bf u}} .

\]

Assume that σ is nonzero. Then the vector

u in the matrix A can be annihilated using a

Householder reflection given by

\[

{\bf P} = {\bf I} - \beta {\bf h}\, {\bf h}^{\mathrm T} ,

\]

where

\[

{\bf h} = \begin{bmatrix} 1 \\ {\bf z} \end{bmatrix} , \quad \beta = 1 + \frac{a}{\sigma_a} , \quad {\bf z} = \frac{\bf u}{\beta \sigma_a} , \quad \sigma_a = \mbox{sign}(a) \sigma .

\]

It is helpful in what follows to define the vectors

q and

p as

\[

{\bf q} = {\bf E}^{\mathrm T} {\bf z} \qquad\mbox{and} \qquad {\bf p} = \beta \left( {\bf v} + {\bf q} \right) .

\]

Then

\[

{\bf P}\,{\bf A} = \begin{bmatrix} \alpha & {\bf w}^{\mathrm T} \\ {\bf 0} & {\bf K} \end{bmatrix} ,

\]

where

\[

\alpha = - \sigma_a , \quad {\bf w}^{\mathrm T} = {\bf v}^{\mathrm T} - {\bf p}^{\mathrm T} , \quad {\bf K} = {\bf E} - {\bf z}\,{\bf p}^{\mathrm T} .

\]

The above formulas suggest the following version of Householder transformations that access the entries of the matrix in a row by row manner.

Algorithm (Row-oriented vertion of Householder transformations):

Step 1: Compute \( \sigma_a \) and β

\[

\sigma = \sqrt{a^2 + {\bf u}^{\mathrm T} {\bf u}} , \qquad \sigma_a = \mbox{sign}(a) \,\sigma , \qquad \beta = a + \frac{a}{\sigma_a} .

\]

Step 2: Compute the factor row \( \left( \alpha , {\bf w}^{\mathrm T} \right) : \)

\[

\alpha = - \sigma_a , \qquad {\bf z} = \frac{\bf u}{\beta \sigma_a} .

\]

Compute the appropriate linear combination of the rows of

A (except for the first column) by computing

\[

{\bf q}^{\mathrm T} = {\bf z}^{\mathrm T} {\bf E}, \qquad {\bf p}^{\mathrm T} = \beta \left( {\bf v}^{\mathrm T} + {\bf q}^{\mathrm T} \right) , \qquad

{\bf w}^{\mathrm T} = {\bf v}^{\mathrm T} - {\bf p}^{\mathrm T} .

\]

Step 3: Apply Gaussian elimination using the pivot row and column from step 2:

\[

\begin{bmatrix} 1 & {\bf p}^{\mathrm T} \\ {\bf z} & {\bf E} \end{bmatrix} \, \rightarrow \,

\begin{bmatrix} 1 & {\bf p}^{\mathrm T} \\ {\bf 0} & {\bf K} \end{bmatrix} ,

\]

where

\( {\bf K} = {\bf E} - {\bf z} \,{\bf p} ^{\mathrm T} . \) ■

This formulation of Householder transformations can be regarded as a special kind of Gauss elimination, where the

pivot row is computed from a linear combination of the rows of the matrix, rather than being taken directly from it.

The numerical stability of the process can be seen from the fact that \( |z_i | \le 1 \) and

\( |w_j | \le \beta \le 2 \) for every entry in z and w.

The Householder algorithm is the most widely used algorithm for QR factorization (for instance, qr in MATLAB) because

it is less sensitive to rounding error than Gram--Schmidt algorithm.

Givens rotation

In numerical linear algebra, a Givens rotation is a rotation in the plane spanned by two coordinates axes.

Givens rotations are named after James Wallace Givens, Jr. (1910--1993), who introduced them to numerical analysis

in the 1950s while he was working at Argonne National Laboratory. A Givens rotation is represented by a matrix of the form

\[

G(i,j,\theta ) = \begin{bmatrix} 1& \cdots & 0 &\cdots & 0 & \cdots & 0 \\

\vdots & \ddots & \vdots && \vdots && \vdots \\

0 & \cdots & c & \cdots & -s & \cdots & 0 \\

\vdots && \vdots & \ddots & \vdots & & \vdots \\

0 & \cdots & s & \cdots & c & \cdots & 0 \\

\vdots && \vdots && \vdots & \ddots & \vdots \\

0 & \cdots & 0 & \cdots & 0 & \cdots & 1

\end{bmatrix} ,

\]

where

\( c= \cos\theta \quad\mbox{and} \quad s= \sin \theta \) appear at the intersections

ith and

jth

rows and columns. The product

\( G(i,j, \theta ){\bf x} \) represents a counterclockwise

rotation of the vector

x in the

(i,j) plane of θ radians, hence the name

Givens rotation.

The main use of Givens rotations in numerical linear algebra is to introduce zeroes in vectors or matrices.

This effect can, for example, be employed for computing the QR decomposition of a matrix. One advantage over

Householder transformations is that they can easily be parallelised, and another is that often for very sparse matrices

they have a lower operation count.

When a Givens rotation matrix, \( G(i,j, \theta ) , \) multiplies another matrix, A,

from the left, \( G(i,j, \theta )\, {\bf A} , \) only rows i and j of A are affected.

Thus we restrict attention to the following counterclockwise problem. Given a and b, find

\( c = \cos\theta \quad \mbox{and} \quad s = \sin\theta \) such that

\[

\begin{bmatrix} c&-s \\ s&c \end{bmatrix} \begin{bmatrix} a \\ b \end{bmatrix} = \begin{bmatrix} r \\ 0 \end{bmatrix} ,

\]

where

\( r = \sqrt{a^2 + b^2} \) is the length of the vector [

a,

b].

Explicit calculation of θ is rarely necessary or desirable. Instead we directly seek

c and

s.

An obvious solution would be

\[

c \,\leftarrow \,\frac{a}{r} , \qquad s \,\leftarrow \,-\frac{b}{r} .

\]

However, the computation for

r may overflow or underflow. An alternative formulation avoiding this problem is

implemented as the

hypot function in many programming languages.

Row Space and Column Space

If

A is an

\( m\times n \) matrix, then the subspace of

\( \mathbb{R}^n \)

spanned by the row vectors of

A is called the

row space of

A, and the subspace of

\( \mathbb{R}^m \)

spanned by the column vectors of

A is called the

column space of

A or the

range of the matrix. The row space of

A is the column space of the adjoint matrix

\( {\bf A}^{\ast} = \overline{\bf A}^{\mathrm T} = \overline{{\bf A}^{\mathrm T}} ,\)

which is a subspace of

\( \mathbb{R}^n .\)

Theorem: Elementary row operations do not change the row space of a matrix. ■

Theorem: The row space and the column space of a matrix have the same dimension. ■

Theorem: If a matrix R is in row echelon (Gaussian) form, then the row vectors with the leading coefficients (that are usually chosen as 1's)

form a basis for the row space of R, and the column vectors corresponding to the leading coefficients form a basis for the column space. ■

A Basis for the Range: Let \( L:\,\mathbb{R}^n\, \mapsto \, \mathbb{R}^m \)

is a linear transformation given by \( L({\bf x}) = {\bf A}\,{\bf x} , \) for some

\( m \times n \) matrix A. To find a basis for the range of L, perform the

following steps.

Step 1: Find B, the reduced row echelon form of A.

Step 2: Form the set of those columns of A whose corresponding columns in B have nonzero pivots.

The set of vectors in Step 2 is a basis for the range of L.

■

Example. Find a basis for the row space and for column space of the matrix

\[

{\bf A} = \left[ \begin{array}{cccccc} 1 &-3&4&-2&5&4 \\ 2 &-6&9 &-1&8&2 \\ 2&-6&9 &-1&9&7 \\ -1&3&-4&2&-5&-4 \end{array} \right] .

\]

Since elementary row operations do not change the row space of a matrix, we can find a basis for the row space of

A by finding a basis for the row space

of any row echelon form of

A. Reducing

A to Gaussian form, we obtain

\[

{\bf A} \, \sim \, {\bf R} = \left[ \begin{array}{cccccc} 1 &-3&4&-2&5&4 \\ 0 &0&1 &3&-2&-6 \\ 0&0&0 &0&1&5 \\ 0&0&0&0&0&0 \end{array} \right] .

\]

According to the above theorem, the nonzero row vectors of

R form a basis for the row space of

R and hence form a basis for the row space of

A. These basis vectors are

\begin{align*}

{\bf r}_1 &= \left[ 1, \ -3, \ 4,\ -2, \ 5, \ 4 \right] ,

\\

{\bf r}_2 &= \left[ 0, \ 0, \ 1,\ 3, \ -2, \ -6 \right] ,

\\

{\bf r}_3 &= \left[ 0, \ 0, \ 0,\ 0, \ 1, \ 5 \right]

\end{align*}

Keeping in mind that

A and

R can have different column spaces, we cannot find a basis for the column space of

A directly

from the column vectors of

R. However, we can determing them by identifying the corresponding column vectors of

A that form a basis for the column space.

Since the first, third, and fifth columns of

R contain the leading 1's of the row vectors, we claim that the vectors in these columns form the required basis:

\[

{\bf c}_1 = \left[ \begin{array}{c} 1 \\ 2 \\ 2 \\ -1 \end{array} \right] , \qquad {\bf c}_3 = \left[ \begin{array}{c} 4 \\ 9 \\ 9 \\ -4 \end{array} \right] , \qquad {\bf c}_5 = \left[ \begin{array}{c} 5 \\ 8 \\ 9 \\ -5 \end{array} \right] .

\]

Null Space or Kernel

If

A is an

\( m\times n \) matrix, then the solution space of the homogeneous system of algebraic equations

\( {\bf A}\,{\bf x} = {\bf 0} ,\) which is a subspace of

\( \mathbb{R}^n , \) is called the

null space or

kernel of matrix

A.

It is usually denoted by ker(

A). The null space of the adjoint matrix

\( {\bf A}^{\ast} = \overline{\bf A}^{\mathrm T} \) is

a subspace of

\( \mathbb{R}^m \) and is called the

cokernel of

A and denoted by coker(

A). ■