The Dual of a Dual Space

In several areas of mathematics, isomorphism appears as a very general concept. The word derives from the Greek "iso", meaning “equal”, and "morphosis", meaning “to form” or “to shape.” Informally, an isomorphism is a map that preserves sets and relations among elements. When this map or this correspondence is established with no choices involved, it is called canonical isomorphism.The construction of a dual may be iterated. In other words, we form a dual space \( \displaystyle \left( V^{\ast} \right)^{\ast} \) of the dual space V*; for simplicity of notation, we shall denote it by V**.

We prefer to speak of a function f (not mentioning its variable), keeping f(x) for the image of x under this map. This approach is very convenient when dealing with linear functionals. For example, Dirac's delta function

Note, that the null space of T is ker(T) = {0} due to uniqueness of linear functional. Finally, letting the element v vary in 𝔽-vector space V, we obtain a linear map

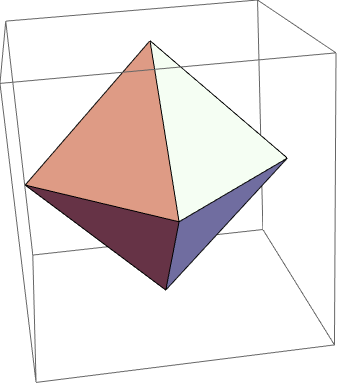

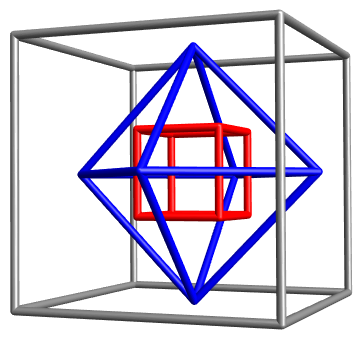

The figure below is a simple example of duality and how one may move easily from one to the other and back. Notice the commonality of six and eight in each figure. Each object has a set of 6 somethings and a set of eight somethings, but they are not the same things. For the cube it is six faces and eight vertices. Count the equivalent for the octahedron. Note the each have the same number of edges. There is a mathematical relation between the cube and the octahedron which permits one to "convert" one shape to the other; reversing that process returns to the original shape. Naturally, there is a scale factor involved, explaining why the outside cube is much larger than the one inside. The mathematical relationship is described above where the center of the faces of one are linked, thereby creating the other.

extractEdges[M_, r_] := MeshPrimitives[M, {1}] /. Line[x___] :> Tube[x, r];

M0 = RegionBoundary[PolyhedronData["Octahedron", "MeshRegion"]];

M1 = dualPolyhedron[M0];

M2 = dualPolyhedron[M1];

M3 = dualPolyhedron[M2];

r = 0.015/2;

Graphics3D[{Gray, Specularity[White, 30],(*extractEdges[M0,r],*) extractEdges[M1, r], Blue, Specularity[White, 30], extractEdges[M2, r], Red, Specularity[White, 30], extractEdges[M3, r]}, Lighting -> "Neutral", Boxed -> False, ViewPoint -> {3.143191699236158, 1.2515098689203545`, -0.06378863415898628}, ViewVertical -> {0.5617332461853343, 0.22780339858369236`, 0.7953372691656077}]

|

|

|

| Vertices (v) | Edges (e) | Faces (f) | f - e + v | |

|---|---|---|---|---|

| tetrahedron | 4 | 6 | 4 | 2 |

| cube | 8 | 12 | 6 | 2 |

| octahedron | 6 | 12 | 8 | 2 |

| dodecahedron | 20 | 30 | 12 | 2 |

| icosahedron | 12 | 30 | 20 | 2 |

The exchange of the numbers of faces (f) and vertices (v)

Since dimV** = dimV* = dimV, the condition ker(T) = {0} implies that T is an invertible transformation (isomorphism). The isomorphism T is very natural because it was defined without using a basis, so it does not depend on the choice of basis. So, informally we say that V** is canonically isomorphic to V; the rigorous statement is that the map T described above (which we consider to be a natural and canonical) is an isomorphism from V to V**.

When we defined the dual space V* from V, we did so by picking a special basis (the dual basis), therefore, the isomorphism from V to V* is not canonical because it depends on the basis. Since the dual space is intuitively the space of “rulers” (or measurement-instruments) of our vector space, and as so there is no canonical relationship between rulers and vectors because measurements need scaling, and there is no canonical way to provide one scaling for space.

On the other hand, the isomorphism \eqref{EqDual.4} between the initial vector space V and its double dual V** is canonical since mapping \eqref{EqDual.4} does not depend on local basis. If we were to calibrate the measure-instruments, a canonical way to accomplish it is to calibrate them by acting on what they are supposed to measure.

There are known two well-known methods to solve this optimization problem: the simplex _algorithm (invented by George Dantzig during the second world war) and Karmarkar’s Method (1984).

If optimal vectors do not exist, there are two possibilities: Either both feasible sets are empty, or one is empty and the other problem is unbounded (the maximum is +∞ or the minimum is −∞).

Application to Economics and Finance

The central question of economics is how to allocate scarce resources amongst alternative ends. This turns into a series of sub-issues:

- How much of what to apply and when?

- How does the application of any particular factor/resource change the output in both absolute and relative terms?

- What is the best combination of resources that produces the most efficient result?

Life involves trade-offs. Constraints force trade-offs into the picture. You can't go to the movie and the ballgame on the same night. One must make choices. A rational life means making choices which optimize your time/happiness/profits/career/retirement savings. The ideas of maximization and minimization become more interesting in the presence of constraints. Mathematica has very highly evolved algorithms for solving these kinds of problems.

The Primal Problem

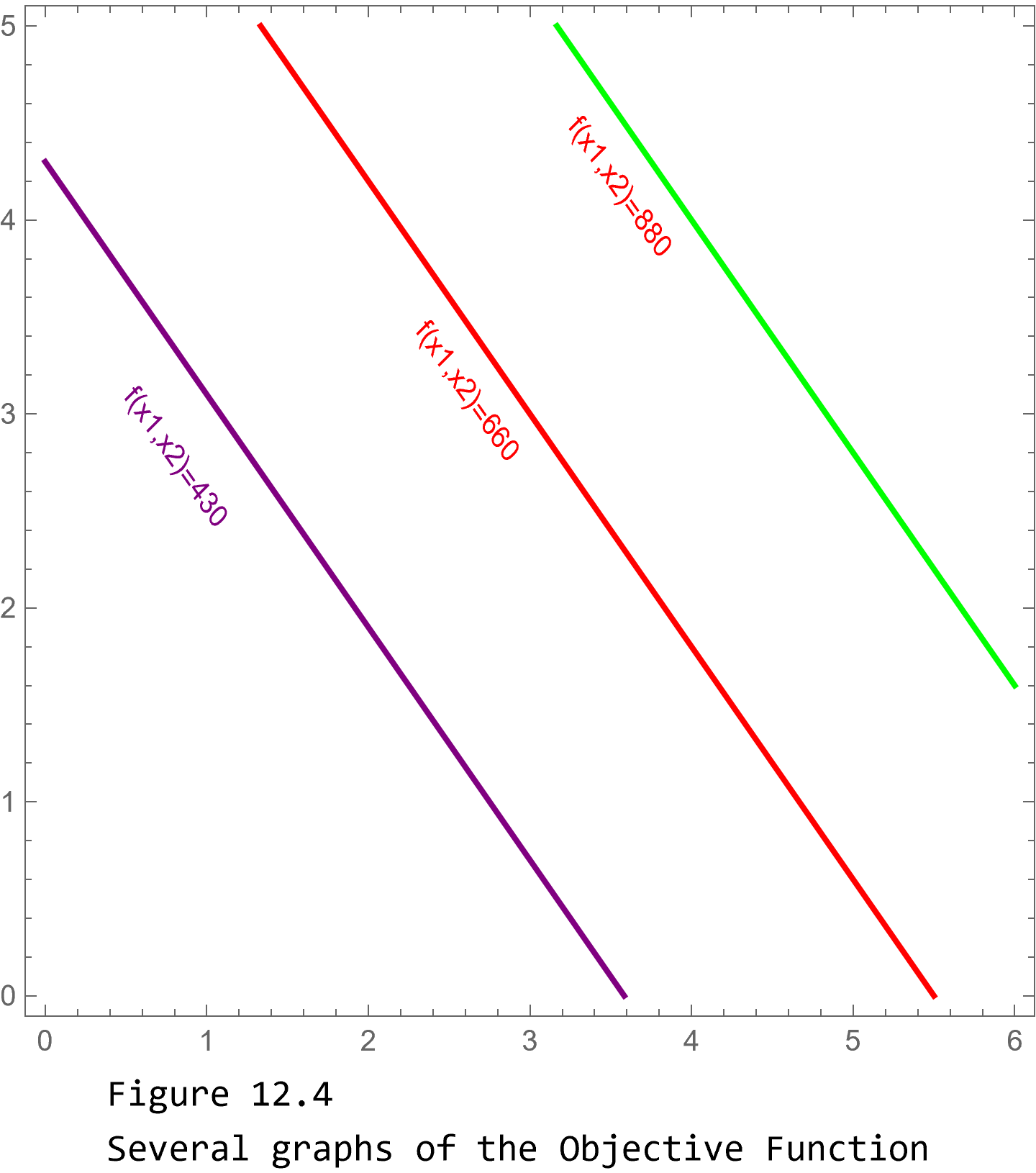

It all begins with stating the goal as an "objective function." This is the mathematical expression of what you wish to achieve. So here we have some x and y (inputs, products, choices) that must be combined in a certain way to get us where we want to be.

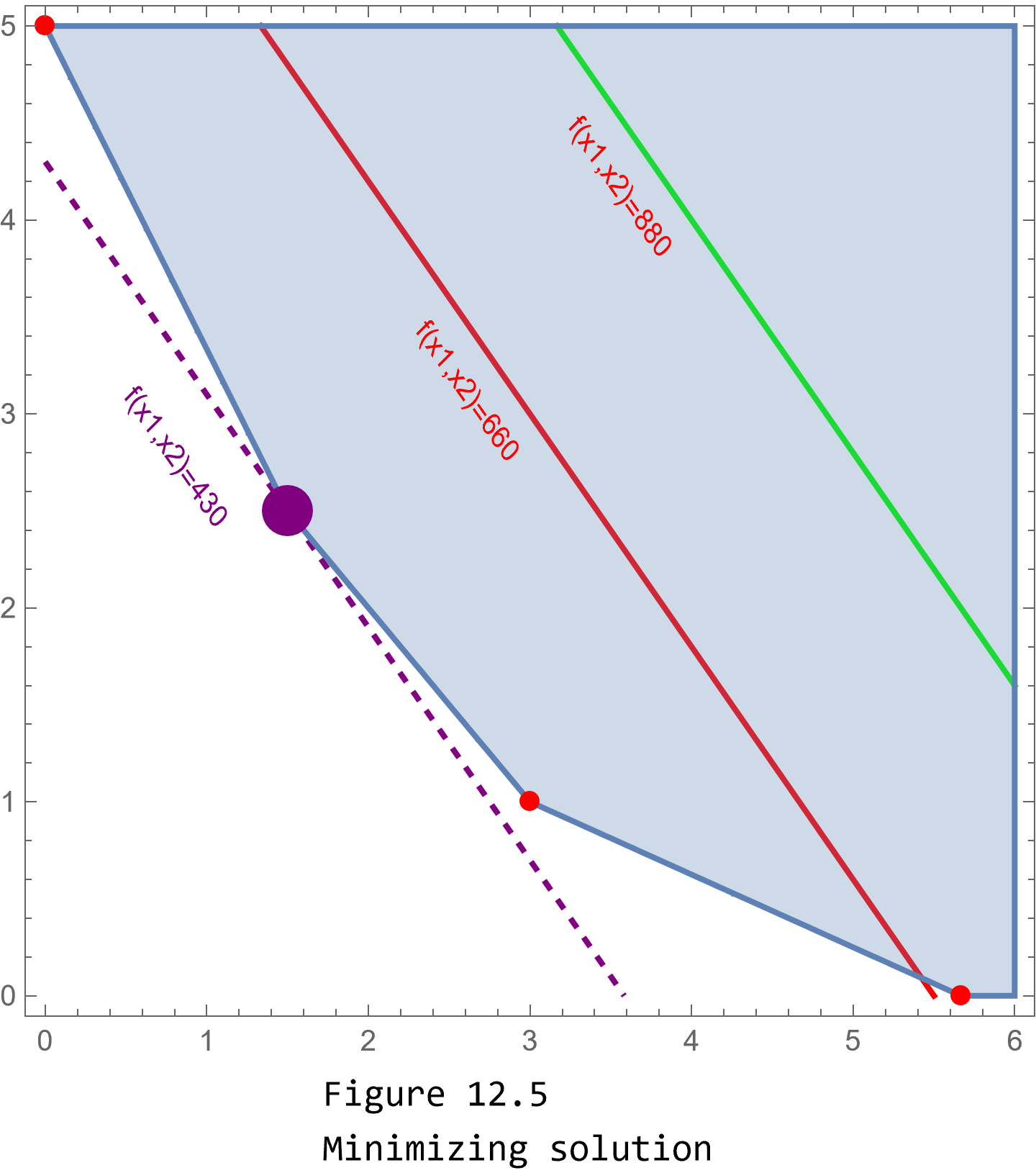

In order to illustrate the concepts, we consider a simple primal minimization problem:

minimize a cost function \( \displaystyle \qquad f(x_1 , x_2 ) = 120\,x_1 + 100\, x_2 \qquad \iff \qquad f({\bf x}) = {\bf c} \bullet {\bf x} , \quad \) where c = (120, 100) and x = (x₁, x₂),

subject to constraints:

f1[x1_, x2_] := 120 x1 + 100 x2;

(*Constraints*)

g1[x1_, x2_] := 2 x1 + 2 x2 >= 8

g2[x1_, x2_] := 5 x1 + 3 x2 >= 15

g3[x1_, x2_] := 3 x1 + 8 x2 >= 17

MatrixForm[A]

MatrixForm[b]

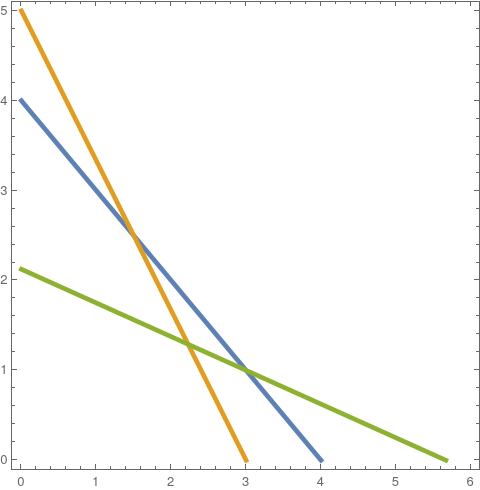

First, we find the solution of the formulated primal minimization problem geometrically, and then solve it analytically. So we plot the constraint functions

|

cntrPlt1 =

ContourPlot[{2 x + 2 y == 8, 5 x + 3 y == 15, 3*x + 8*y == 17}, {x, 0,

6}, {y, 0, 5}, ContourStyle -> Thickness[0.01]];

LLabeled[%, "Figure 12.1: Constraint Lines"]abeled[%, "Figure 12.1: Constraint Lines"] |

|

pt1 = Solve[{2 x1 + 2 x2 == 8, 5 x1 + 3 x2 == 15}, {x1, x2}][[1, All,

2]];

pt2 = Solve[{2 x1 + 2 x2 == 8, 3 x1 + 8 x2 == 17}, {x1, x2}][[1, All,

2]];

pt3 = {Solve[3 x1 + 8 x2 == 17 /. x2 -> 0, x1][[1, 1, 2]], 0};

pt4 = {0, 5};

pt5 = {5, .25};

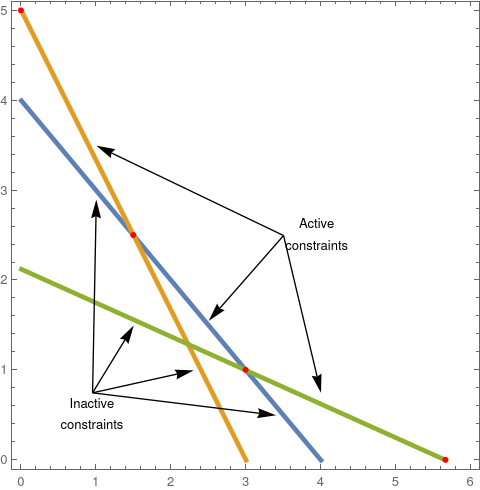

pts1 = Show[

Graphics[{Red, PointSize -> Medium, Point[#]}] & /@ {pt1, pt2, pt3,

pt4}];

arr1 = Graphics[{Arrow[{{3.5, 2.5}, {1, 3.5}}],

Arrow[{{3.5, 2.5}, {2.5, 1.55}}], Arrow[{{3.5, 2.5}, {4, .75}}], ,

Arrow[{{.95, .75}, {1.5, 1.5}}], Arrow[{{.95, .75}, {1, 2.9}}],

Arrow[{{.95, .75}, {2.3, 1}}], Arrow[{{.95, .75}, {3.4, .5}}],

Text["Inactive\nconstraints", {.95, .5}],

Text["Active\nconstraints", {3.95, 2.5}]}];

sh1 = Labeled[Show[cntrPlt1, pts1, arr1],

"Figure 12.2: Active Constraint Lines"]

|

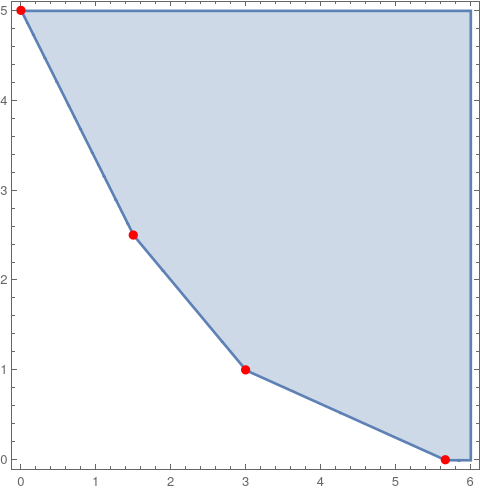

This allows us to plot the feasible region that displays the area bounded by all of the constraints. Also shown are four red points that represent so-called "corner solutions" where optimal values may be found. Note below that we have added two more constraints. Both x₁ and x₂ must be non-negative. This example is considered "stylized" in that is simplifies a context for teaching purposes.

|

regPlt1 =

RegionPlot[{2 x1 + 2 x2 >= 8 && 5 x1 + 3 x2 >= 15 &&

3*x1 + 8*x2 >= 17 && x1 >= 0 && x2 >= 0}, {x1, 0, 6}, {x2, 0, 5}];

Labeled[Show[%, pts1], "Figure 12.3: Feasible Region"]

|

Mathematica has several ways to find the minimum of this function, here are two:

f1[x_, y_] := 120 x + 100 y;

g1[x1_, x2_] := 2 x1 + 2 x2 >= 8;

g2[x1_, x2_] := 5 x1 + 3 x2 >= 15;

g3[x1_, x2_] = 3*x1 + 8*x2 >= 17;

FindMinimum[{f1[x1, x2], g1[x1, x2], g2[x1, x2], g3[x1,x2], x1 >= 0, x2 >= 0}, {x1, x2}]

LinearOptimization command:

|

|

The Dual Problem

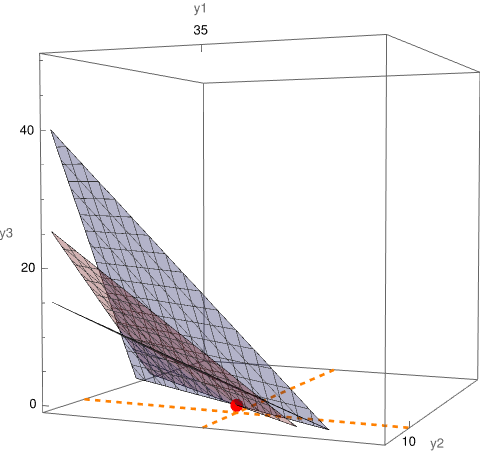

Now we formulate the dual problem: maximize the objective function f(y₁, y₂, y₃) = 8 y₁ + 15 y₂ + 17 y₃ (note this is a function of three variables) subject to only two constraints:

f2[x_, y_, z_] := 8 x + 15 y + 17 z;

gg1[y1_, y2_, y3_] := 2 y1 + 5 y2 + 3 y3 <= 120;

gg2[y1_, y2_, y3_] := 2 y1 + 3 y2 + 8 y3 <= 100;

FindMaximum[{f2[y1, y2, y3], gg1[y1, y2, y3], gg2[y1, y2, y3], y1 >= 0, y2 >= 0, y3 >= 0}, {y1, y2, y3}]

MatrixForm[bb]

MatrixForm[y]

|

pt6 = Graphics3D[{Red, PointSize[.03], Point[{y1, y2, y3}]} /.

soln3[[2]]];

cntrPlt3 =

ContourPlot3D[{8 *y1 + 15*y2 + 17*y3 == 430,

2*y1 + 5*y2 + 3*y3 == 120, 2*y1 + 3*y2 + 8*y3 == 120}, {y1, 0,

70}, {y2, 0, 50}, {y3, 0, 50}, AxesLabel -> {"y1", "y2", "y3"},

Ticks -> {{35}, {10}, Automatic},

FaceGrids -> {{{0, 0, -1}, {{35}, {10}}}},

FaceGridsStyle -> Directive[Orange, Thick, Dashed],

PlotLegends -> {"Obj Fct", "constraint-1",

"constraint-2\n(because of viewpoint\nshows as only a line)"},

ViewPoint -> {1.3244723188775946`, -3.1106692576486226`,

0.13967765049131722`},

ContourStyle -> {{Red, Opacity[0.3]}, {Blue, Opacity[0.3]}, {Green,

Opacity[0.3]}}];

Labeled[Show[cntrPlt3,

pt6], "Figure 12.6: Optimum point (red), Objective function\nand \

Constraint planes"]

|

|

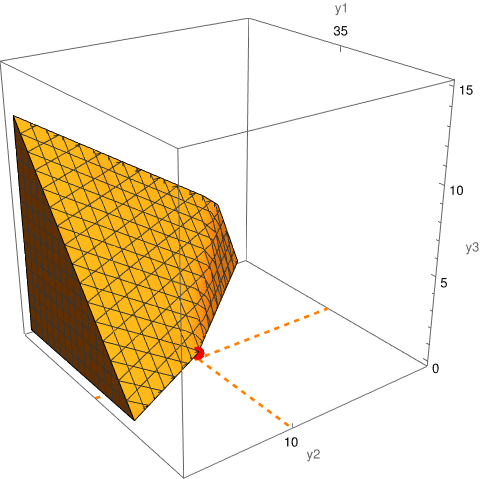

regPlt2 =

RegionPlot3D[{2 y1 + 5 y2 + 3 y3 <= 120 &&

2 y1 + 3 y2 + 8 y3 <= 100 && y1 >= 0 && y2 >= 0 && y3 >= 0}, {y1,

0, 70}, {y2, 0, 25}, {y3, 0, 15},

AxesLabel -> {"y1", "y2", "y3"}, Ticks -> {{35}, {10}, Automatic},

FaceGrids -> {{{0, 0, -1}, {{35}, {10}}}},

FaceGridsStyle -> Directive[Orange, Thick, Dashed],

ViewPoint -> {2.4853814757635058`, -1.8372343399481694`,

1.3774791831627913`}];

Labeled[Show[regPlt2,

pt6], "Figure 12.7: Optimum point (red) at edge of feasible region"]

|

Reconciling the two

- Axler, Sheldon Jay (2015). Linear Algebra Done Right (3rd ed.). Springer. ISBN 978-3-319-11079-0.

- Halmos, Paul Richard (1974) [1958]. Finite-Dimensional Vector Spaces (2nd ed.). Springer. ISBN 0-387-90093-4.

- Katznelson, Yitzhak; Katznelson, Yonatan R. (2008). A (Terse) Introduction to Linear Algebra. American Mathematical Society. ISBN 978-0-8218-4419-9.

- Treil, S., Linear Algebra Done Wrong.

- Wikipedia, Dual space/