Left and Right Inverse matrices

Theoretical Issues regarding Inverse Matrices

Now we answer a natural question how to find an inverse matrix. We consider some theoretical results developed by mathematical community that have mostly theoretical importance for this issue. Practical computation of matrix inverses is based on Gauss--Jordan elimination procedure that is covered in a special section.

We start our theoretical journey with cofactor method that requires another definition of minor introduced by the English mathematician James Sylvester in 1850.

We consider a nonsingular 4 × 4 matrix \[ {\bf A} = \begin{bmatrix} -2& \phantom{-}1& -1& \phantom{-}2 \\ -1& \phantom{-}2& -1& \phantom{-}1 \\ \phantom{-}2& -4& \phantom{-}1& -1 \\ \phantom{-}3& \phantom{-}2& \phantom{-}1& -2 \end{bmatrix} . \] Although Mathematica has a dedicated command to calculate the adjugate matrix, we prefer to show all steps in its computation. However, you must be prepared for bewildering, tedious complexity. Calculating the inverse of a 4x4 matrix is a bit laborious but let's break it down into steps. The steps to calculate the inverse of a matrix are as follows:

- Calculate the determinant of the matrix. If the determinant is 0, the matrix does not have an inverse.

- Calculate the matrix of minors.

- Co-factor the matrix of minors.

- Transpose the co-factor matrix.

- Divide each element of the transposed matrix by the determinant.

A = {{-2, 1, -1, 2}, {-1, 2, -1, 1}, {2, -4, 1, -1}, {3, 2, 1, -2}}

2. Calculate the matrix of minors---it is of size 4 × 4. For each element in the matrix, remove the row and column it's in. The determinant of the resulting 3 × 3 matrix is the minor of that element.

For this exercise to be consistent we need to establish a notation convention. Let's define a symbolic 4 x 4 matrix, M:

Symbolize[ParsedBoxWrapper[SubscriptBox["_", "_"]]]; mSub = Table[Subscript[Symbol["m"], i, j], {i, 4}, {j, 4}]

Having already checked the Determinant, we move to step 2: Calculate the matrix of minors. Each of the 16 cells in the original matrix, A, will have its element replaced by a particular Minor. To find that number, for each element in the matrix, remove the row and column it's in. The determinant of the resulting, smaller, matrix is the minor for that element.

Although Mathematica automatically computes the determinants of each element's minor and places those determinants in a matrix, we perform these operations manually. Once done, you will never want to do it again and you will appreciate use of a computer algebra system.

We need to create a 3x3 "matrix of minors". Imagine if, for each cell, you ignore the other values in the column and row for that cell you would have then remaining a smaller 3x3 matrix:A11 = A1[[All, 2 ;; 4]]

| 🟥 | |||

| 2 | -1 | 1 | |

| -4 | 1 | -1 | |

| 2 | 1 | -2 |

The second Minor (Subscript[A, 1,2]) is evaluated as folows. Next, we eliminate the first row and second column from matrix A.

| 🟥 | |||

| -1 | -1 | 1 | |

| 2 | 1 | -1 | |

| 3 | 1 | -2 |

The corresponding matrix \[ {\bf A}_{12} = \begin{bmatrix} -1&-1&\phantom{-}1 \\ \phantom{-}2&\phantom{-}1&-1 \\ \phantom{-}3&\phantom{-}1&-2 \end{bmatrix} , \qquad \det {\bf A}_{12} = -1 , \] has determinant

A1t = Transpose[A1];

A12 = Transpose[{A1t[[1]], A1t[[3]], A1t[[4]]}];

Det[A12]

| 🟥 | |||

| -1 | 2 | 1 | |

| 2 | -4 | -1 | |

| 3 | 2 | -2 |

Det[A13]

| 🟥 | |||

| -1 | 2 | -1 | |

| 2 | -4 | 1 | |

| 3 | 2 | 1 |

Det[A14]

Now we work out the second row.

| 1 | -1 | 2 | 🟥 |

| -4 | 1 | -1 | |

| 2 | 1 | -2 |

A21 = A2[[All, 2 ;; 4]]

Minor for (2, 2)-cell is

| -2 | -1 | 2 | |

| 🟥 | |||

| 2 | 1 | -1 | |

| 3 | 1 | -2 |

A2t = Transpose[A2];

A22 = Transpose[{A2t[[1]], A2t[[3]], A2t[[4]]}];

Det[A22]

Similarly, we have

| -2 | 1 | 2 | |

| 🟥 | |||

| 2 | -4 | -1 | |

| 3 | 2 | -2 |

A2t = Transpose[A2];

A23 = Transpose[{A2t[[1]], A2t[[2]], A2t[[4]]}];

Det[A23]

| -2 | 1 | -1 | |

| 🟥 | |||

| 2 | -4 | 1 | |

| 3 | 2 | 1 |

A2t = Transpose[A2];

A24 = Transpose[{A2t[[1]], A2t[[2]], A2t[[3]]}];

Det[A24]

Reaching the halfway point, we can again check against Mathematica's built in function at this stage. First, let's assemble our individual minors.

| 1 | -1 | 2 | |

| 2 | -1 | 1 | |

| 🟥 | |||

| 2 | 1 | -2 |

| -2 | -1 | 2 | |

| -1 | -1 | 1 | |

| 🟥 | |||

| 3 | 1 | -2 |

| -2 | 1 | 2 | |

| -1 | 2 | 1 | |

| 🟥 | |||

| 3 | 2 | -2 |

| -2 | 1 | -1 | |

| -1 | 2 | -1 | |

| 🟥 | |||

| 3 | 2 | 1 |

| 1 | -1 | 2 | |

| -4 | 1 | -1 | |

| 2 | 1 | -2 | |

| 🟥 |

| -2 | -1 | 2 | |

| -1 | -1 | 1 | |

| 2 | 1 | -1 | |

| 🟥 |

| -2 | 1 | 2 | |

| -1 | 2 | 1 | |

| 2 | -4 | -1 | |

| 🟥 |

| -2 | 1 | -1 | |

| -1 | 2 | -1 | |

| 2 | -4 | 1 | |

| 🟥 |

Finally, we are now in a position to make the matrix of minors for our original matrix A based on the names we have given each one. We than can compare it with the built-in Mathematica code for Minors[ ]:

With some machinations we can force Mathematica to agree with us!

3. Co-factor the matrix of minors. Apply a checkerboard of minuses to the "Matrix of Minors". Starting with a plus for the top left, alternate plus and minus through the matrix.

4. Transpose the co-factor matrix. Swap the elements of the rows and columns.

\[ \mbox{Co-factors}^{\mathrm T} = \begin{bmatrix} 2&\phantom{-}3& \phantom{-}3&2 \\ 1&-1&-1 &1 \\ 8&-13&-3&3 \\ 8& -3&\phantom{-}2&3 \end{bmatrix} . \]5. Divide each element of the transposed matrix by the determinant. This will result in the inverse of the original matrix.

The formula for A−1 in the following theorem first appeared in a more general form in Arthur Cayley's Memoir on the Theory of Matrices.

Theorem 10: (A. Cayley, 1858) The inverse of a 2-by-2 matrix is given by the formula: \[ \begin{bmatrix} a&b \\ c&d \end{bmatrix}^{-1} = \frac{1}{ad-bc} \begin{bmatrix} \phantom{-}d&-b \\ -c&\phantom{-}a \end{bmatrix} , \qquad ad \be bc . \]

Theorem 11: The inverse of a square matrix A is the transpose of the cofactor matrix times the reciprocal of the determinant of A:

Algorithm for calculating inverse matrix using Theorem 11: The inverse of a square matrix A is obtained by performimh four steps:

-

Calculating the matrix of Minors; namely, for each entry of the matrix A:

- ignore the values on the current row and column,

- calculate the determinant of the matrix built from the remaining values.

- Then turn matrix of Minors into the matrix of Cofactors. This means that you need to multiply every (i, j)-entry of the matrix of Minors by (−1)i + j.

- Calculate the Adjugate to A, denoted as Adj(A). This requires to apply transposition to the matrix of Cofactors.

- Multiply the Adjugate matrix by the reciprocal of the determinant of A.

eq2 = a*beta + b*delta == 0

eq3 = c*alpha + d*gamma == 0

eq4 = c*beta + d*delta == 1

Solve[{eq1, eq2, eq3, eq4}, {alpha, beta, gamma, delta}]

K = {{p,q,r}, {u,v,w}, {x,y,z}};

P = A.K;

Solns = Simplify[Solve[{P == IdentityMatrix[3]}, {p,q,r,u,v,w,x,y,z}]]

The cofactor matrix can be computed in the Wolfram language using

(-1)^(i+j) Det[Drop[Transpose[

Drop[Transpose[m], {j}]], {i} ]]

Map[Reverse, Minors[m], {0, 1}]

CofactorMatrix[m_List?MatrixQ] :=

MapIndexed[#1 (-1)^(Plus @@ #2)&, MinorMatrix[m],{2}]

Corollary 2: A square matrix A is invertible (nonsingular) if and only if its determinant det(A) ≠ 0.

Det[A]

Now we compute the inverse matrix based on our knowledge how to solve systems of linear equations! If A is an n × n nonsingular matrix, then for any column vector b, which is n × 1 matrix, the linear system A x = b has a unique solution. Partition the identity matrix In into its columns that we denote as \( {\bf e}_i , \ i=1,2,\ldots , n . \) Since the identity matrix can be represented as an array of column-vectors \( {\bf I}_n = \left[ {\bf e}_1 \ {\bf e}_2 \ \cdots \ {\bf e}_n \right] , \) every linear equation \( {\bf A}\,{\bf x} = {\bf e}_i \) has a unique solution for each i. Hence, we have

The inverse matrix can be determined using the resolvent method (see Chapter 8 in Dobrushkin's book for detail), which is based on the formula:

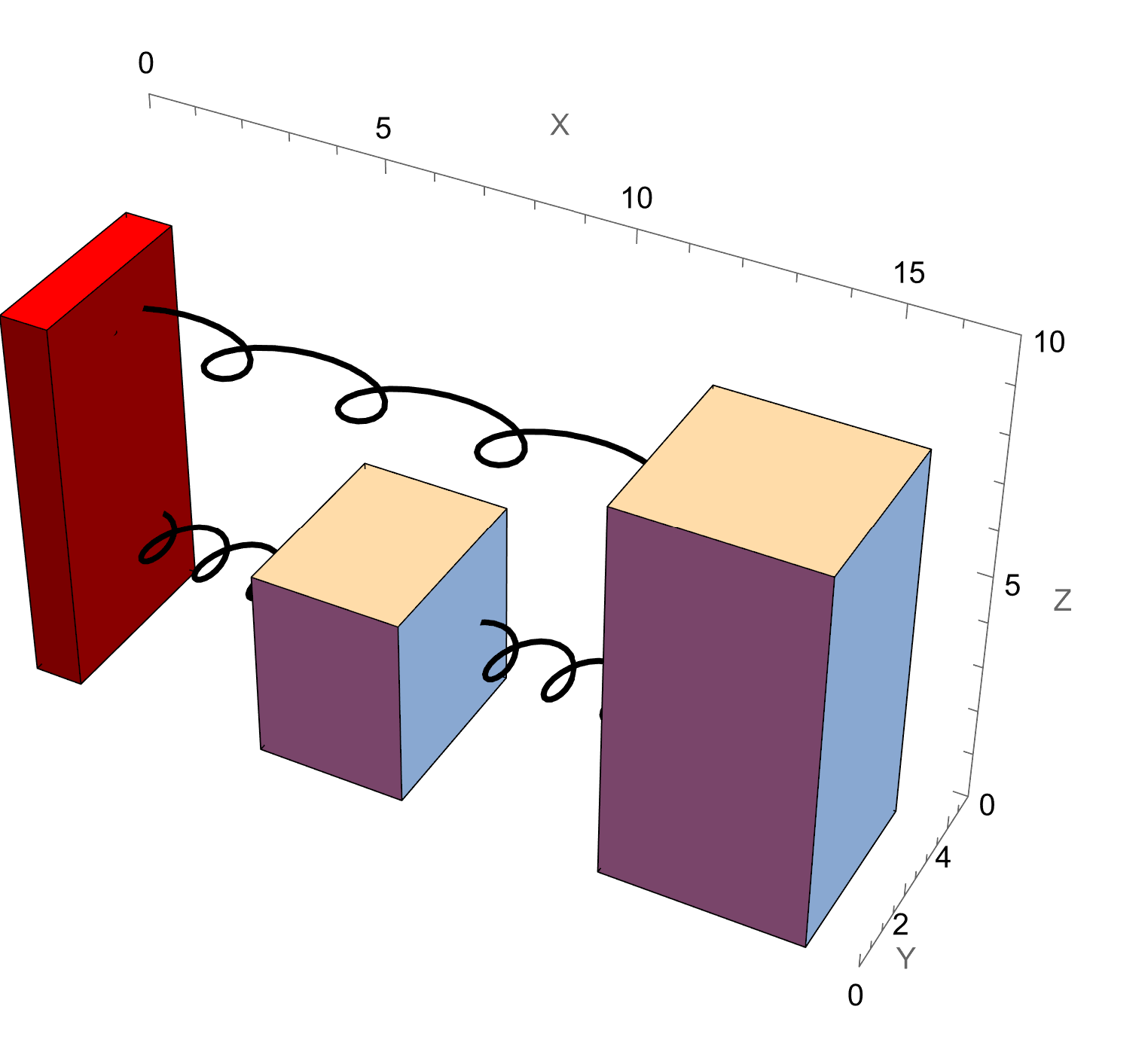

mass1 = Graphics[{Orange, Rectangle[{1, 0}, {1.5, 0.5}]}, AspectRatio -> 1] ;

mass2 = Graphics[{Pink, Rectangle[{3.0, 0}, {3.5, 2.0}]}, AspectRatio -> 1] ;

|

|

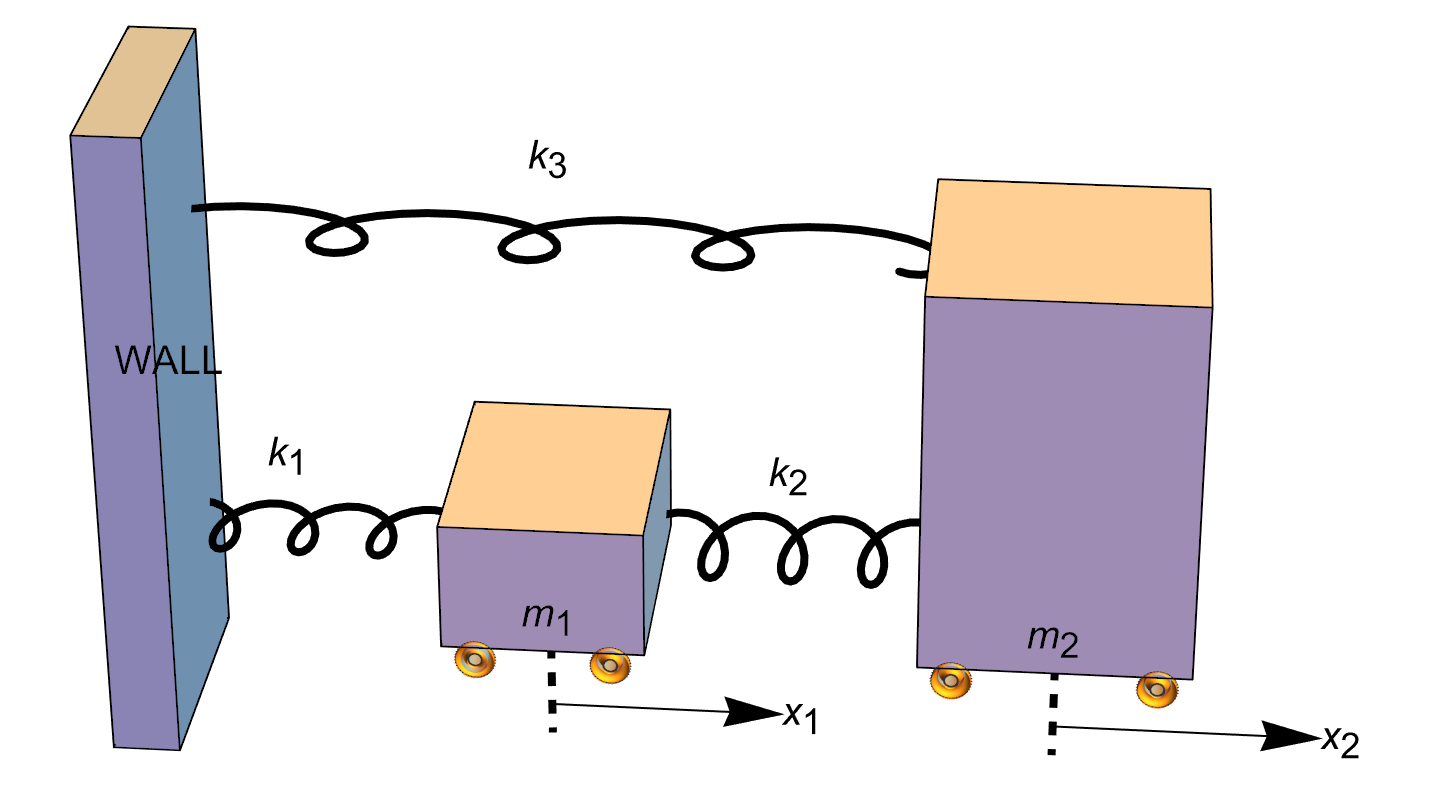

According to Hooke’s Law, the force F required to stretch or compress a spring with spring constant k by a distance x is given by \[ F = k\,x . \] Taking into account the direction of force applied to each mass by the spring, we can write an equation for the force acting on each spring. The mass m₁ is being acted upon by two springs with spring constants k₁ and k₂. A displacement of x₁ by mass m₁ will cause the spring k₁ to stretch or compress depending on if x₁ is positive or negative. In either case, by Hooke’s Law, the force acting upon mass m₁ by spring k₁ is \[ - k_1 x_2 . \] We needed to include the negative sign as if x₁ were positive, then the force will need to be negative as the spring will be trying to pull the mass back.

How much spring k₂ will be stretched or compressed depends on displacements x₁ and x₂. In particular, it will be stretched by x₂ − x₂. Hence, the force acting on mass m₁ by spring k₂ is \[ k_2 \left( x_2 - x_1 \right) . \] For the system to be in equilibrium, the sum of the forces acting on mass m₁ must equal 0. Thus, we have \[ f_1 - k_1 x_1 + k_2 \left( x_2 - x_1 \right) = 0 . \]

Mass m₂ is being acted upon by springs k₂ and k₃. Using similar analysis, we find that \[ f_2 - k_3 x_2 -k_2 \left( x_2 - x_1 \right) = 0 . \] Rearranging this equations gives \[ \begin{split} k_1 x_1 + k_2 \left( x_1 - x_2 \right) &= f_1 , \\ k_2 \left( x_2 - x_1 \right) + k_3 x_2 &= f_1 . \end{split} \]

We want to find the forces f₁ and f₂ acting on the masses m₁ and m₂ given displacements x₁ and x₂. By Hooke’s Law, we find that the forces f₁ and f₂ satisfy Using matrix/vector multiplication, we can rewrite this system as \[ {\bf K}\,{\bf x} = {\bf f}, \qquad {\bf K} = \begin{bmatrix} k_1 + k_2 & - k_2 \\ -k_2 & k_2 + k_3 \end{bmatrix}, \quad {\bf x} = \begin{bmatrix} x_1 \\ x_2 \end{bmatrix}, \quad {\bf f} = \begin{bmatrix} f_1 \\ f_2 \end{bmatrix} . \] Matrix K is known as the stiffness matrix of the system. In order to find displacements, we multiply both sides of the latter equation by K−1 to obtain \[ {\bf K}^{-1} = \frac{1}{k_1 k_2 + k_1 k_3 + k_2 k_3} \begin{bmatrix} k_2 + k_3 & k_2 \\ k_2 & k_1 + k_2 \end{bmatrix} . \]

- Anton, Howard (2005), Elementary Linear Algebra (Applications Version) (9th ed.), Wiley International

- Beezer, R.A., A First Course in Linear Algebra, 2017.

- Dobrushkin, V.A., Applied Differential Equations. The Primary Course, second edition, CRC Press2022.

- Fadeev--LeVerrier algorithm, Wikipedia.

- Frame, J.S., A simple recursion formula for inverting a matrix, Bulletin of the American Mathematical Society, 1949, Vol. 55, p. 1045. doi:10.1090/S0002-9904-1949-09310-2

- Greenspan, D., Methods of matrix inversion, The American mathematical Monthly, 1955, Vol. 62, No. pp. 303--318.

- Karlsson, L., Computing explicit matrix inverses by recursion, Master's Thesis in Computing Science, 2006.

- Lightstone, A.H., Two methods of inverting matrices, Mathematics Magazine, 1968, Vol. 41, No. 1, pp. 1--7.