The Wolfram Mathematica notebook containing the code that produces all the Mathematica output on this web page may be downloaded at this link. Caution: the notebook is meant to be evaluated cell by cell, sequentially, from top to bottom. Because variable names are reused in later evaluations, earlier code may no longer render properly once subsequent code has been evaluated. To fix this, return to the first Clear[ ] expression above the expression that no longer works, and re-evaluate from that point through to the expression.

Remove[ "Global`*"] // Quiet (* remove all variables *)

Linear algebra is primarily concerned with two types of mathematical objects: vectors and their transformations, or matrices. These objects are common in all branches of science related to physics, engineering, and economics, and, through computer technologies, in almost all other branches of science. Since matrices are built from vectors, this section focuses on the latter by presenting basic vector terminology and the corresponding concepts. Fortunately, we have proper symbols for their computer manipulations.

There are two approaches in the scientific literature to defining vectors. One is abstract and is typical of linear algebra texts (you will meet it in Part 3 of this tutorial); the other, common in engineering and physics, is based on a geometrical interpretation or a coordinate definition, in which vectors carry sequences of numbers. Computer science folks sit on both chairs because they utilize both interpretations of vectors. Moreover, they introduced abstract analogues of vectors: lists and arrays, which are now widely used in mathematical books. We all observe a shift in science brought about by computer science, including its impact on classical mathematics.

Vectors

Mathematicians distinguish between vector and scalar (pronounced “SKAY-lur”) quantities. You’re already familiar with scalars: scalar is the technical term for an ordinary number. In this course, we use four specific sets of scalars: integers ℤ, rational numbers ℚ, real numbers ℝ, and complex numbers ℂ. We denote any one of these four sets by the symbol 𝔽. By a vector we mean a list or finite sequence of numbers. We employ the word scalar when we wish to emphasize that a particular quantity is not a vector quantity. For example, as we will discuss shortly, “velocity” and “displacement” are vector quantities, whereas “speed” and “distance” are scalar quantities.

In this section, we focus on the algebraic definition of vectors as arrays of numbers and put their geometrical interpretation on the back burner (Part 3).

It wasn’t until the early 1600s that thermometry began to come into its own. The famous Italian astronomer and physicist Galileo Galilei (1564--1642), or possibly his friend the physician Santorio, likely came up with an improved thermoscope around 1593: An inverted glass tube placed in a bowl full of water or wine. Santorio apparently used a device like this to test whether his patients had fevers. Shortly after the turn of the 17th century, English physician Robert Fludd also experimented with open-air wine thermometers.

The first recorded instance of anyone thinking to create a universal scale for thermoscopes was in the early 1700s. In fact, two people had this idea at about the same time. One was a Danish astronomer named Ole Christensen Rømer, who had the idea to select two reference points—the boiling point of water and the freezing point of a saltwater mixture, both of which were relatively easy to recreate in different labs—and then divide the space between those two points into 60 evenly spaced degrees. The other was England’s revolutionary physicist and mathematician Isaac Newton, who announced his own temperature scale, in which 0 was the freezing point of water and 12 was the temperature of a healthy human body, the same year that Rømer did. (Newton likely developed this admittedly limited scale to help himself determine the boiling points of metals, whose temperatures would be far higher than 12 degrees.)

After a visit to Rømer in Copenhagen, the Dutch-Polish physicist Daniel Fahrenheit (1686--1736) was apparently inspired to create his own scale, which he unveiled in 1724. His scale was more fine-grained than Rømer’s, with about four times the number of degrees between water’s boiling and freezing points. Fahrenheit is also credited as the first to use mercury inside his thermometers instead of wine or water. Though we are now fully aware of its toxic properties, mercury is an excellent liquid for indicating changes in temperature.

Originally, Fahrenheit set 0 degrees as the freezing point of a solution of salt water and 96 as the temperature of the human body. But the fixed points were changed so that they would be easier to recreate in different laboratories, with the freezing point of water set at 32 degrees and its boiling point becoming 212 degrees at sea level and standard atmospheric pressure.

But this was far from the end of the development of important temperature scales. In the 1730s, two French scientists, René Antoine Ferchault de Réaumur (1683--1757) and Joseph-Nicolas Delisle (1688--1768), each invented their own scales. Réaumur’s set the freezing point of water at 0 degrees and the boiling point of water at 80 degrees, convenient for meteorological use, while Delisle chose to set his scale “backwards,” with water’s boiling point at 0 degrees and 150 degrees (added later by a colleague) as water’s freezing point.

A decade later, Swedish astronomer Anders Celsius (1701--1744) created in 1742 his eponymous scale, with water’s freezing and boiling points separated by 100 degrees—though, like Delisle, he also originally set them “backwards,” with the boiling point at 0 degrees and the ice point at 100. (The points were swapped after his death.) In 1745, Carolus Linnaeus (1707--1778) of Uppsala, Sweden, suggested that things would be simpler if we made the scale range from 0 (at the freezing point of water) to 100 (water’s boiling point), and called this scale the centigrade scale. (This scale was later abandoned in favor of the Celsius scale, which is technically different from centigrade in subtle ways that are not important here.) Notice that all of these scales are relative—they are based on the freezing point of water, which is an arbitrary (but highly practical) reference point. A temperature reading of x°C basically means “x degrees hotter than the temperature at which water freezes.”

Then, in the middle of the 19th century, British physicist William Thomson, Lord Kelvin (1824--1907), became interested in the idea of “infinite cold” and attempted to calculate it. In 1848, he published a paper, On an Absolute Thermometric Scale, which stated that this absolute zero was, in fact, -273 degrees Celsius. (It is now set at -273.15 degrees Celsius.)

Loudness:

Loudness is usually measured in decibels (abbreviated dB). To be more precise, decibels are used to measure the ratio of two power levels. If we have two power levels P₁ and P₂, then the difference in decibels between the two power levels is \[ 10\,\log_{10} \left( \frac{P_2}{P_1} \right) \ \mbox{dB} . \] So, if P₂ is about twice the level of P₁, then the difference is about 3 dB, since \( 10\,\log_{10} 2 \approx 3.01 . \) Notice that this is a relative system, providing a precise way to measure the relative strength of two power levels, but not a way to assign a number to one power level. ■

Vectors and Points

The dimension of a vector tells how many numbers the vector contains. Vectors may be of any positive dimension, including one. In fact, a scalar can be considered a one-dimensional (1D for short) vector. When writing a vector, mathematicians list the numbers surrounded by square brackets or parentheses, with entries usually separated by commas or just spaces. There are two common representations of vectors, as rows or as columns, and we use both of them: a vector written horizontally is called a row vector, and a vector written vertically is called a column vector.

When studying matrices, you will see that vectors can also be represented by diagonal matrices. However, we concentrate on three types of vector representations: points (n-tuples), row vectors, and column vectors. For example, the following three vectors can be treated differently by distinct computer solvers:

Actually, row and column vectors are stored in computer memory as 1 × n and n × 1 matrices, respectively. Therefore, we denote the set of all row vectors with n entries as \( \mathbb{F}^{1 \times n} \) or \( \mathbb{F}^{1,n} \) and the set of all column vectors with n entries as \( \mathbb{F}^{n \times 1} \) or \( \mathbb{F}^{n,1} . \)
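The three representations can be compared directly in Mathematica. The following is a minimal sketch; the names v, row, and col are illustrative and do not come from the notebook.

```mathematica
(* The same entries stored as an n-tuple, a 1 x n matrix, and an n x 1 matrix *)
v = {1, 2, 3};            (* plain list: an n-tuple *)
row = {v};                (* {{1, 2, 3}}: a 1 x 3 matrix *)
col = Transpose[{v}];     (* {{1}, {2}, {3}}: a 3 x 1 matrix *)

Dimensions[v]     (* {3} *)
Dimensions[row]   (* {1, 3} *)
Dimensions[col]   (* {3, 1} *)
```

Dimensions reports the shape of each object, which is how a solver can tell the three forms apart even though they hold the same numbers.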

As you know, a point has a location but no real size or thickness. In order to identify the position of a point, we need to establish a global frame relative to which we specify its location. However, an “absolute” position does not exist. Every attempt to describe a position requires that we describe it relative to something else. Any description of a position is meaningful only in the context of some (typically “larger”) reference frame. Theoretically, we could establish a reference frame encompassing everything in existence and select a point to be the “origin” of this space, thus defining the “absolute” coordinate space. Luckily for us, absolute positions in the universe aren’t important. Do you know your precise position in the universe right now?

The term “Cartesian” is just a fancy word for “rectangular.” If you have ever played chess, you have some exposure to two-dimensional (2D) Cartesian coordinate spaces.

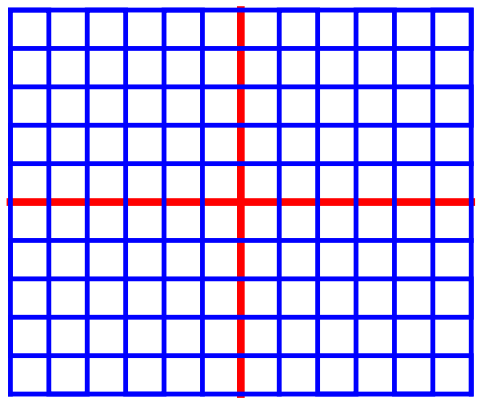

Let’s imagine a fictional city named Cartesia. When the Cartesia city planners were laying out the streets, they were very particular, as illustrated in the map of Cartesia in Figure 1. As you can see from the map, Center Avenue runs east-west through the middle of town. All other east-west avenues (parallel to Center Avenue) are named based on whether they are north or south of Center Avenue, and how far they are from Center Avenue. Examples of avenues that run east-west are North 3rd and South 15th Avenue.

The other streets in Cartesia run north-south. Division Street runs north-south through the middle of town. All other north-south streets (parallel to Division Street) are named based on whether they are east or west of Division Street, and how far they are from Division Street.

Of course, the map of Cartesia is an idealization of a rectangular plane: the Cartesian plane has no boundary, and its streets have no width, so one can be drawn through any point.

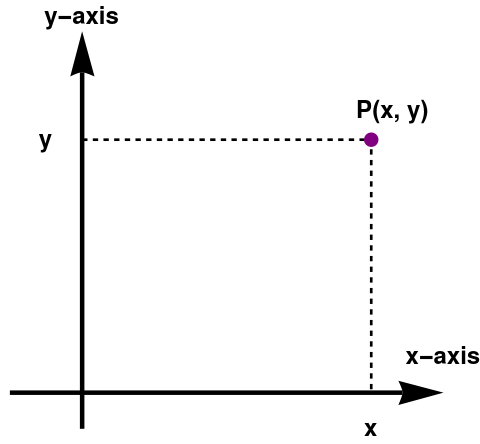

We consider points as elements of the Cartesian product \( \mathbb{F}^n = \mathbb{F} \times \mathbb{F} \times \cdots \times \mathbb{F} \) of n copies of the scalar field. Then every point P from \( \mathbb{R}^n \) has n coordinates that specify its position:

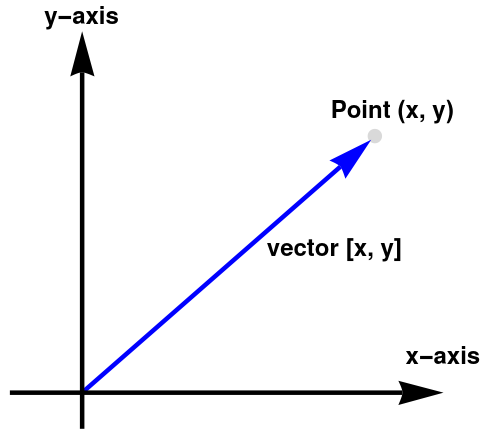

Since positions are relative to some larger frame, points are relative as well: they are relative to the origin of the coordinate system used to specify their coordinates. This leads us to the relationship between points and vectors. The following figure illustrates how the point (x, y) is related to the vector [x, y], given arbitrary values for x and y.

In many cases, displacements are from the origin, and so there will be no distinction between points and vectors because they both share the same coordinates. However, we often deal with quantities that are not relative to the origin, or any other point for that matter. In these cases, it is important to visualize these quantities as an arrow rather than a point.

The following construction shows how the Cartesian product \( \mathbb{F}^n \) of n copies of the scalar field can be turned into a vector space by introducing arithmetic operations inherited from the scalar field.
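One way to make this construction explicit, sketched here in the notation used elsewhere on this page (the symbols u, v, and c are illustrative), is to define both operations componentwise. For \( {\bf u} = \left( u_1 , u_2 , \ldots , u_n \right) \) and \( {\bf v} = \left( v_1 , v_2 , \ldots , v_n \right) \) in \( \mathbb{F}^n \) and a scalar c from 𝔽:

\[ {\bf u} + {\bf v} = \left( u_1 + v_1 , u_2 + v_2 , \ldots , u_n + v_n \right) , \qquad c\,{\bf u} = \left( c\,u_1 , c\,u_2 , \ldots , c\,u_n \right) . \]

Each component is computed with the addition and multiplication of 𝔽, which is the sense in which the operations are inherited from the scalar field.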

When we wish to refer to the individual components in a vector, we use subscript notation. In math literature, integer indices are used to access the elements: the i-th component of a vector v is written vᵢ, with i running from 1 to n.

Every point on the plane has two coordinates P(x, y) relative to the origin of the coordinate system. This point can also be identified by the vector that starts at the origin and points to it. This means that the vector can also be uniquely identified by the same pair v = [x, y]. This establishes a one-to-one correspondence between points and vectors.
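In Mathematica, the subscript notation corresponds to the Part function, written with double square brackets. The sketch below uses an arbitrary sample vector v chosen for illustration.

```mathematica
(* Part, written v[[i]], extracts the i-th component; indices start at 1 *)
v = {7, -2, 5};

v[[1]]      (* 7 *)
v[[3]]      (* 5 *)
v[[-1]]     (* 5: negative indices count from the end *)
Length[v]   (* 3: the dimension of the vector *)
```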

Vector Properties

The term vector appears in a variety of mathematical and engineering contexts, which we will discuss in Part 3 (Vector Spaces). Until then, vector will mean an ordered set (list) of numbers. Generally speaking, the concept of a vector may include infinite sequences of numbers or other objects. However, in this part of the tutorial, we consider only finite lists. There is no universal notation for vectors because of the diversity of their applications. There are many ways to represent vectors, including columns, rows, and n-tuples.

For any given vector dimension, there is a special vector, known as the zero vector, that has zeroes in every position.
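As a small illustration, a zero vector of any dimension can be built in Mathematica with ConstantArray. The dimension 4 and the names zero and v are arbitrary choices for this sketch.

```mathematica
(* Zero vector of dimension 4: every entry is 0 *)
zero = ConstantArray[0, 4]      (* {0, 0, 0, 0} *)

(* Adding the zero vector leaves any vector of the same dimension unchanged *)
v = {3, -1, 4, 2};
v + zero == v                   (* True *)
```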

These unit vectors can be written in column form
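Assuming that the unit vectors meant here are the standard ones (a single 1 in one position and zeros elsewhere), the following sketch generates them in dimension 3 and converts one of them to column form. The names e1, e2, e3, and col1 are illustrative.

```mathematica
(* Standard unit vectors of dimension 3: a single 1, zeros elsewhere *)
e1 = UnitVector[3, 1]    (* {1, 0, 0} *)
e2 = UnitVector[3, 2]    (* {0, 1, 0} *)
e3 = UnitVector[3, 3]    (* {0, 0, 1} *)

(* Column form: each entry becomes its own row, giving a 3 x 1 matrix *)
col1 = Transpose[{e1}]   (* {{1}, {0}, {0}} *)
MatrixForm[col1]         (* displays e1 as a column *)

(* Together the unit vectors form the rows of the identity matrix *)
{e1, e2, e3} == IdentityMatrix[3]   (* True *)
```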

The entries of the vectors in our examples are integers, but they are suitable only for class presentations by lazy instructors like me. In real life, the set of integers ℤ appears mostly in kindergarten. Therefore, vector entries could be any numbers, for instance, real numbers denoted by ℝ, or complex numbers ℂ. However, humans and computers operate only with rational numbers ℚ as approximations of the fields ℝ or ℂ. Although the majority of our presentations involve integers for simplicity, the reader should understand that they can be replaced by arbitrary numbers from ℝ, ℂ, or ℚ. When it does not matter which set of numbers is utilized, which is usually the case, we denote it by 𝔽, and the reader could replace it with any field (ℝ, ℂ, or ℚ).

For our purposes, it is convenient to represent vectors as columns. This allows us to rewrite the given system of algebraic equations in compact form.

Remember that the form of vector representation as columns, rows, or n-tuples (parentheses and comma notation) depends on you. However, you must be consistent and use the same notation for addition or scalar multiplication. You cannot add a column vector and a row vector:

Our definition of vectors as lists of numbers includes one very important ingredient: scalars. We primarily use either the set of real numbers, denoted by ℝ, or the set of complex numbers, denoted by ℂ. However, computers operate only with rational numbers, denoted by ℚ. Since elements from these sets of scalars can be added, subtracted, multiplied, and divided (by a nonzero element), they are called fields. Any of these fields is denoted by 𝔽 (meaning ℝ, ℂ, or ℚ).
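The consistency requirement can be checked in Mathematica when row and column vectors are stored as 1 × n and n × 1 matrices. This is a sketch with arbitrary sample entries; the names row and col are illustrative.

```mathematica
(* Row and column vectors stored as matrices have different shapes *)
row = {{1, 2, 3}};          (* 1 x 3 *)
col = {{4}, {5}, {6}};      (* 3 x 1 *)

Dimensions[row] == Dimensions[col]   (* False: the shapes differ *)

(* Convert both vectors to a common shape before adding *)
row + Transpose[col]        (* {{5, 7, 9}}: both are 1 x 3 *)
Transpose[row] + col        (* {{5}, {7}, {9}}: both are 3 x 1 *)
```

Evaluating row + col directly does not produce a sum: Mathematica is expected to report that objects of unequal length cannot be combined and return the expression unevaluated.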

Portraits: Giusto Bellavitis, Michail Ostrogradsky, William Hamilton.

The concept of vector, as we know it today, evolved gradually over a period of more than 200 years. The Italian mathematician, senator, and municipal councilor Giusto Bellavitis (1803--1880) abstracted the basic idea in 1835. The idea of an n-dimensional Euclidean space for n > 3 appeared in a work on the divergence theorem by the Russian mathematician Michail Ostrogradsky (1801--1862) in 1836, in the geometrical tracts of Hermann Grassmann (1809--1877) in the early 1840s, and in a brief paper of Arthur Cayley (1821--1895) in 1846. Unfortunately, the first two authors were virtually ignored in their lifetimes. In particular, the work of Grassmann was quite philosophical and extremely difficult to read. The term vector was introduced by the Irish mathematician, astronomer, and mathematical physicist William Rowan Hamilton (1805--1865) as part of a quaternion.

Vectors can also be described algebraically. Historically, the first vectors were Euclidean vectors, which can be expanded through the standard basis vectors that serve as coordinate directions. Then any vector can be uniquely represented by a sequence of scalars called coordinates or components. The set of such ordered n-tuples of real numbers is denoted by \( \mathbb{R}^n . \) When scalars are complex numbers, the set of ordered n-tuples of complex numbers is denoted by \( \mathbb{C}^n . \) Motivated by these two approaches, we present the general definition of vectors.

Rewriting vectors u and v in column form, we have

- Compute u − v and 3u − 2v for each pair of vectors: \[ {\bf u} = \begin{bmatrix} -2 \\ \phantom{-}1 \end{bmatrix} , \quad {\bf v} = \begin{bmatrix} 3 \\ 2 \end{bmatrix} \qquad\mbox{and} \qquad {\bf u} = \begin{bmatrix} \phantom{-}1 \\ -1 \end{bmatrix} , \quad {\bf v} = \begin{bmatrix} \phantom{-}4 \\ -3 \end{bmatrix} . \]

- Write a system of equations that is equivalent to the given vector equation.

  - \[ x_1 \begin{bmatrix} \phantom{-}3 \\ -4 \end{bmatrix} + x_2 \begin{bmatrix} -1 \\ -2 \end{bmatrix} + x_3 \begin{bmatrix} \phantom{-}5 \\ -2 \end{bmatrix} = \begin{bmatrix} \phantom{-}1 \\ -1 \end{bmatrix}; \]

  - \[ x_1 \begin{bmatrix} 3 \\ 0 \\ 2 \end{bmatrix} + x_2 \begin{bmatrix} -1 \\ \phantom{-}3 \\ \phantom{-}5 \end{bmatrix} + x_3 \begin{bmatrix} -2 \\ \phantom{-}7 \\ \phantom{-}2 \end{bmatrix} = \begin{bmatrix} 5 \\ 1 \\ 3 \end{bmatrix}; \]

  - \[ x_1 \begin{bmatrix} 2 \\ 1 \\ 7 \end{bmatrix} + x_2 \begin{bmatrix} \phantom{-}3 \\ -2 \\ -5 \end{bmatrix} + x_3 \begin{bmatrix} \phantom{-}4 \\ -6 \\ \phantom{-}1 \end{bmatrix} = \begin{bmatrix} -5 \\ -4 \\ \phantom{-}2 \end{bmatrix} . \]

- Given \( \displaystyle {\bf u} = \begin{bmatrix} 2 \\ 1 \\ 3 \end{bmatrix} , \ {\bf v} = \begin{bmatrix} \phantom{-}3 \\ -1 \\ -5 \end{bmatrix} , \quad\mbox{and} \quad {\bf b} = \begin{bmatrix} -5 \\ 5 \\ h \end{bmatrix} . \) For what value of h is b a linear combination of vectors u and v?

- Given \( \displaystyle {\bf u} = \begin{bmatrix} 4 \\ 3 \\ 1 \end{bmatrix} , \ {\bf v} = \begin{bmatrix} \phantom{-}2 \\ -2 \\ -3 \end{bmatrix} , \quad\mbox{and} \quad {\bf b} = \begin{bmatrix} 8 \\ h \\ 9 \end{bmatrix} . \) For what value of h is b a linear combination of vectors u and v?

- Rewrite the system of equations in vector form:

  \[ \begin{split} 2x_1 - 3 x_2 + 7 x_3 &= -1 , \\ -5 x_1 -2 x_2 - 3 x_3 &= 2 , \\ 3x_1 + 2 x_2 + 4 x_3 &= 3 . \end{split} \]

- Let \( \displaystyle {\bf u} = \begin{bmatrix} 3 \\ 1 \end{bmatrix} , \ {\bf v} = \begin{bmatrix} \phantom{-}2 \\ -2 \end{bmatrix} , \quad\mbox{and} \quad {\bf b} = \begin{bmatrix} h \\ k \end{bmatrix} . \) Show that the linear equation x₁u + x₂v = b has a solution for any values of h and k.

- Mark each statement True or False.

  - Another notation for the vector (1, 2) is \( \displaystyle \begin{bmatrix} 1 \\ 2 \end{bmatrix} . \)

  - An example of a linear combination of vectors u and v is 2v.

  - Any list of six complex numbers is a vector in \( \mathbb{C}^6 . \)

  - The vector 2v results when a vector v + u is added to the vector v − u.

  - The solution set of the linear system whose augmented matrix is [ a₁ a₂ a₃ b ] is the same as the solution set of the vector equation x₁a₁ + x₂a₂ + x₃a₃ = b.
