Gauss--Seidel method

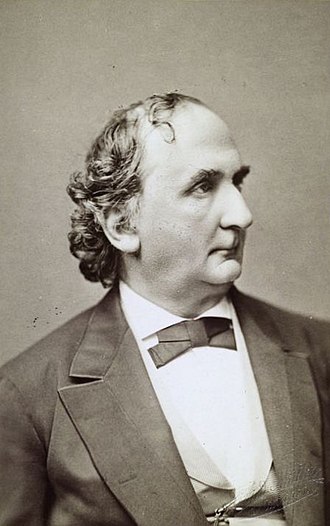

Philipp Ludwig von Seidel worked in analysis, probability theory, astronomy, and optics. Unfortunately, he suffered from eye problems and retired at a young age. The paper in which he described the method now known as Gauss Seidel was published in 1874.

The Gauss-Seidel method, which is a modification of the Jacobi algorithm, uses each new value as soon as it is available. This method is based on splitting the matrix A of Eq.(2) into three parts:

Inverse[S]

Although structure of the inverse to the lower triangular matrix S = Λ + L can be written explicitly, its practical implementation becomes problematic with size of matrix growth. The n × n matrix S−1 contains n divisions, and its entries contain alternated series, which could be a nightmare for numerical evaluations.

Instead of standard approach (5), the Gauss--Seidel method suggests to extract the strictly lower triangular matrix from S,

Now we demonstrate the iterative procedure above in more detail by showing step by step its application for solving the system of linear equations (2). The first coordinate of x(k+1) is given by Jacobi's formula

|

\begin{equation} \label{EqSeidel.8}

x_j^{(k+1)} = \frac{1}{a_{j,j}} \left( b_j - \sum_{i=1}^{j-1} a_{j,i} x_i^{(k+1)} - \sum_{i=j+1}^n a_{1,i} x_i^{(k)} \right) .

\end{equation}

|

|---|

It is interesting to compare the Jacobi and Gauss–Seidel iterations noting that the latter is using the coordinates of x(k+1) as soon as they are calculated rather than in the next iteration. The program for the Gauss–Seidel iteration is actually simpler than the program for the Jacobi iteration. The vectors x(k) and x(k+1) are both stored in the same vector, x. As a coordinate of x(k+1) is calculated, it replaces the corresponding coordinate of x(k).

Here is algorithm for Gauss-Seidel iteration scheme:

algorithm Gauss–Seidel method is

inputs: A, b

output: φ

Choose an initial guess φ to the solution

repeat until convergence

for i from 1 until n do

σ ← 0

for j from 1 until n do

if j ≠ i then

σ ← σ + 𝑎i,jφj

end if

end (j-loop)

φi ← (bi − σ) / 𝑎i,i

end (i-loop)

check if convergence is reached

end (repeat

input A and b

n is the size of A

while precision conditions do

for i = 1 : n do

s = 0

for j = 1 : n do

if j ≠ i then

s = s + 𝑎i,j xj

end if

end for

xi = (1/𝑎i,i) (bi − s)

end for

end while

Here is its mathematica implementation:

b = {12, 4, 14}; LinearSolve[A, b]

If you use column vector b instead of row vector, LinearSolve command should be applied as follows.

Jacobi's algorithm requires extraction of the diagonal matrix A = λ + M, where \[ {\bf A} = \begin{bmatrix} \phantom{-}4 & 2 & -2 \\ -2 & 5 & \phantom{-}2 \\ \phantom{-}4 & 3 & -2 \end{bmatrix} , \qquad \Lambda = \begin{bmatrix} 4 & 0 & \phantom{-}0 \\ 0 & 5 & \phantom{-}0 \\ 0 & 0 & -2 \end{bmatrix} , \quad {\bf M} = \begin{bmatrix} \phantom{-}0 & 2 & -2 \\ -2 & 0 & \phantom{-}2 \\ \phantom{-}4 & 3 & \phantom{-}0 \end{bmatrix} . \]

diag = DiagonalMatrix[{4, 5, -2}]

B = -Inverse[diag] . M

Upon choosing the initial guess to be zero, we obtain the first approximation to be \[ {\bf x}^{(1)} = {\bf d} = \begin{pmatrix} 3 \\ \frac{4}{5} \\ -7 \end{pmatrix} . \] The second iteration step yields \[ {\bf x}^{(2)} = {\bf d} + {\bf B}\,{\bf x}^{(1)} = \begin{pmatrix} - \frac{9}{10} \\ \frac{24}{5} \\ \frac{1}{5} \end{pmatrix} = \begin{pmatrix} -0.9 \\ 4.8 \\ 0.2 \end{pmatrix} . \]

x2 = d + B . d

| Matrix | A | Λ | L | U | b | x0 |

|---|---|---|---|---|---|---|

| \( \displaystyle \begin{pmatrix} 4 & 2 & -2 \\ -2 & 5 & 2 \\ 4 & 3 & -2 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 4 & 0& 0 \\ 0 & 5&0 \\ 0&0& -2 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 0&0&0 \\ -2&0&0 \\ 4& 3 & 0 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 0&2 & -2 \\ 0&0& 2 \\ 0&0&0 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 12 \\ 4 \\ 14 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 0 \\ 0 \\ 0 \end{pmatrix} \) | |

| Iterations | 0 | 1 | 2 | 3 | 4 | 5 |

| \( \displaystyle \begin{pmatrix} 0&0&0 \end{pmatrix} \) | \( \displaystyle \left( 3, \ \frac{4}{5}, \ -7 \right) \) | \( \displaystyle \left( -\frac{9}{10}, \ \frac{24}{5},\ \frac{1}{5} \right) \) | \( \displaystyle \left( \frac{7}{10},\ \frac{9}{25},\ -\frac{8}{5} \right) \) | \( \displaystyle \left( \frac{101}{50},\ \frac{43}{25},\ -\frac{253}{50} \right) \) | \( \displaystyle \left( -\frac{39}{100},\ \frac{454}{125}, \ -\frac{19}{50} \right) \) |

The errors of Jacobi's iterations at 50-th run are \[ \left\vert x_1^{(50)} -1 \right\vert = 0.039291 , \qquad \left\vert x_2^{(50)} -2 \right\vert = 0.0101124 , \qquad \left\vert x_3^{(50)} +2 \right\vert = 0.069383 . \]

We repeat calculations with 50 runs. It took less than a second to execute the code.

| Matrix | A | Λ | L | U | b | x0 |

|---|---|---|---|---|---|---|

| \( \displaystyle \begin{pmatrix} 4 & 2 & -2 \\ -2 & 5 & 2 \\ 4 & 3 & -2 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 4 & 0& 0 \\ 0 & 5&0 \\ 0&0& -2 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 0&0&0 \\ -2&0&0 \\ 4& 3 & 0 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 0&2 & -2 \\ 0&0& 2 \\ 0&0&0 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 12 \\ 4 \\ 14 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 0 \\ 0 \\ 0 \end{pmatrix} \) | |

| Iterations | 45 | 46 | 47 | 48 | 49 | 50 |

| {0.950528, 2.01566, -2.08244} |

{0.95095, 2.01319, -2.07545} |

{0.955682, 2.01056, -2.07832} |

{0.95556, 2.0136, -2.0728} |

{0.956802, 2.01134, -2.06848} |

{0.960089, 2.01011, -2.06938} |

Now we apply the Gauss--Seidel method with the same initial choice---the origin. At the first step, we have \begin{align*} x_1^{(1)} &= 3 - \frac{1}{2}\, x_2^{(0)} + \frac{1}{2}\, x_3^{(0)} = 3, \\ x_2^{(1)} &= \frac{4}{5} + \frac{2}{5}\, x_1^{(1)} - \frac{2}{5}\, x_3^{(0)} = 2 \\ x_3^{(1)} &= -7 + 2\,x_1^{(1)} + \frac{3}{2}\,x_2^{(1)} = 2 . \end{align*} Next iteration gives \begin{align*} x_1^{(2)} &= 3 - \frac{1}{2}\, x_2^{(1)} + \frac{1}{2}\, x_3^{(1)} = 3, \\ x_2^{(2)} &= \frac{4}{5} + \frac{2}{5}\, x_1^{(2)} - \frac{2}{5}\, x_3^{(1)} = \frac{6}{5} , \\ x_3^{(2)} &= -7 + 2\,x_1^{(2)} + \frac{3}{2}\,x_2^{(2)} = \frac{4}{5} \end{align*} We manually repeat a few iterations before application of looping procedure. \begin{align*} x_1^{(3)} &= 3 - \frac{1}{2}\, x_2^{(2)} + \frac{1}{2}\, x_3^{(2)} = \frac{14}{5} , \\ x_2^{(3)} &= \frac{4}{5} + \frac{2}{5}\, x_1^{(3)} - \frac{2}{5}\, x_3^{(2)} = \frac{8}{5} , \\ x_3^{(3)} &= -7 + 2\,x_1^{(3)} + \frac{3}{2}\,x_2^{(3)} = 1 , \end{align*} and \begin{align*} x_1^{(4)} &= 3 - \frac{1}{2}\, x_2^{(3)} + \frac{1}{2}\, x_3^{(3)} = \frac{27}{10} , \\ x_2^{(4)} &= \frac{4}{5} + \frac{2}{5}\, x_1^{(3)} - \frac{2}{5}\, x_3^{(3)} = \frac{37}{25} , \\ x_3^{(4)} &= -7 + 2\,x_1^{(4)} + \frac{3}{2}\,x_2^{(4)} = \frac{31}{50} ; \end{align*} \begin{align*} x_1^{(5)} &= 3 - \frac{1}{2}\, x_2^{(4)} + \frac{1}{2}\, x_3^{(4)} = \frac{257}{100} = 2.57 , \\ x_2^{(5)} &= \frac{4}{5} + \frac{2}{5}\, x_1^{(5)} - \frac{2}{5}\, x_3^{(4)} = \frac{79}{50} = 1.58 , \\ x_3^{(5)} &= -7 + 2\,x_1^{(5)} + \frac{3}{2}\,x_2^{(5)} = \frac{51}{100} = 0.51 . \end{align*} Sequential iterates are generated with the following Mathematica code:

| Matrix | A | Λ | L | U | b | x0 |

|---|---|---|---|---|---|---|

| \( \displaystyle \begin{pmatrix} 4 & 2 & -2 \\ -2 & 5 & 2 \\ 4&3&-2 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 4 & 0&0 \\ 0 & 5&0 \\ 0&0& -2 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 0&0&0 \\ -2&0&0 \\ 4&3&0 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 0&2&-2 \\ 0&0&2 \\ 0&0&0 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 12 \\ 4 \\ 14 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 0 \\ 0 \\ 0 \end{pmatrix} \) | |

| Iterations | 0 | 1 | 2 | 3 | 4 | 5 |

| {0, 0, 0} | \( \displaystyle \left\{ 3, \ 2,\ 2 \right\} \) | \( \displaystyle \left\{ 3,\ \frac{6}{5},\ \frac{4}{5} \right\} \) | \( \displaystyle \left\{ \frac{!4}{5},\ \frac{8}{5}, \ 1 \right\} \) | \( \displaystyle \left\{ \frac{27}{10},\ \frac{37}{25},\ \frac{31}{50} \right\} \) | \( \displaystyle \left\{ \frac{257}{100},\ \frac{79}{50},\ \frac{51}{100} \right\} \) |

We repeat GS-iterative procedure with 50 runs. It tAkes less than a second to execute 50 runs.

| Matrix | A | Λ | L | U | b | x0 |

|---|---|---|---|---|---|---|

| \( \displaystyle \begin{pmatrix} 4 & 2 & -2 \\ -2 & 5 & 2 \\ 4&3&-2 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 4 & 0&0 \\ 0 & 5&0 \\ 0&0& -2 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 0&0&0 \\ -2&0&0 \\ 4&3&0 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 0&2&-2 \\ 0&0&2 \\ 0&0&0 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 12 \\ 4 \\ 14 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 0 \\ 0 \\ 0 \end{pmatrix} \) | |

| Iterations | 45 | 46 | 47 | 48 | 49 | 50 |

| {1.08661, 1.97577, -1.86312} |

1.08055, 1.97747, -1.87269} |

{1.07492, 1.97904, -1.88159} |

{1.06968, 1.98051, -1.88987} |

The errors of the Gauss--Seidel iterations at 50-th run are \[ \left\vert x_1^{(50)} -1 \right\vert = 0.0602767 , \qquad \left\vert x_2^{(50)} -2 \right\vert = 0.0181273 , \qquad \left\vert x_3^{(50)} +2 \right\vert = 0.095263 . \] As a result of this numerical experiment we conclude that both methods, Jacobi and GS, are very fast and provide approximately the same accuracy, but Jacobi's iteration scheme gives slightly better approximation. Also, a usage of floating point instead of exact operations with rational numbers does not effect the time of execution.

| Matrix | A | Λ | L | U | b | x0 |

|---|---|---|---|---|---|---|

| \( \displaystyle \begin{pmatrix} 5 & -4 & 1 \\ 5 & 7 & -4 \\ 3&-7&8 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 5 & 0&0 \\ 0 &7&0 \\ 0&0& 8 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 0&0&0 \\ 5&0&0 \\ 3&-7&0 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 0&-4&1 \\ 0&0&-4 \\ 0&0&0 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 5 \\ 21 \\ -9 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 0 \\ 0 \\ 0 \end{pmatrix} \) | |

| Iterations | 0 | 1 | 2 | 3 | 4 | 5 |

| {0, 0, 0} | \( \displaystyle \left\{ 1, \ 3,\ -\frac{9}{8} \right\} \) | \( \displaystyle \left\{ \frac{29}{8},\ \frac{23}{14},\ \frac{9}{8} \right\} \) | \( \displaystyle \left\{ \frac{!17}{56},\ \frac{59}{56}, \ -\frac{67}{64} \right\} \) | \( \displaystyle \left\{ \frac{4597}{2240},\ \frac{713}{784},\ -\frac{221}{224} \right\} \) | \( \displaystyle \left\{ \frac{15091}{7840},\ \frac{3043}{3136},\ -\frac{2813}{2560} \right\} \) |

We repeat calculations with floating point arithmetic for 50 runs.

| Matrix | A | Λ | L | U | b | x0 |

|---|---|---|---|---|---|---|

| \( \displaystyle \begin{pmatrix} 5 & -4 & 1 \\ 5 & 7 & -4 \\ 3&-7&8 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 5 & 0&0 \\ 0 &7&0 \\ 0&0& 8 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 0&0&0 \\ 5&0&0 \\ 3&-7&0 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 0&-4&1 \\ 0&0&-4 \\ 0&0&0 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 5 \\ 21 \\ -9 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 0 \\ 0 \\ 0 \end{pmatrix} \) | |

| Iterations | 0 | 46 | 47 | 48 | 49 | 50 |

| {1.86139, 1.25743, -0.722772} |

{1.86139, 1.25743, -0.722772} |

{1.86139, 1.25743, -0.722772} |

{1.86139, 1.25743, -0.722772} |

{1.86139, 1.25743, -0.722772} |

1.86139, 1.25743, -0.722772} |

This example shows that the Gauss--Seidel method converges very slowly: after 50 runs it gives the answer with about 25% accuracy. So Jacobi's method performs much better.

U =U = {{0, -8, 5, -4}, {0, 0, -5, 3}, {0, 0, 0, -6}, {0, 0, 0, 0}} {{0, -8, 5, -4}, {0, 0, -5, 3}, {0, 0, 0, -6}, {0, 0, 0, 0}};

L = A - U

Eq.(6.3) is used to generate Gauss-Seidel iteration: \[ {\bf x}^{(k+1)} = {\bf L}^{-1} {\bf b} - {\bf L}^{-1} {\bf U}\,{\bf x}^{(k)} \qquad \iff \qquad {\bf x}^{(k+1)} = {\bf d} + {\bf B}\,{\bf x}^{(k)} , \qquad k=0,1,2,\ldots . \tag{6.4} \]

Matrix B has rank 3 and all its eigenvalues are real numbers: λ₁ = 1, λ₂ = 0, λ₃ ≈ -0.874609, λ₄ ≈ 0.181487. Therefore, we do not expect convergence of Gauss-Seidel iterations.

| n | 0 | 1 | 2 | 3 | 4 | 5 | 10 |

|---|---|---|---|---|---|---|---|

| x₁ | 0 | 1 | \( \displaystyle \frac{485}{189} \approx 2.56614 \) | 0.925058 | 2.31112 | 1.08992 | 1.94983 |

| x₂ | 0 | \( \displaystyle -\frac{3}{4} \) | \( \displaystyle -\frac{64}{189} \approx -0.338624 \) | 0.534972 | -0.243334 | 0.434793 | -0.0439163 |

| x₃ | 0 | \( \displaystyle -\frac{6}{7} \) | \( \displaystyle -\frac{95}{441} \approx -0.21542 \) | -0.291808 | -0.291808 | -0.733657 | -0.422114 |

| x₄ | 0 | \( \displaystyle \frac{20}{21} \) | \( \displaystyle \frac{950}{3969} \approx .239355 \) | 0.88474 | 0.324231 | 0.815174 | 0.469016 |

Running GS-iterations 50 times yields

| Matrix | A | Λ | L | U | b | x0 |

|---|---|---|---|---|---|---|

| \( \displaystyle \begin{pmatrix} 9 & -8 & 5& -4 \\ 1 & 8 & -5 &3 \\ -2&-4&7 & -6 \\ 2 & -4 &-5& 6 \\ 2& -4& -5& 6 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 9 & 0&0&0 \\ 0 &8&0 &0 \\ 0&0& 7& 0 \\ 0&0&0&6 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 0&0&0&0 \\ 1&0&0&0 \\ -2&-4&0&0 \\ 2& -4& -5&0 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 0&-8&5&-4 \\ 0&0&-5&3 \\ 0&0&0&-6 \\ 0&0&0&0 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 9 \\ 7 \\ -11 \\ 9 \end{pmatrix} \) | \( \displaystyle \begin{pmatrix} 0 \\ 0 \\ 0 \\ 0 \end{pmatrix} \) | |

| Iterations | 0 | 46 | 47 | 48 | 49 | 50 |

| {1.65594, 0.119593, -0.528556, 0.587284} |

{1.66096, 0.116801, -0.526738, 0.585265} |

{1.65657, 0.119243, -0.528328, 0.587031} |

{1.66041, 0.117107, -0.526937, 0.585486} |

{1.65705, 0.118975, -0.528154, 0.586837} |

{1.65999, 0.117341, -0.52709, 0.585656} |

Therefore, we conclude that except the first coordinates, all other entries do not converge.

The following table presents Gauss--Seidel iterations with different initial guess.

| n | 0 | 1 | 2 | 3 | 4 | 5 | 10 |

|---|---|---|---|---|---|---|---|

| x₁ | 1.5 | 1.61111 | 2.0751 | 1.81082 | 2.06765 | 1.84769 | 2.00357 |

| x₂ | 0.5 | 0.923611 | 0.778807 | 0.946414 | 0.807257 | 0.930314 | 0.843734 |

| x₃ | 2.5 | 2.41667 | 2.54586 | 2.44308 | 2.53482 | 2.45492 | 2.51133 |

| x₄ | 3.5 | 3.59259 | 3.44905 | 3.56324 | 3.46131 | 3.550083.55008 | 3.48741 |

We repeat calculations with floating point arithmetic for 50 runs.

- Anton, Howard (2005), Elementary Linear Algebra (Applications Version) (9th ed.), Wiley International

- Beezer, R., A First Course in Linear Algebra, 2015.

- Beezer, R., A Second Course in Linear Algebra, 2013.