Duality in matrix/vector multiplication

Elementary Reduction Operations

{{y -> -(5/3) - (2 x)/3}}

{{y -> -(5/3) - (2 x)/3}}

Let us consider a system of m linear equations in standard form with n unknowns

The system in (1) is homogeneous provided (b1, b2, … , bm) = 0.

The entries of the coefficient matrix A may have more complicated form than just being scalars; we will see later that systems of linear equations and corresponding coefficient matrices could be functions depending on some parameters.

A first observation of the system of linear equations (1) or its matrix/vector form (2) is unimportant of notation x in it. If the coefficient matrix A and constant term b are determined by the problem under consideration (or by Nature), notation for unknown variables depends strictly on your proclivity. By the way, Mathematica does not include notation for unknown variable when corresponding command is invoked: LinearSolve[A, b]. Moreover, Mathematica accepts b written as an n-tuple or as a column vector.

Symbolize[NotationTemplateTag[Subscript[x, 1]]];

Symbolize[NotationTemplateTag[Subscript[x, 2]]];

Symbolize[NotationTemplateTag[Subscript[x, 3]]];

Reduce[{2 Subscript[x, 1] + 3 Subscript[x, 2] + 5 Subscript[x, 3] == 3, 3 Subscript[x, 1] - 2 Subscript[x, 2] - 2 Subscript[x, 3] == 1}, {Subscript[x, 1], Subscript[x, 2], Subscript[x, 3]}]

(* this is what you see in notebook: *)

\( \displaystyle \quad \begin{split} 2\,x_1 + 3\, x_2 + 5\, x_3 &= 3 \\ 3\,x_1 - 2\,x_2 - 2\, x_3 &= 1 \end{split} \)

Each equation in (1.1) can be written as dot products a₁ • x = 3 and a₂ • x = 1, where \[ {\bf a}_1 = \left( 2, 3, 5 \right) , \quad {\bf a}_2 = \left( 3, -2, -2 \right) , \] and x = (x₁, x₂, x₃) ∈ ℝ³. The solution set of the equation a • x = b is also a plane in ℝ³.

Symbolize[NotationTemplateTag[Subscript[a, 2]]];

Subscript[a, 1] = {2, 3, 5};

Subscript[a, 2] = {3, -2, -2};

x = {Subscript[x, 1], Subscript[x, 2], Subscript[x, 3]};

Subscript[a, 1] . x

|

|

|

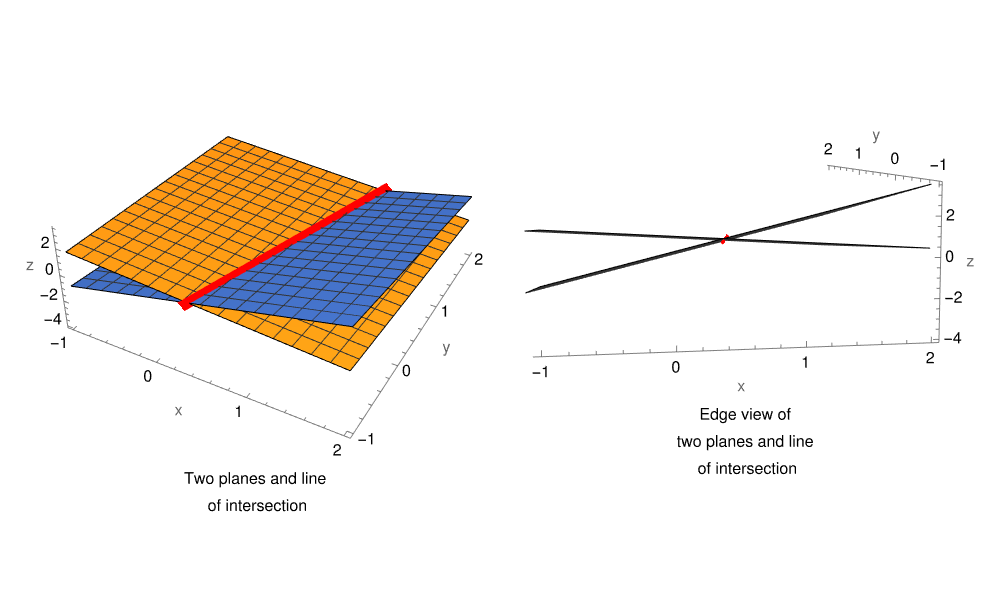

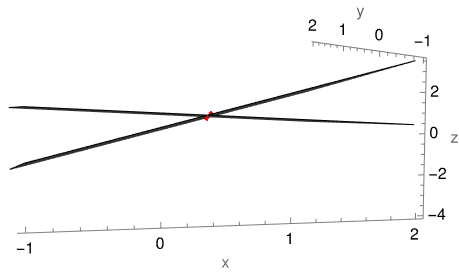

| Intersection of two planes defines a line. | Edge view of two planes and line of intersection. |

g[x_, y_] = (3*x - 2*y - 1)/2;

line = Solve[((3 - 2*x - 3*y)/5) == ((3*x - 2*y - 1)/2), y][[1, 1, 2]]; plt = Plot3D[{f[x, y], g[x, y]}, {x, -1, 2}, {y, -1, 2}, Axes -> True, Boxed -> False, AxesLabel -> {"x", "y", "z"}];

ppdLine = ParametricPlot3D[{x, line, f[x, line]}, {x, -1, 2}, Boxed -> False, PlotStyle -> {Red, Thickness[.025]}];

show1 = Labeled[Show[plt, ppdLine, ImageSize -> 275], "Two planes and line\n of intersection"];

show2 = Labeled[ Show[plt, ppdLine, ImageSize -> 275, ViewPoint -> {-0.6139237514164889, -3.308148189190016, 0.3595179909344441}], "Edge view of\ntwo planes and line\n of intersection"]; GraphicsRow[{show1, show2}]

We convert the given row problem (1.1) into column problem. Let V ⊆ ℝ² be spanned on three vectors: \[ V = \mbox{span}\left\{ \begin{bmatrix} 2 \\ 3 \end{bmatrix} , \ \begin{bmatrix} \phantom{-}3 \\ -2 \end{bmatrix} , \ \begin{bmatrix} \phantom{-}5 \\ -2 \end{bmatrix} \right\} . \] V is a subspace of ℝ², and in fact, it is generated by linear combinations \[ V = \left\{ x_1 \begin{bmatrix} 2 \\ 3 \end{bmatrix} + x_2 \begin{bmatrix} \phantom{-}3 \\ -2 \end{bmatrix} + x_3 \begin{bmatrix} \phantom{-}5 \\ -2 \end{bmatrix} \ : \ x_1 , x_2 , x_3 \mbox{ are real numbers} \right\} . \] System of equations (1.1) has a solution iff the right-hand side vector \( \displaystyle {\bf b} = \begin{bmatrix} 3 \\ 1 \end{bmatrix} \) belongs to V: \[ \begin{bmatrix} 3 \\ 1 \end{bmatrix} = x_1 \begin{bmatrix} 2 \\ 3 \end{bmatrix} + x_2 \begin{bmatrix} \phantom{-}3 \\ -2 \end{bmatrix} + x_3 \begin{bmatrix} \phantom{-}5 \\ -2 \end{bmatrix} . \] Our immediate task is to solve the system of equations (1.1). So we ask Mathematica for help. First, we introduce new variables in order to distinguish our new approach with the previously one.

Symbolize[NotationTemplateTag[Subscript[y, 1]]];

Symbolize[NotationTemplateTag[Subscript[y, 2]]];

Symbolize[NotationTemplateTag[Subscript[y, 3]]];

Solve[{2*Subscript[y, 1] + 3*Subscript[y, 2] + 5*Subscript[y, 3] == 3, 3*Subscript[y, 1] - 2*Subscript[y, 2] - 2*Subscript[y, 3] == 1}, {Subscript[y, 1], Subscript[y, 2]}]

scaledEq1 = (2*# & /@ eq1)(*the equations are scaled by each multiplier*) scaledEq2 = (3*# & /@ eq2)

3 (3 Subscript[y, 1] - 2 Subscript[y, 2] - 2 Subscript[y, 3]) == 3

6

13 Subscript[y, 1] + 4 Subscript[y, 3] == 9

Subscript[solny, 1] = %[[2]]

1/13 (9 - 4 Subscript[y, 3])

======================= Roger ==================

However, we can makes the system (1.2) looks nicer. In order to achive it, we use Chiò's method, invented by the Italian mathematician Felice Chiò (1813--1871) in 1853. At his time, computers wee not available and people did not (and still do not) feel comfortable working with fractions.

First, we observe that there is no need to continue writing the 'unknowns' x₁, x₂, x₃ because we work only with their coefficients. Therefore, we introduce vectors with corresponding coefficients that we abbreviate as \[ {\bf r}_1 = \begin{bmatrix} 2&3&5&3 \end{bmatrix} \qquad\mbox{and} \qquad {\bf r}_2 = \begin{bmatrix} 3&-2&-2&1 \end{bmatrix} . \] We multiply the first vector r₁ by 3 and subtract the second vectorr₂ multiplied by 2. This yields \[ 3\,{\bf r}_1 = \begin{bmatrix} 6&9&15&9 \end{bmatrix} \qquad\mbox{and} \qquad 2\,{\bf r}_2 = \begin{bmatrix} 6&-4&-4&2 \end{bmatrix} . \] So \[ {\bf w} = 3\,{\bf r}_1 - 2\,{\bf r}_2 = \begin{bmatrix} 0&13&19&7 \end{bmatrix} . \] Now we compare the second components of vectors w and r₂, we can make them equal upon multiplication w vt 2 and r₂, by 13: \[ 2\,{\bf w} = \begin{bmatrix} 0&26&38&14 \end{bmatrix} , \qquad 13\,{\bf r}_2 = \begin{bmatrix} 39&-26&-26&13 \end{bmatrix} . \] Adding these two vectors, we obtain \[ {\bf u} = 2\,{\bf w} + 13\,{\bf r}_2 = \begin{bmatrix} 39&0&12&27 \end{bmatrix} . \] Since system of equations corresponding vectors r₁ and r₂ is equivalent to the system of equations generated by vectors u and w, we claim that our system of equations is equivalent to the following system: \[ \begin{split} 0\,x_1 + 13\, x_2 + 19\, x_3 &= 7, \\ 39\,x_1 + 0\,x_2 + 12\, x_3 &= 27 . \end{split} \tag{1.4} \] Each of equations in (1.4) contains only two variables, so we can determine one of them: \[ 13\, x_2 = 7 - 19\, x_3 , \qquad 39\, x_1 = 27 - 12 \, x_3 \quad \iff \quad 13\, x_1 = 9 - 4\, x_ 3 . \] We can use the same approach eliminating variables in each equation of system (1.2). Again we start with vectors r₁ and r₂, but now eliminate variables x₁ and x₃. We can use vector w because its first component is zero. Comparing its third component (19) and the third component (−2) of r₂, we see that we need to build another vector \[ {\bf v} = 2\,{\bf w} + 19\,{\bf r}_2 = \begin{bmatrix} 39&12&0&33 \end{bmatrix} . \] Then the system of equations w, v contains only two unknown variables in equa equation \[ \begin{split} 0\,x_1 + 13\, x_2 + 19\, x_3 &= 7, \\ 39\,x_1 + 12\,x_2 + 0\, x_3 &= 33 . \end{split} \tag{1.5} \] Solving this system with respect to x₁ and x₃, we obtain \[ 13\, x_1 = 11 - 4\, x_2 , \qquad 19\, x_3 = 7 - 13\, x_ 2 , \] where x₂ is a free variable.

=================== to be checked ==============

\[ \begin{split} 0\,x_1 + 78\, x_2 + 114\, x_3 &= 42, \\ 13\,x_1 + 0\,x_2 + 4\, x_3 &= 9 . \end{split} \tag{1.3} \]Now we multiply the first equation by 2 and the second one by 5. Upon adding the results, we obtain \[ \begin{split} 4\,x_1 + 6\, x_2 + 10\, x_3 &= 6, \\ 19\,x_1 - 4\,x_2 &= 11 . \end{split} \] Solving for x₁ and then substitute into the first equation, we get the general solution \[ x_1 = \frac{1}{19} \left( 11 + 4\, x_2 \right) , \quad x_3 = \frac{1}{19} \left( 7 - 13\, x_2 \right) \] We verify with Mathematica:

There are three fundamentally different ways of interpreting any linear system (1):

-

Every system of linear equations (1) can be considered as a linear transformation T : 𝔽n ⇾ 𝔽m represented by the coefficient matrix A = [𝑎i,j] that maps x ∈ 𝔽n into 𝔽m:

\[ T({\bf x}) = \begin{pmatrix} a_{1,1} x_1 + a_{1,2} x_2 + \cdots + a_{1,n} x_n \\ \vdots \\ a_{m,1} x_1 + a_{m,2} x_2 + \cdots + a_{m,n} x_n \end{pmatrix} \in \mathbb{F}^m , \]where output is written in column form to save space and x = (x1, x2, … , xn) ∈ 𝔽n. Then solving linear system (1) is equivalent to determination of all (if any) inverse images of b under transformation T. If we rewrite vectors x and b in column form, we can define the linear transformation T in matrix/vector form:\[ {\bf A}\,{\bf x} = {\bf b} , \qquad {\bf A} \in \mathbb{F}^{m\times n} , \quad {\bf x} \in \mathbb{F}^{n\times 1} . \quad {\bf b} \in \mathbb{F}^{m\times 1} . \]

- Column problem: Find all ways to linearly combine the columns of A to produce b ∈ 𝔽m×1. This is a problem about linear combinations in the range 𝔽m×1.

-

Row problem: Find all vectors in the domain 𝔽n that simultaneously solve each of the linear equation

\[ < {\bf r}_i \mid {\bf x} > = a_{i,1} x_1 + a_{i,2} x_2 + \cdots + a_{i,n} x_n = b_i , \quad i =1, 2, \ldots , m, \]for each row ri(A) of matrix A. Equivalently, find all vectors in the intersection of the solution sets of all these equations <ri(A)| x> = bi, i = 1, 2, … , m. Geometrically, these sets are hyperspaces and they all lie in 𝔽n.

Theorem 1: Every system of linear equations has either no solution, exactly one solution, or infinitely many solutions.

If A x = b is the matrix form of the linear system, where A ∈ 𝔽m×n, x ∈ 𝔽n×1, and b ∈ 𝔽m×1, then there exist two distinct solutions of the linear system, which means that there exist two distinct solutions x₁ ≠ x₂ ∈ 𝔽n×1 such that A x₁ = b and A x₂ = b, Then for any constant k ∈ 𝔽, it is true that \[ {\bf A} \left( k\, x_1 + (1-k)\, x_2 \right) = k\, {\bf A}\,{\bf x}_1 + \left( 1- k \right) {\bf A}\,{\bf x}_2 = k\, {\bf b} + \left( 1- k \right) {\bf b} = {\bf b} . \] Hence, every linear combination of two vectors is a solution of the linear system. Since there are infinitely many such vectors (one for each choice of k ∈ 𝔽, the proof is completed.

So we can also read the system as a problem about linear combinations of the columns of A. In this case, for instance, A x = b becomes a column problem \[ x \begin{pmatrix} 1 \\ 3 \end{pmatrix} + y \begin{pmatrix} -2 \\ 5 \end{pmatrix} + z \begin{pmatrix} 3 \\ -2 \end{pmatrix} = \begin{pmatrix} -7 \\ 23 \end{pmatrix} , \] or \[ x\,{\bf a}_1 + y\,{\bf a}_2 + z\,{\bf a}_2 = {\bf b} , \] where \[ {\bf a}_1 = \begin{pmatrix} 1 \\ 3 \end{pmatrix} , \quad {\bf a}_2 = \begin{pmatrix} -2 \\ 5 \end{pmatrix} , \quad {\bf a}_3 = \begin{pmatrix} 3 \\ -2 \end{pmatrix} \] denotes column j of matrix A. Seen in this light, solving the linear system means finding a linear combination of the vectors {a1, a2, a3} that adds up to (−7, 23).

Each individual equation in the system (4.1) has the form ri(A) • (x, y, z) = bi, i = 1, 2, where ri(A) denotes row i of A (and bi is the ith entry in b ). In particular, \[ {\bf r}_1 ({\bf A}) = \left( 1, -2, 3 \right) , \quad {\bf r}_2 ({\bf A}) = \left( 3, 5, -2 \right) . \] We could write the 2-by-3 system (1.1) as \[ \begin{split} \left( 1, -2, 3 \right) \bullet (x, y, z) &= -7 , \\ \left( 3, 5, -2 \right) ) \bullet (x, y, z) &= 23 . \end{split} \] So from the row standpoint, the problem A x = b becomes: Find all vectors in ℝ³ (if any) that dot with each row of A to give the corresponding entry in b ∈ ℝ². This is the row problem. It is dual to the column problem.

What we need is a description of the solution set that makes the contents apparent. To get such a description, we may need to reformulate the problem in such a way that the general solution remains the same but its description becomes lucid. The key idea is the following:

In order to develop an efficient algorithm for solving linear systems of equations, we need to determine operations on the systems that do not change the solution sets. First, we observe that the system formulated in row form admits interchanging the order of summation. This is also related to reindexing the variables. For example, the following two systems are equivalent because they define the same general solution:

If you multiply any particular variable by a constant (making substitution xi = c·yi), this yields a corresponding division by c of the column in the coefficient matrix. For instance, using the previous example, we can divide the second column by 2 by making a corresponding scaling in the second variable:

Now we turn our attention to the row-problem. Obviously, the order of presenting equations in the system does not matter and any two or more equations can be exchanged without altering a solution set. Surprisingly, there is one more familiar operation on equations in system (1) that is safe to apply: any equation can be replaced by a linear combination of all other equations. A basic operation over the equations of a linear equation system is the linear combination.

Theorem 2: Every solution of a system of equations is also a solution of every linear combination of the equations of the system.

Let us name the m equations of a system with the symbols E₁ , E₂ , … , Em. The notations simplify the indication of the linear combinations; the expression c₁E₁ + c₂E₂ means: multiply the equation E₁ by c₁, the equation E₂ by c₂, and add. For example, let us consider the system of two equations

Corollary 1: If the equation Ei in the system (1) is a linear combination of the remaining equations, the system is equivalent to that obtained by eliminating the equation Ei.

Note: Although systems with different number of equations can be equivalent, their corresponding augmented matrices are NOT equivalent because the equivalence relation between matrices is applicable only to those matrices that have the same dimensions.

However, a linear combination condition can be splitted into two simple operations on the equations not only for pedagogical purposes, but for computational reason: it is easier to code using simple operations. Then together with swapping equations, we obtain three basic operations on a system of linear equations that are safe to perform:

| E1 (Interchange): | Interchange two equations (denoted by Ei ↕ Ej for swapping rows i and j). | |

| E2 (Scaling): | Multiply both sides of an equation by a non-zero constant (abbreviation: Ei ← c·Ei). | |

| E3 (Replacement): | Replace one equation by the sum of itself and a constant multiple of another equation (Ei ← Ei + c·Ej). |

Theorem 3: If a linear system is changed to another by one of the elementary reduction operations, then the two systems have the same set of solutions.

E2: Take any linear system of m equations in n unknowns, and let S be the solution set of the system. Let ri • x = bi be an equation in the system, let c be a nonzero real number, and suppose that a new linear system is formed by replacing the equation ri • x = bi with the equation c·ri • x = c·bi (leaving the other equations unchanged). Let S₁ be the solution set of the modified system. Now if y ∈ S, then y satisfies all the equations in the original system including the equation ri • x = bi. Hence, y satisfies the equation c·ri • x = c·bi, and all the other equations, so y ∈ S₁. On the other hand, if y ∈ S₁, then y satisfies the equation c·ri • x = c·bi together with the other equations in the original system. Since c ≠ 0, c·ri • x = c·bi implies (1/c)·c·ri • x = (1/c)·c·bi and hence ri • x = bi. Thus, y satisfies all the equations in the original system, so y ∈ S. This means that S₁ ⊆ S, so S₁ = S, and the systems of equations are equivalent.

E3: Let S be the solution set of the original system of equations. Let ri • x = bi and rj • x = bj be two equations in the system, let d be a real number, and suppose a new linear system is formed by replacing the equation ri • x = bi with the equation d·rj • x + ri • x = d·bj + bi (leaving the rest of the equations unchanged). Let S₂ be the solution set of the modified system. Now if y ∈ S, then y satisfies all the equations in the original system, including the equations ri • x = bi and rj • x = bj. Thus, ri • y = bi and rj • y = bj, so d·rj • y + ri • y = d·bj + bi. Hence, y satisfies all the equations in the modified system. Thus, y ∈ S₂ and we have S ⊆ S₂.

On the other hand, if y ∈ S₂, then y satisfies the equation d·rj • x + ri • x = d·bj + bi together with the other equations in the original system. In particular, y satisfies the original system equations rj • y = bj. So \[ \left[ d\,{\bf r}_j \bullet {\bf y} + {\bf r}_i \bullet {\bf y} \right] = \left[ d\,b_j + b_i \right] \] and \[ {\bf r}_j \bullet {\bf y} = b_j , \] where brackets are used to indicate groupings, and so we must have \[ -d \,{\bf r}_j \bullet {\bf y} + \left[ d\,{\bf r}_j \bullet {\bf y} + {\bf r}_i \bullet {\bf y} \right] = - d\,b_j + \left[ d\,b_j + b_i \right] . \] This yields rj • y = bj. Hence, the vector y satisfies all the equations in the original system, so y ∈ S. Thus, S₂ ⊆ S; hence S₂ = S, and the systems of equations are equivalent.

For further simplification, we multiply the second equation by (−3) and add to the first one. As a result, we get \[ \begin{array}{c} \mbox{E}_1 - 3\left( \mbox{E}_1 + \mbox{E}_2 \right) \ : \\ \mbox{E}_1 + \mbox{E}_2 \ : \end{array} \qquad \begin{split} -11\,x_1 + 2\, x_2 + 0\, x_3 &= -7, \\ 4\,x_1 + 0\,x_2 + 1\, x_3 &= 3 . \end{split} \tag{5.2} \] Although system (5.2) is still 2 × 3 system, each of the equations contains only two unknowns. This allows us to explicitly express its general solution \[ x_2 = \frac{1}{2} \left( 11\, x_1 - 7 \right) , \qquad x_3 = 3 - 4\, x_1 . \tag{5.3} \] In formula (5.3), variable x₁ is a free variable, which we denote by t: \[ \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \begin{bmatrix} 0 \\ -\frac{7}{2} \\ 3 \end{bmatrix} + t\, \begin{bmatrix} 1 \\ \frac{11}{2} \\ -4 \end{bmatrix} , \qquad t \in \mathbb{R} . \tag{5.4} \] We verify with Mathematica

b = {2, 1};

LinearSolve[A, b]

Then we swap the first two columns that leads to another coefficient matrix.

LinearSolve[B, b]

b = {2, 1};

LinearSolve[A2, b]

These operations (E1, E2, E3), known as elementary reduction operations, do not alter the set of solutions since the restrictions on the variables x1, x2, … , xn given by the new equations imply the restrictions given by the old ones (that is, we can undo the manipulations made to retrieve the old system). Therefore, these three row operations are reversible.

Indeed, these elementary operations are invertible because they can be undone by other elementary operations of the same type. In particular, the multiplication operation c·Ej is reversed by (1/c)·Ej, the swap operation of equations i and j, Ei ↕ Ej, is undone by itself, and the addition operation Ei + c·Ej is undone by Ri − c·Ej. This reversibility of elementary operations ensures that, not only are solutions to the linear system not lost when equations are modified, but no new solutions are introduced either (since no solutions are lost when undoing the elementary operations). In fact, this is exactly why we require c ≠ 0 in the multiplication operation c·Ej.

Joe multiplied the first equation in (6.J) by (−2) and add to the second one: \[ \begin{split} -2\,x_1 + 6\, x_2 &= 14, \\ 2\,x_1 + x_2 &= \phantom{-}7 \end{split} \qquad \Longrightarrow \qquad \begin{split} x_1 - 3\, x_2 &= -7, \\ 0\,x_1 + 7\,x_2 &= 21 . \end{split} \tag{J.2} \] The last equation contains zero multiplied by x₁; in this case, we say that x₁ is eliminated and we immediately read off the value of another variable: x₂ = 3.

Having x₂ = 3 at hand, Joe substituted its value in the first equation \[ x_1 - 3\cdot 3 = -7 \qquad \Longrightarrow \qquad x_1 = 2 . \]

On the other hand, Donald multiplied the second equation by 3, which does not change its solution set \[ \begin{split} x - 3\, y &= -7, \\ 6\,x + 3\,y &= 21 . \end{split} \] If we add theses two equations, it will give a simpler equation \[ 7\, x + 0\,y = 14 \qquad \Longrightarrow \qquad x = 2 . \] So performing some elementary operations, we transfer the given system to such a system that allows us determine one of the solutions by simple inspection.

- Anton, Howard (2005), Elementary Linear Algebra (Applications Version) (9th ed.), Wiley International

- Beezer, R., A First Course in Linear Algebra, 2015.

- Beezer, R., A Second Course in Linear Algebra, 2013.