Norms

To define how close two vectors are, and to define the convergence of sequences of vectors, mathematicians use a special device called a metric (which is actually a distance). However, since we need to incorporate vector addition, a metric in a vector space is generated by a norm.

Let V be a vector space over a field 𝔽, where 𝔽 is either the field ℝ of reals, or the field ℚ of rational numbers, or the field ℂ of complex numbers. A norm on V is a function ∥ ∥ : V → ℝ+ = { r ∈ ℝ : r ≥ 0 }, assigning a nonnegative real number ∥u∥ to any vector u ∈ V, and satisfying the following conditions for all x, y ∈ V and every scalar k ∈ 𝔽:

- Positivity: ∥x∥ ≥ 0 and ∥x∥ = 0 if and only if x = 0.

- Homogeneity: ∥kx∥ = |k|·∥x∥.

- Triangle inequality: ∥x+y∥ ≤ ∥x∥ + ∥y∥.
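The three conditions can be spot-checked numerically for a concrete candidate. The sketch below assumes the familiar Euclidean length as the norm and uses hand-picked sample vectors; the helper name `euclidean_norm` is illustrative only. A check on a few vectors is evidence, not a proof.

```python
import math

def euclidean_norm(x):
    """Square root of the sum of squared absolute values of the entries."""
    return math.sqrt(sum(abs(t) ** 2 for t in x))

x = [3.0, -4.0]
y = [1.0, 2.0]
k = -2.5

# Positivity: nonzero vectors have positive norm, the zero vector has norm 0.
positivity_ok = euclidean_norm(x) > 0 and euclidean_norm([0.0, 0.0]) == 0.0

# Homogeneity: scaling the vector by k scales the norm by |k|.
scaled = [k * t for t in x]
homogeneity_ok = math.isclose(euclidean_norm(scaled), abs(k) * euclidean_norm(x))

# Triangle inequality: the norm of a sum is at most the sum of the norms.
total = [a + b for a, b in zip(x, y)]
triangle_ok = euclidean_norm(total) <= euclidean_norm(x) + euclidean_norm(y)

print(positivity_ok, homogeneity_ok, triangle_ok)
```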

Out of many possible norms, we mention the four most important ones:

- For every x = [x1, x2, … , xn] ∈ V, we have the 1-norm:

  \[ \| {\bf x}\|_1 = \sum_{k=1}^n | x_k | = |x_1 | + |x_2 | + \cdots + |x_n |. \]

  It is also called the Taxicab norm or Manhattan norm.

- The Euclidean norm or ℓ²-norm is

  \[ \| {\bf x}\|_2 = \left( \sum_{k=1}^n x_k^2 \right)^{1/2} = \left( x_1^2 + x_2^2 + \cdots + x_n^2 \right)^{1/2} . \]

- The Chebyshev norm or sup-norm ∥x∥∞ is defined by

  \[ \| {\bf x}\|_{\infty} = \max_{1 \le k \le n} \left\{ | x_k | \right\} . \]

- The ℓp-norm (for p ≥ 1) is

  \[ \| {\bf x}\|_p = \left( \sum_{k=1}^n | x_k |^p \right)^{1/p} = \left( |x_1|^p + |x_2|^p + \cdots + |x_n|^p \right)^{1/p} . \]
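The four norms above translate directly into code. The following is a minimal sketch in plain Python; the function names (`norm_1`, `norm_2`, `norm_inf`, `norm_p`) are chosen here for illustration. Absolute values are taken inside the ℓp sum so that negative entries and odd p are handled correctly.

```python
import math

def norm_1(x):
    """Taxicab (Manhattan) norm: sum of absolute values."""
    return sum(abs(t) for t in x)

def norm_2(x):
    """Euclidean norm: square root of the sum of squares."""
    return math.sqrt(sum(abs(t) ** 2 for t in x))

def norm_inf(x):
    """Chebyshev (sup) norm: largest absolute value of an entry."""
    return max(abs(t) for t in x)

def norm_p(x, p):
    """General lp-norm; only a norm when p >= 1."""
    if p < 1:
        raise ValueError("p must be at least 1")
    return sum(abs(t) ** p for t in x) ** (1.0 / p)

x = [3.0, -4.0, 12.0]
print(norm_1(x))     # 19.0
print(norm_2(x))     # 13.0
print(norm_inf(x))   # 12.0
print(norm_p(x, 3))  # lies between norm_inf(x) and norm_2(x)
```

Taking p = 1 or p = 2 in `norm_p` reproduces the first two norms up to rounding.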

These norms are comparable: for every vector x with n components, the following inequalities hold:

- \( \displaystyle \| {\bf x} \|_{\infty} \le \| {\bf x} \|_{1} \le n\,\| {\bf x} \|_{\infty} , \)

- \( \displaystyle \| {\bf x} \|_{\infty} \le \| {\bf x} \|_{2} \le \sqrt{n}\,\| {\bf x} \|_{\infty} , \)

- \( \displaystyle \| {\bf x} \|_{2} \le \| {\bf x} \|_{1} \le \sqrt{n}\,\| {\bf x} \|_{2} .\)
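The three chains of inequalities can be tested on random vectors. This is a sketch under stated assumptions: the helper `check_inequalities`, the sample size, and the small tolerance `tol` (to absorb floating-point rounding) are all illustrative choices, and passing the check is not a proof.

```python
import math
import random

def norm_1(x):
    return sum(abs(t) for t in x)

def norm_2(x):
    return math.sqrt(sum(abs(t) ** 2 for t in x))

def norm_inf(x):
    return max(abs(t) for t in x)

def check_inequalities(x, tol=1e-12):
    """Return True if all six one-sided bounds hold for the vector x."""
    n = len(x)
    n1, n2, ninf = norm_1(x), norm_2(x), norm_inf(x)
    return (
        ninf <= n1 + tol and n1 <= n * ninf + tol
        and ninf <= n2 + tol and n2 <= math.sqrt(n) * ninf + tol
        and n2 <= n1 + tol and n1 <= math.sqrt(n) * n2 + tol
    )

random.seed(0)  # fixed seed so the run is reproducible
samples = [[random.uniform(-10, 10) for _ in range(5)] for _ in range(1000)]
all_hold = all(check_inequalities(x) for x in samples)
print(all_hold)
```

The upper bounds are attained by vectors whose entries all have the same absolute value, such as [1, 1, 1, 1], and the lower bounds by vectors with a single nonzero entry.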

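With the dot product, we can assign a length to a vector, which is also called the Euclidean norm or 2-norm:

\[ \| {\bf x}\| = \sqrt{ {\bf x} \cdot {\bf x} } = \sqrt{ x_1^2 + x_2^2 + \cdots + x_n^2 } . \]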

The dot product is a particular case of a more general bilinear form, known as an inner product. An inner product space is a vector space with an additional structure called an inner product. So every inner product space inherits a norm, defined as in the Euclidean case by ∥x∥ = √⟨x, x⟩, and becomes a metric space. In linear algebra, functional analysis, and related areas of mathematics, a norm is a function that assigns a strictly positive length or size to each vector in a vector space, save for the zero vector, which is assigned a length of zero.

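The definition of the norm in ℂⁿ needs an accommodation for complex conjugates, which are denoted either by an overline (mathematics) or by an asterisk (physics and engineering):

\[ \| {\bf x}\|_2 = \sqrt{ \overline{x_1}\, x_1 + \overline{x_2}\, x_2 + \cdots + \overline{x_n}\, x_n } = \sqrt{ |x_1|^2 + |x_2|^2 + \cdots + |x_n|^2 } . \]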
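For real vectors the length is ∥x∥ = √(x · x); for complex vectors one factor in each product is conjugated, so that the quantity under the root is real and nonnegative. A minimal sketch follows, assuming the convention that the second argument is conjugated (the opposite convention is also common); the names `inner` and `norm_from_inner` are illustrative.

```python
import math

def inner(x, y):
    """Sum of x_k * conj(y_k); reduces to the dot product for real vectors."""
    return sum(a * complex(b).conjugate() for a, b in zip(x, y))

def norm_from_inner(x):
    """Length induced by the inner product: sqrt(<x, x>)."""
    # <x, x> is real and nonnegative; .real drops the zero imaginary part.
    return math.sqrt(inner(x, x).real)

print(norm_from_inner([3.0, 4.0]))    # 5.0
print(norm_from_inner([3 + 4j, 0j]))  # 5.0

# Without the conjugate, the "square" of a complex entry need not be real:
print((3 + 4j) * (3 + 4j))            # (-7+24j)
```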

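For the norm induced by an inner product (in particular, for the Euclidean norm induced by the dot product), the Cauchy--Bunyakovsky--Schwarz (or simply CBS) inequality holds:

\begin{equation} \label{EqVector.1}
| {\bf x} \cdot {\bf y} | \le \| {\bf x} \| \, \| {\bf y} \| .
\end{equation}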
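For the dot product and the Euclidean norm, the CBS inequality reads |x · y| ≤ ∥x∥₂ ∥y∥₂. The sketch below checks it on random real vectors, shows that equality occurs for parallel vectors, and illustrates that the bound relies on the norm coming from the dot product: it can fail if the sup-norm is substituted. Helper names, the sample size, and the tolerance `tol` are assumptions made for illustration.

```python
import math
import random

def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

def norm_2(x):
    return math.sqrt(dot(x, x))

def norm_inf(x):
    return max(abs(t) for t in x)

def cbs_holds(x, y, tol=1e-9):
    """Check |x . y| <= ||x||_2 ||y||_2 up to a rounding tolerance."""
    return abs(dot(x, y)) <= norm_2(x) * norm_2(y) + tol

random.seed(1)  # fixed seed so the run is reproducible
pairs = [
    ([random.uniform(-5, 5) for _ in range(4)],
     [random.uniform(-5, 5) for _ in range(4)])
    for _ in range(1000)
]
all_hold = all(cbs_holds(x, y) for x, y in pairs)
print(all_hold)

# Equality case: y is a scalar multiple of x.
x = [1.0, 2.0, 3.0]
y = [2.0, 4.0, 6.0]
equality = math.isclose(abs(dot(x, y)), norm_2(x) * norm_2(y))
print(equality)

# With the sup-norm in place of the Euclidean norm the bound can fail:
u = [1.0, 1.0]
fails_for_sup_norm = abs(dot(u, u)) > norm_inf(u) * norm_inf(u)
print(fails_for_sup_norm)
```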

|

|

|

||

|---|---|---|---|---|

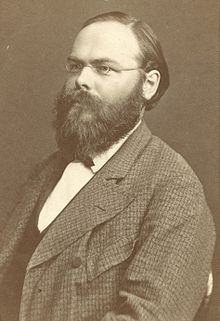

| Augustin-Louis Cauchy | Viktor Yakovlevich Bunyakovsky | Hermann Amandus Schwarz |

The inequality \eqref{EqVector.1} for sums was published by Augustin-Louis Cauchy (1789--1857) in 1821, while the corresponding inequality for integrals was first proved by Viktor Yakovlevich Bunyakovsky (1804--1889) in 1859. The modern proof of the integral inequality (which essentially repeats Bunyakovsky's) was given by Hermann Amandus Schwarz (1843--1921) in 1888. ■